Merge branch 'dev'

@ -5,15 +5,11 @@
.vs
.vscode
docker-compose*.yml
docker-compose.dcproj
*.sln
!eShopOnContainers-ServicesAndWebApps.sln
*.md
hosts
LICENSE
*.testsettings
vsts-docs
test
ServiceFabric
readme
k8s

@ -31,4 +27,13 @@ cli-linux
**/wwwroot/lib/*
global.json
**/appsettings.localhost.json
src/Web/WebSPA/wwwroot/
src/Web/WebSPA/wwwroot/
packages/
csproj-files/
test-results/
TestResults/
src/Mobile/
src/Web/Catalog.WebForms/
src/Web/WebMonolithic/
src/BuildingBlocks/CommandBus/
src/Services/Marketing/Infrastructure/

12

.gitignore

vendored

@ -26,6 +26,9 @@ bld/
# Visual Studio 2015 cache/options directory
.vs/

# Dockerfile projects folder for restore-packages script
csproj-files/

# .js files created on build:
src/Web/WebMVC/wwwroot/js/site*

@ -42,6 +45,8 @@ src/Web/WebMVC/wwwroot/js/site*
*.VisualState.xml
TestResult.xml

tests-results/

# Build Results of an ATL Project
[Dd]ebugPS/
[Rr]eleasePS/

@ -269,4 +274,9 @@ pub/
.mfractor

# Ignore HealthCheckdb
*healthchecksdb*
*healthchecksdb*

# Ignores all extra inf.yaml and app.yaml that are copied by prepare-devspaces.ps1
src/**/app.yaml
src/**/inf.yaml

111

README.md

@ -1,72 +1,28 @@
# eShopOnContainers - Microservices Architecture and Containers based Reference Application (**BETA state** - Visual Studio 2017 and CLI environments compatible)
# eShopOnContainers - Microservices Architecture and Containers based Reference Application (**BETA state** - Visual Studio and CLI environments compatible)
Sample .NET Core reference application, powered by Microsoft, based on a simplified microservices architecture and Docker containers.

## Linux Build Status for 'dev' branch

Dev branch contains the latest "stable" code, and their images are tagged with `:dev` in our [Docker Hub](https://cloud.docker.com/u/eshop/repository/list):

Api Gateways base image
| Basket API | Catalog API | Identity API | Location API |
| ------------- | ------------- | ------------- | ------------- |
| [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=199&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=197&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=200&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=202&branchName=dev) |

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=201&branchName=dev)
| Marketing API | Ordering API | Payment API | Api Gateways base image |
| ------------- | ------------- | ------------- | ------------- |
| [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=203&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=198&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=205&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=201&branchName=dev)

Basket API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=199&branchName=dev)

Catalog API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=197&branchName=dev)

Identity API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=200&branchName=dev)

Location API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=202&branchName=dev)

Marketing API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=203&branchName=dev)

Ordering API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=198&branchName=dev)

Payment API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=205&branchName=dev)

Webhooks API

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=207&branchName=dev)

Web Shopping Aggregator

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=206&branchName=dev)

Mobile Shopping Aggregator

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=204&branchName=dev)

Webbhooks demo client

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=208&branchName=dev)

WebMVC Client

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=209&branchName=dev)

WebSPA Client

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=210&branchName=dev)

Web Status

[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=211&branchName=dev)
| Web Shopping Aggregator | Mobile Shopping Aggregator | WebMVC Client | WebSPA Client |
| ------------- | ------------- | ------------- | ------------- |
| [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=206&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=204&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=209&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=210&branchName=dev) |

| Web Status | Webhooks API | Webbhooks demo client |
| ------------- | ------------- | ------------- |
[](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=211&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=207&branchName=dev) | [](https://msftdevtools.visualstudio.com/eShopOnContainers/_build/latest?definitionId=208&branchName=dev) |

## IMPORTANT NOTES!

**You can use either the latest version of Visual Studio or simply Docker CLI and .NET CLI for Windows, Mac and Linux**.

**Note for Pull Requests (PRs)**: We accept pull request from the community. When doing it, please do it onto the **DEV branch** which is the consolidated work-in-progress branch. Do not request it onto Master branch, if possible.

@ -74,7 +30,14 @@ Web Status
**NEWS / ANNOUNCEMENTS**
Do you want to be up-to-date on .NET Architecture guidance and reference apps like eShopOnContainers? --> Subscribe by "WATCHING" this new GitHub repo: https://github.com/dotnet-architecture/News

## Update to .NET Core 3

> There's currently an update to .NET Core 3 going on in the branch [features/migration-dotnet3](https://github.com/dotnet-architecture/eShopOnContainers/tree/features/migration-dotnet3).
>
> You can monitor this branch, but it's being changed frequently, community contributions will be accepted once it's officially released.

## Updated for .NET Core 2.2 "wave" of technologies

eShopOnContainers is updated to .NET Core 2.x (currently updated to 2.2) "wave" of technologies. Not just compilation but also new recommended code in EF Core, ASP.NET Core, and other new related versions.

The **dockerfiles** in the solution have also been updated and now support [**Docker Multi-Stage**](https://blogs.msdn.microsoft.com/stevelasker/2017/09/11/net-and-multistage-dockerfiles/) since mid-December 2017.

@ -82,6 +45,7 @@ The **dockerfiles** in the solution have also been updated and now support [**Do
>**PLEASE** Read our [branch guide](./branch-guide.md) to know about our branching policy

> ### DISCLAIMER
>
> **IMPORTANT:** The current state of this sample application is **BETA**, because we are constantly evolving towards newly released technologies. Therefore, many areas could be improved and change significantly while refactoring the current code and implementing new features. Feedback with improvements and pull requests from the community will be highly appreciated and accepted.
>
> This reference application proposes a simplified microservice oriented architecture implementation to introduce technologies like .NET Core with Docker containers through a comprehensive application. The chosen domain is eShop/eCommerce but simply because it is a well-known domain by most people/developers.

@ -91,11 +55,11 @@ However, this sample application should not be considered as an "eCommerce refer

|

||||

|

||||

|

||||

|

||||

> Read the planned <a href='https://github.com/dotnet/eShopOnContainers/wiki/01.-Roadmap-and-Milestones-for-future-releases'>Roadmap and Milestones for future releases of eShopOnContainers</a> within the Wiki for further info about possible new implementations and provide feedback at the <a href='https://github.com/dotnet/eShopOnContainers/issues'>ISSUES section</a> if you'd like to see any specific scenario implemented or improved. Also, feel free to discuss on any current issue.

|

||||

> Read the planned <a href='https://github.com/dotnet-architecture/eShopOnContainers/wiki/Roadmap'>Roadmap</a> within the Wiki for further info about possible new implementations and provide feedback at the <a href='https://github.com/dotnet/eShopOnContainers/issues'>ISSUES section</a> if you'd like to see any specific scenario implemented or improved. Also, feel free to discuss on any current issue.

|

||||

|

||||

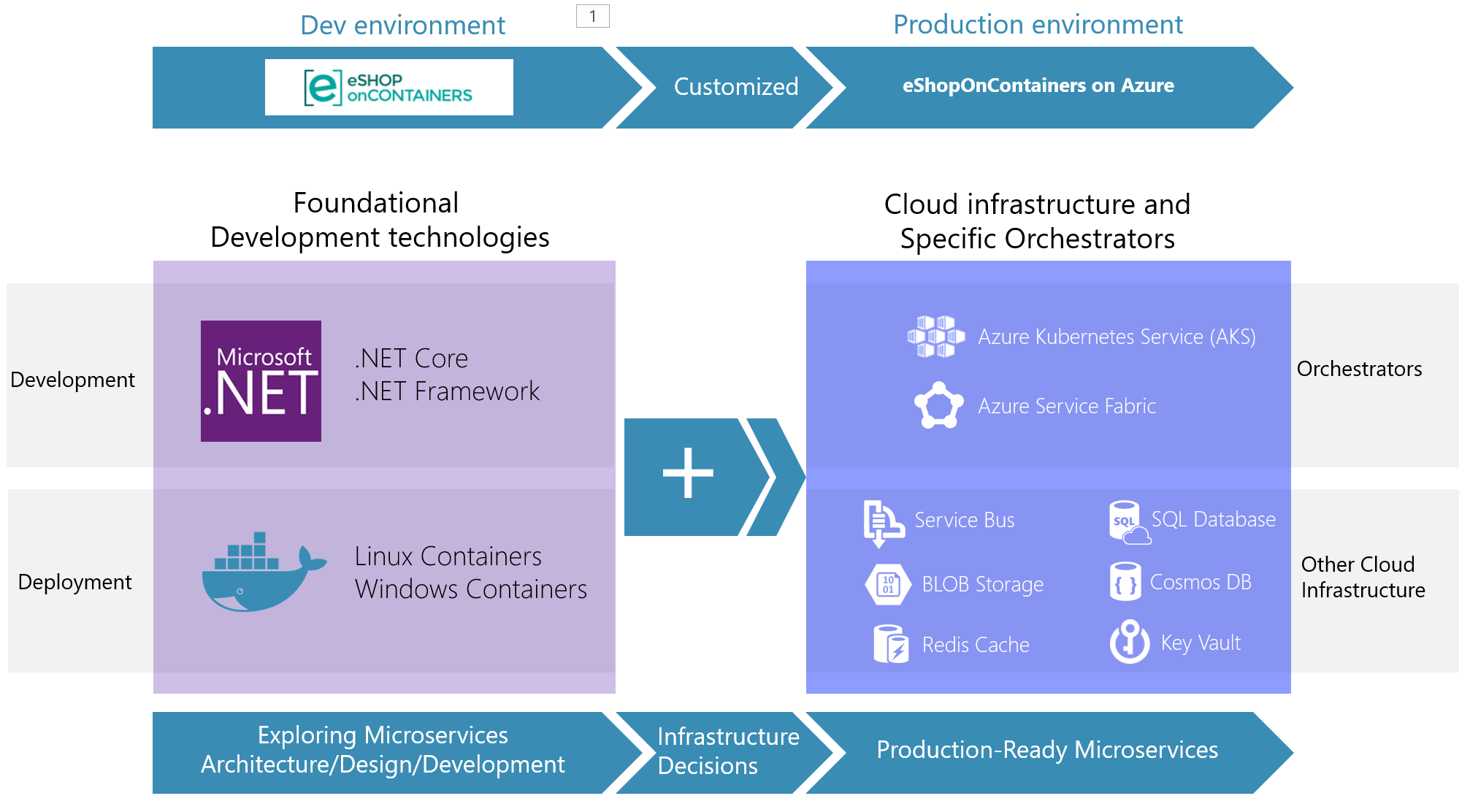

### Architecture overview

|

||||

This reference application is cross-platform at the server and client side, thanks to .NET Core services capable of running on Linux or Windows containers depending on your Docker host, and to Xamarin for mobile apps running on Android, iOS or Windows/UWP plus any browser for the client web apps.

|

||||

The architecture proposes a microservice oriented architecture implementation with multiple autonomous microservices (each one owning its own data/db) and implementing different approaches within each microservice (simple CRUD vs. DDD/CQRS patterns) using Http as the communication protocol between the client apps and the microservices and supports asynchronous communication for data updates propagation across multiple services based on Integration Events and an Event Bus (a light message broker, to choose between RabbitMQ or Azure Service Bus, underneath) plus other features defined at the <a href='https://github.com/dotnet/eShopOnContainers/wiki/01.-Roadmap-and-Milestones-for-future-releases'>roadmap</a>.

|

||||

The architecture proposes a microservice oriented architecture implementation with multiple autonomous microservices (each one owning its own data/db) and implementing different approaches within each microservice (simple CRUD vs. DDD/CQRS patterns) using Http as the communication protocol between the client apps and the microservices and supports asynchronous communication for data updates propagation across multiple services based on Integration Events and an Event Bus (a light message broker, to choose between RabbitMQ or Azure Service Bus, underneath) plus other features defined at the <a href='https://github.com/dotnet-architecture/eShopOnContainers/wiki/Roadmap'>roadmap</a>.

|

||||

<p>

|

||||

<img src="img/eshop_logo.png">

|

||||

<img src="https://user-images.githubusercontent.com/1712635/38758862-d4b42498-3f27-11e8-8dad-db60b0fa05d3.png">

|

||||

@ -125,6 +89,12 @@ The architecture proposes a microservice oriented architecture implementation wi
> <p> A similar case is defined in regard to Redis cache running as a container for the development environment. Or a No-SQL database (MongoDB) running as a container.
> <p> However, in a real production environment it is recommended to have your databases (SQL Server, Redis, and the NO-SQL database, in this case) in HA (High Available) services like Azure SQL Database, Redis as a service and Azure CosmosDB instead the MongoDB container (as both systems share the same access protocol). If you want to change to a production configuration, you'll just need to change the connection strings once you have set up the servers in an HA cloud or on-premises.

> ### Important Note on EventBus
> In this solution's current EventBus is a simplified implementation, mainly used for learning purposes (development and testing), so it doesn't handle all production scenarios, most notably on error handling. <p>
> The following forks provide production environment level implementation examples with eShopOnContainers :
> * Implementation with [NServiceBus](https://github.com/Particular/NServiceBus) : https://github.com/Particular/eShopOnContainers
> * Implementation with [CAP](https://github.com/dotnetcore/CAP) : https://github.com/yang-xiaodong/eShopOnContainers

## Related documentation and guidance
While developing this reference application, we've been creating a reference <b>Guide/eBook</b> focusing on <b>architecting and developing containerized and microservice based .NET Applications</b> (download link available below) which explains in detail how to develop this kind of architectural style (microservices, Docker containers, Domain-Driven Design for certain microservices) plus other simpler architectural styles, like monolithic apps that can also live as Docker containers.
<p>

@ -168,25 +138,20 @@ Finally, those microservices are consumed by multiple client web and mobile apps

|

||||

<img src="img/xamarin-mobile-App.png">

|

||||

|

||||

## Setting up your development environment for eShopOnContainers

|

||||

### Visual Studio 2017 and Windows based

|

||||

This is the more straightforward way to get started:

|

||||

https://github.com/dotnet-architecture/eShopOnContainers/wiki/02.-Setting-eShopOnContainers-in-a-Visual-Studio-2017-environment

|

||||

|

||||

### CLI and Windows based

|

||||

For those who prefer the CLI on Windows, using dotnet CLI, docker CLI and VS Code for Windows:

|

||||

https://github.com/dotnet/eShopOnContainers/wiki/03.-Setting-the-eShopOnContainers-solution-up-in-a-Windows-CLI-environment-(dotnet-CLI,-Docker-CLI-and-VS-Code)

|

||||

### Windows based (CLI and Visual Studio)

|

||||

|

||||

### CLI and Mac based

|

||||

For those who prefer the CLI on a Mac, using dotnet CLI, docker CLI and VS Code for Mac:

|

||||

https://github.com/dotnet-architecture/eShopOnContainers/wiki/04.-Setting-eShopOnContainer-solution-up-in-a-Mac,-VS-for-Mac-or-with-CLI-environment--(dotnet-CLI,-Docker-CLI-and-VS-Code)

|

||||

<https://github.com/dotnet-architecture/eShopOnContainers/wiki/Windows-setup>

|

||||

|

||||

### Mac based (CLI ans Visual Studio for Mac)

|

||||

|

||||

<https://github.com/dotnet-architecture/eShopOnContainers/wiki/Mac-setup>

|

||||

|

||||

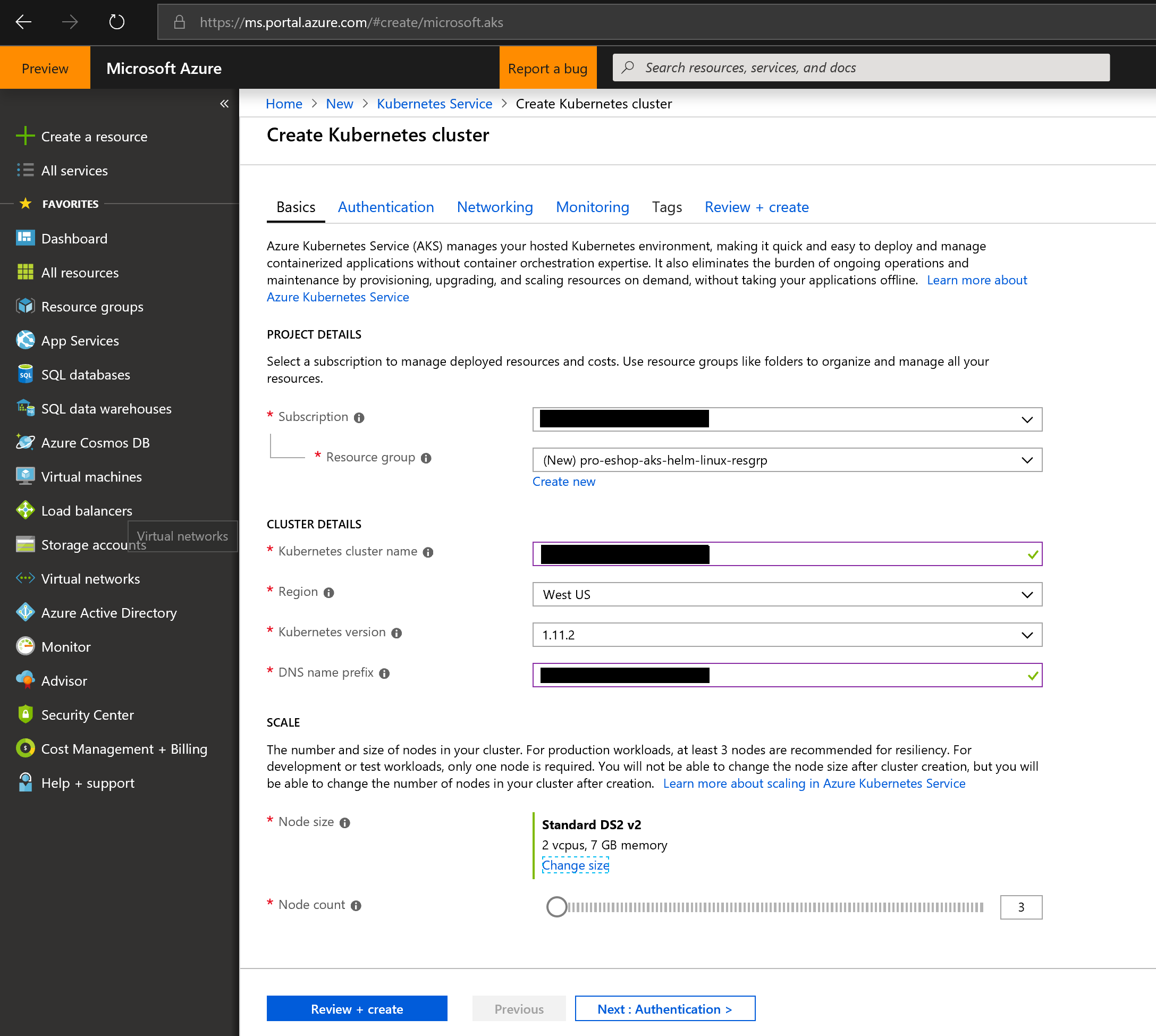

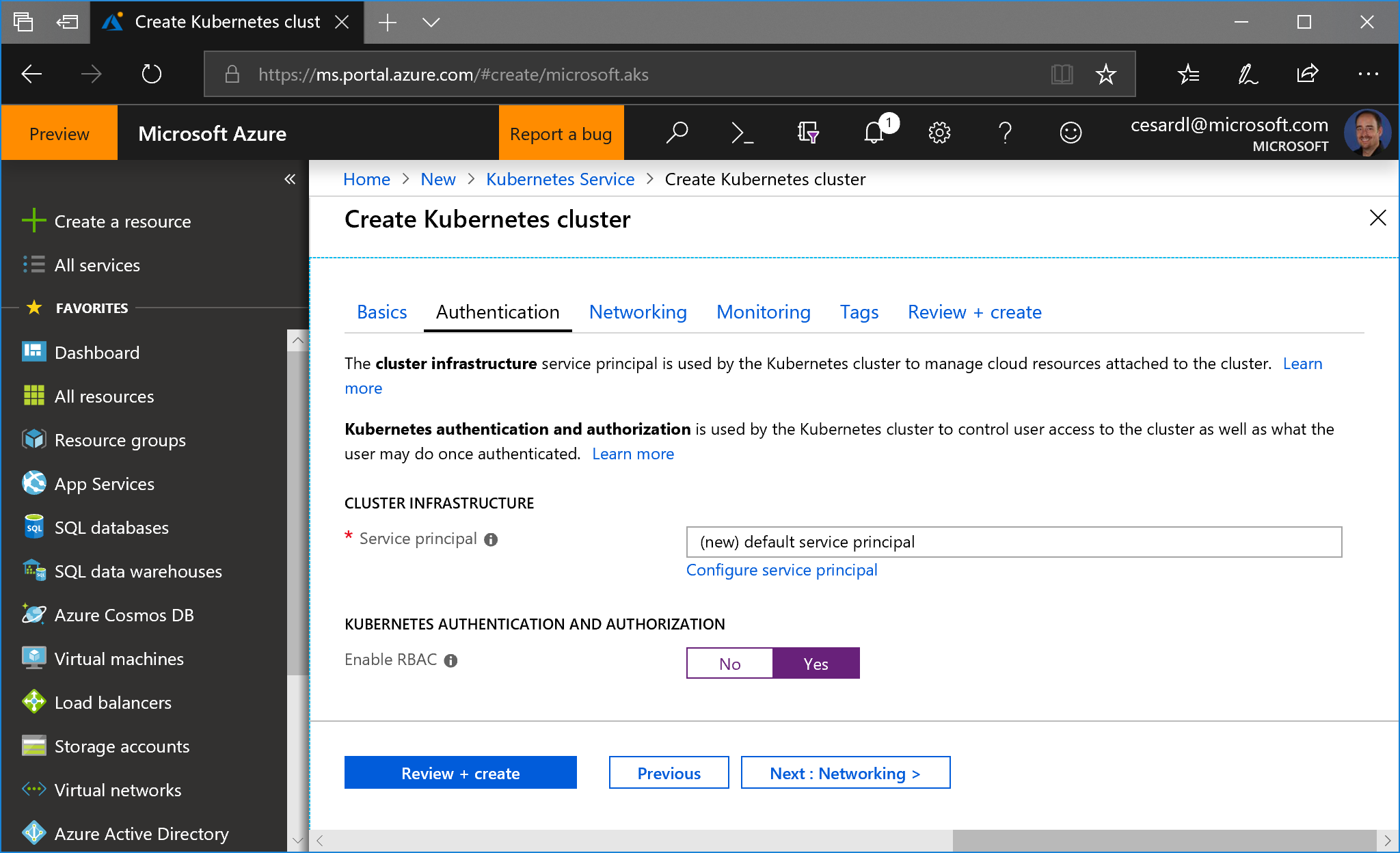

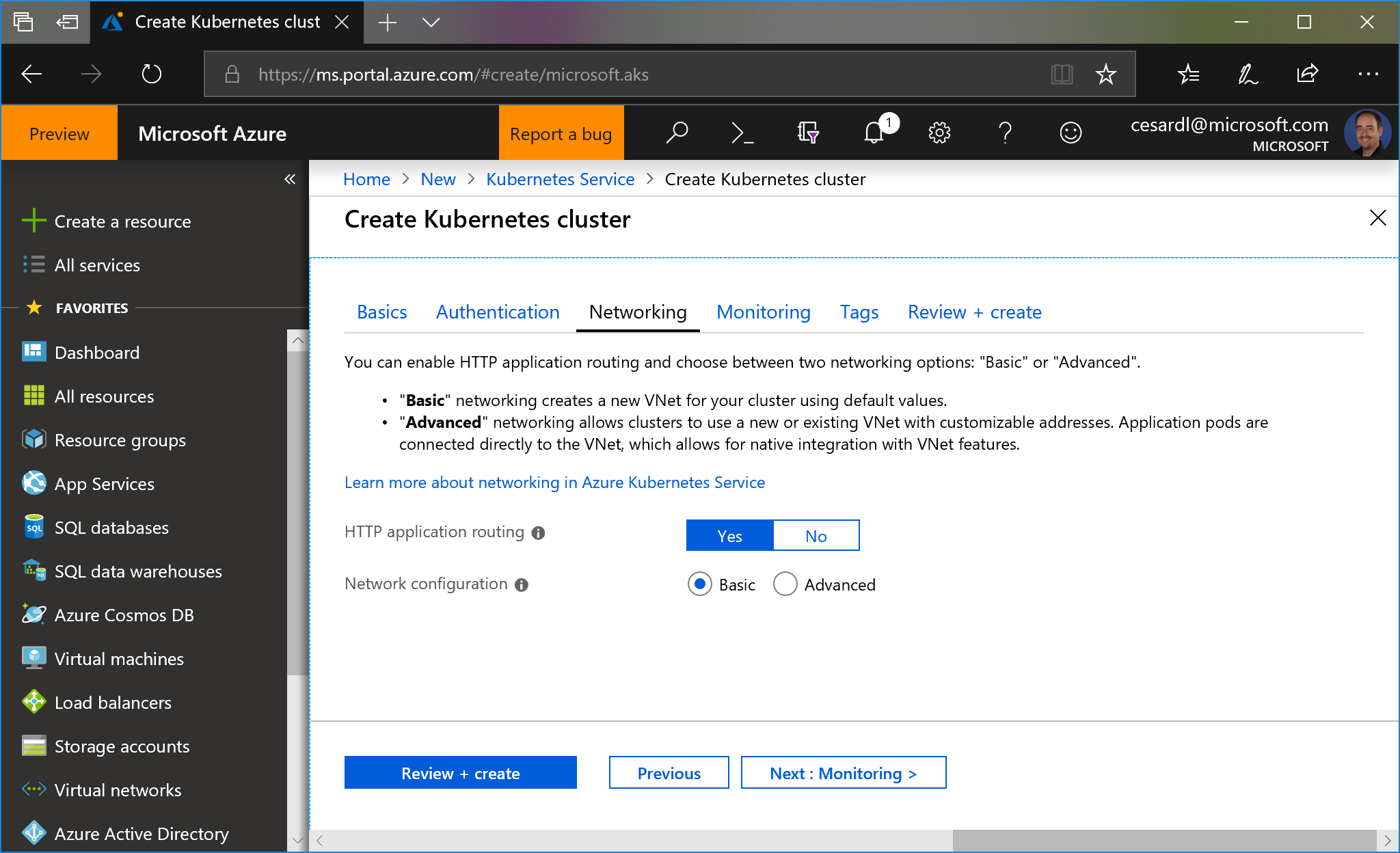

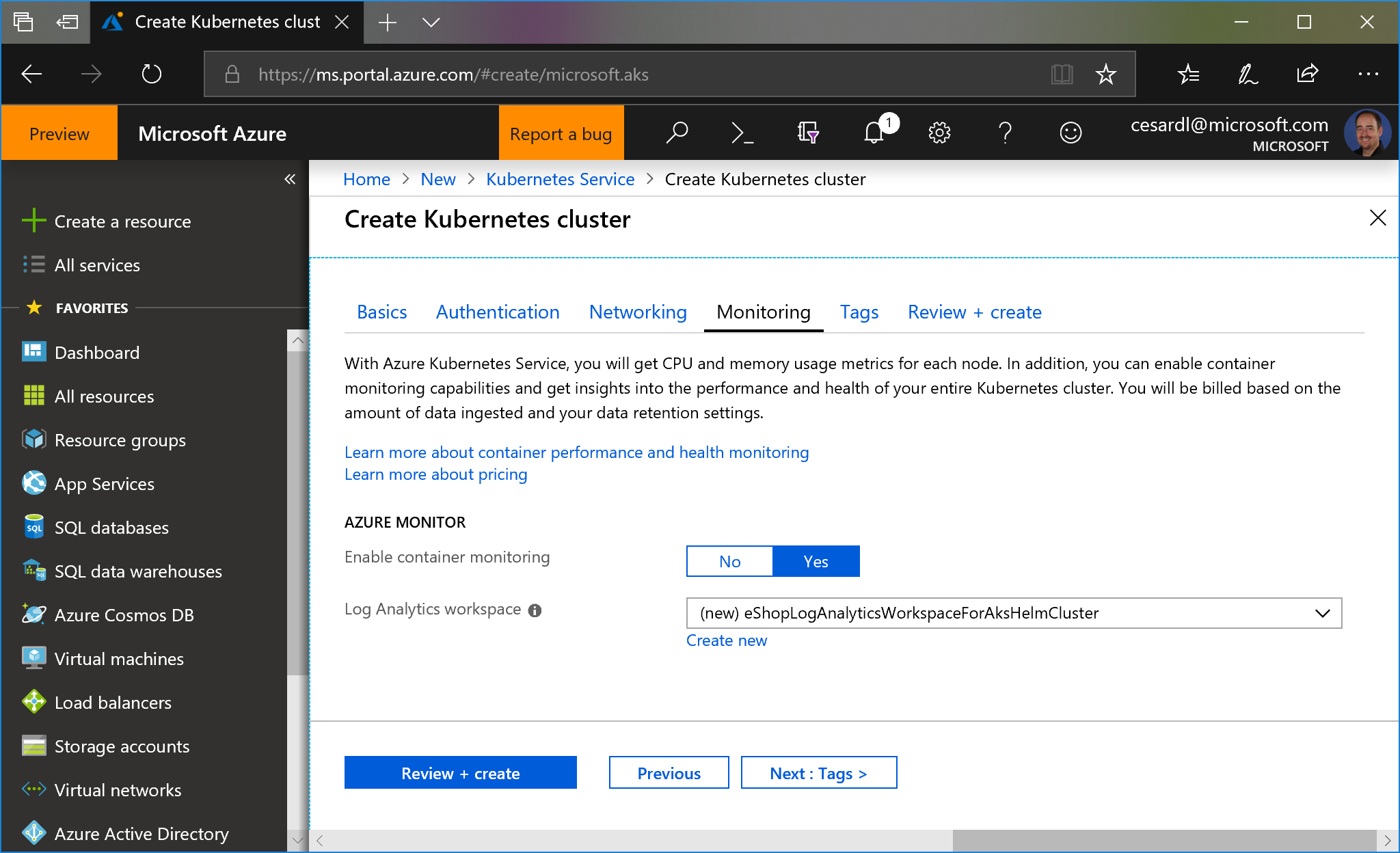

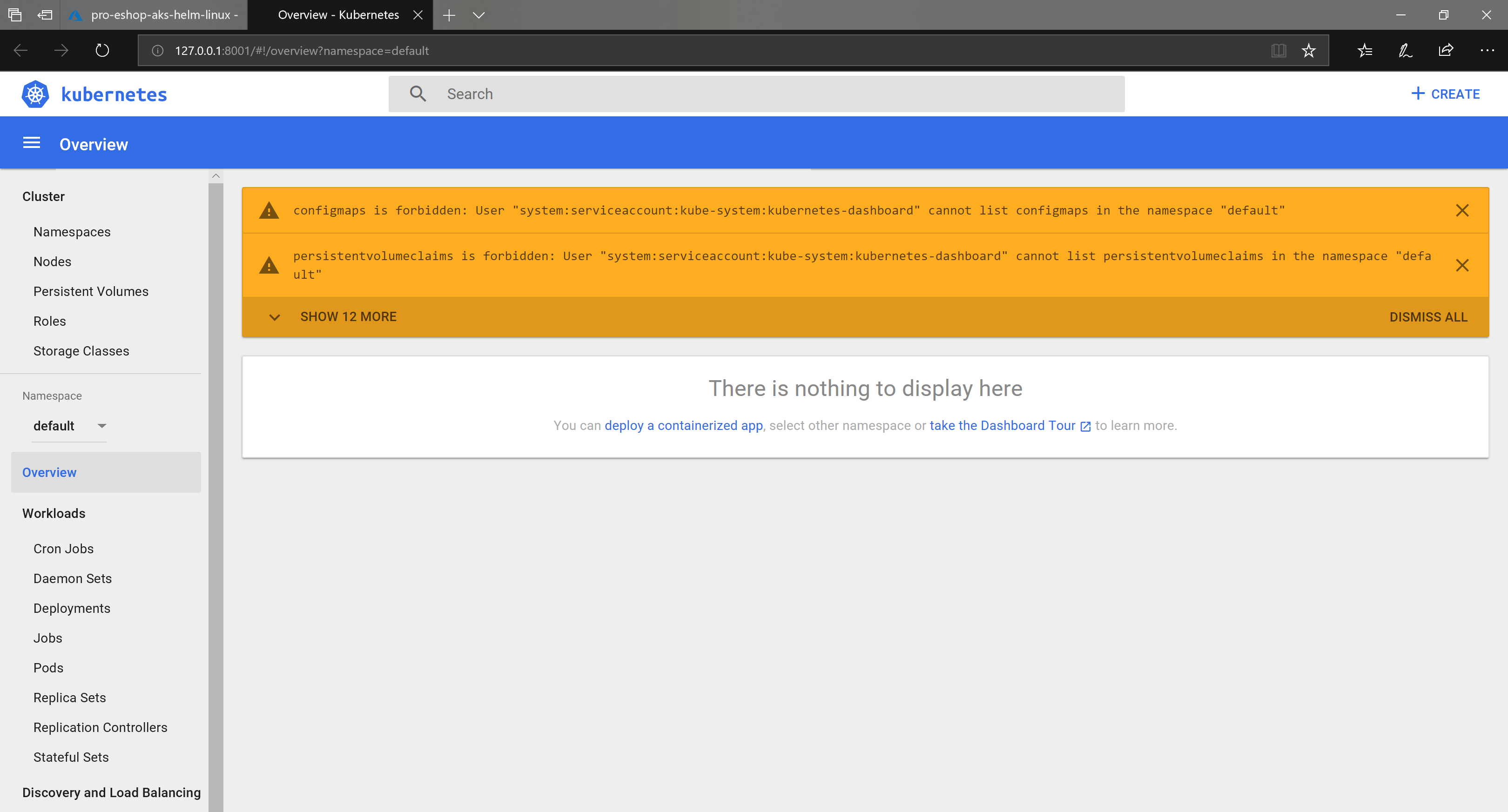

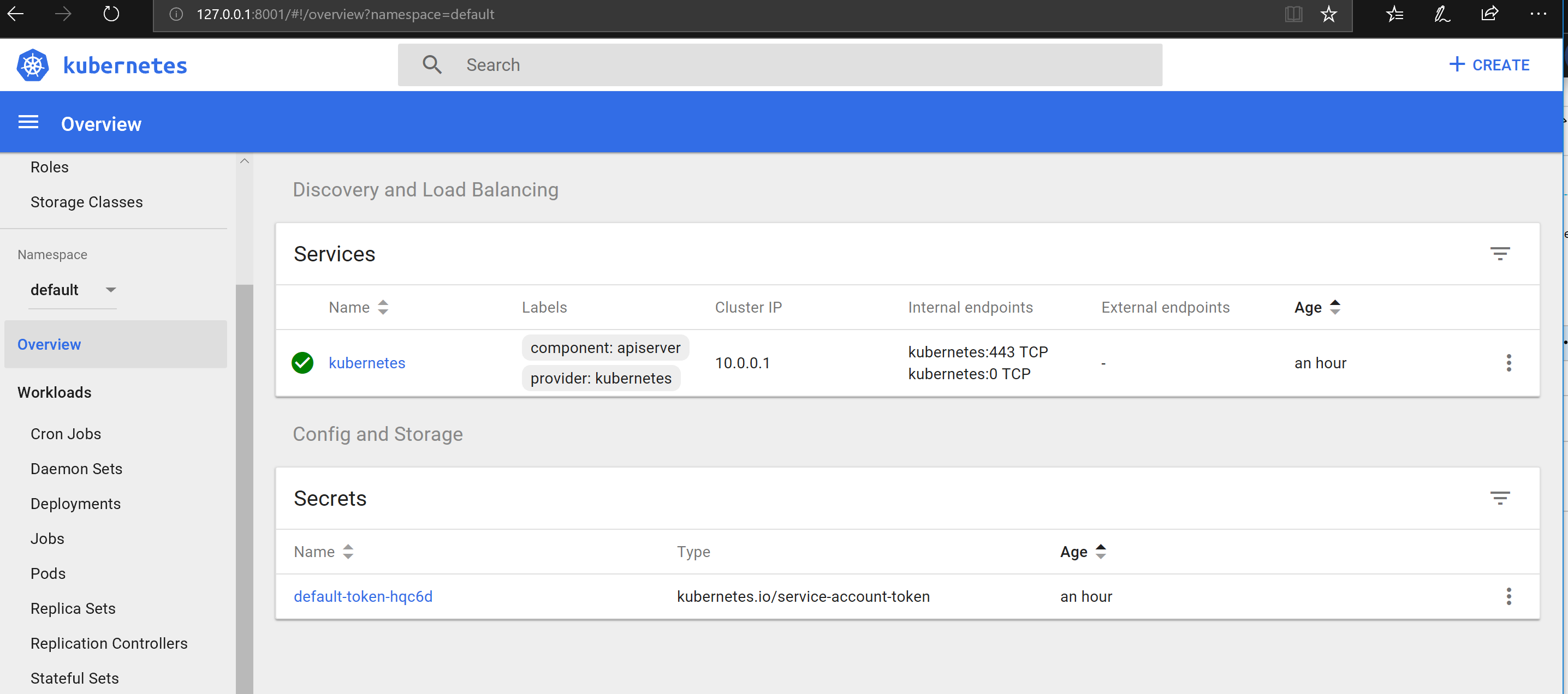

## Orchestrators: Kubernetes and Service Fabric

|

||||

|

||||

See at the [Wiki](https://github.com/dotnet-architecture/eShopOnContainers/wiki) the posts on setup/instructions about how to deploy to Kubernetes or Service Fabric in Azure (although you could also deploy to any other cloud or on-premises).

|

||||

|

||||

## Sending feedback and pull requests

|

||||

|

||||

As mentioned, we'd appreciate your feedback, improvements and ideas.

|

||||

You can create new issues at the issues section, do pull requests and/or send emails to **eshop_feedback@service.microsoft.com**

|

||||

|

||||

## Questions

|

||||

[QUESTION] Answer +1 if the solution is working for you (Through VS2017 or CLI environment):

|

||||

https://github.com/dotnet/eShopOnContainers/issues/107

|

||||

|

||||

@ -1,5 +1,3 @@
-pool:
-  vmImage: 'ubuntu-16.04'
 variables:
   registryEndpoint: eshop-registry
 trigger:
@ -17,34 +15,15 @@ trigger:
     exclude:
     - src/ApiGateways/Mobile.Bff.Shopping/aggregator/*
     - src/ApiGateways/Web.Bff.Shopping/aggregator/*
-steps:
-- task: DockerCompose@0
-  displayName: Compose build apigws
-  inputs:
-    dockerComposeCommand: 'build mobileshoppingapigw mobilemarketingapigw webshoppingapigw webmarketingapigw'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: DockerCompose@0
-  displayName: Compose push apigws
-  inputs:
-    dockerComposeCommand: 'push mobileshoppingapigw mobilemarketingapigw webshoppingapigw webmarketingapigw'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: CopyFiles@2
-  inputs:
-    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
-    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
-- task: PublishBuildArtifacts@1
-  inputs:
-    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
-    artifactName: helm
+jobs:
+- template: ../buildimages.yaml
+  parameters:
+    services: mobileshoppingapigw mobilemarketingapigw webshoppingapigw webmarketingapigw
+    registryEndpoint: $(registryEndpoint)
+    helmfrom: $(Build.SourcesDirectory)/k8s/helm
+    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
+- template: ../multiarch.yaml
+  parameters:
+    image: ocelotapigw
+    branch: $(Build.SourceBranchName)
+    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
-pool:
-  vmImage: 'ubuntu-16.04'
 variables:
   registryEndpoint: eshop-registry
 trigger:
@ -12,34 +10,15 @@ trigger:
     - src/BuildingBlocks/*
     - src/Services/Basket/*
     - k8s/helm/basket-api/*
-steps:
-- task: DockerCompose@0
-  displayName: Compose build basket
-  inputs:
-    dockerComposeCommand: 'build basket.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: DockerCompose@0
-  displayName: Compose push basket
-  inputs:
-    dockerComposeCommand: 'push basket.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: CopyFiles@2
-  inputs:
-    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
-    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
-- task: PublishBuildArtifacts@1
-  inputs:
-    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
-    artifactName: helm
+jobs:
+- template: ../buildimages.yaml
+  parameters:
+    services: basket.api
+    registryEndpoint: $(registryEndpoint)
+    helmfrom: $(Build.SourcesDirectory)/k8s/helm
+    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
+- template: ../multiarch.yaml
+  parameters:
+    image: basket.api
+    branch: $(Build.SourceBranchName)
+    registryEndpoint: $(registryEndpoint)

92

build/azure-devops/buildimages.yaml

Normal file

@ -0,0 +1,92 @@
+parameters:
+  services: ''
+  registryEndpoint: ''
+  helmfrom: ''
+  helmto: ''
+
+jobs:
+- job: BuildContainersForPR_Linux
+  condition: eq('${{ variables['Build.Reason'] }}', 'PullRequest')
+  pool:
+    vmImage: 'ubuntu-16.04'
+  steps:
+  - bash: docker-compose build ${{ parameters.services }}
+    displayName: Create multiarch manifest
+    env:
+      TAG: ${{ variables['Build.SourceBranchName'] }}
+- job: BuildContainersForPR_Windows
+  condition: eq('${{ variables['Build.Reason'] }}', 'PullRequest')
+  pool:
+    vmImage: 'windows-2019'
+  steps:
+  - bash: docker-compose build ${{ parameters.services }}
+    displayName: Create multiarch manifest
+    env:
+      TAG: ${{ variables['Build.SourceBranchName'] }}
+      PLATFORM: win
+      NODE_IMAGE: stefanscherer/node-windows:10
+- job: BuildLinux
+  condition: ne('${{ variables['Build.Reason'] }}', 'PullRequest')
+  pool:
+    vmImage: 'ubuntu-16.04'
+  steps:
+  - task: DockerCompose@0
+    displayName: Compose build ${{ parameters.services }}
+    inputs:
+      dockerComposeCommand: 'build ${{ parameters.services }}'
+      containerregistrytype: Container Registry
+      dockerRegistryEndpoint: ${{ parameters.registryEndpoint }}
+      dockerComposeFile: docker-compose.yml
+      qualifyImageNames: true
+      projectName: ""
+      dockerComposeFileArgs: |
+        TAG=${{ variables['Build.SourceBranchName'] }}
+  - task: DockerCompose@0
+    displayName: Compose push ${{ parameters.images }}
+    inputs:
+      dockerComposeCommand: 'push ${{ parameters.services }}'
+      containerregistrytype: Container Registry
+      dockerRegistryEndpoint: ${{ parameters.registryEndpoint }}
+      dockerComposeFile: docker-compose.yml
+      qualifyImageNames: true
+      projectName: ""
+      dockerComposeFileArgs: |
+        TAG=${{ variables['Build.SourceBranchName'] }}
+  - task: CopyFiles@2
+    inputs:
+      sourceFolder: ${{ parameters.helmfrom }}
+      targetFolder: ${{ parameters.helmto }}
+  - task: PublishBuildArtifacts@1
+    inputs:
+      pathtoPublish: ${{ parameters.helmto }}
+      artifactName: helm
+- job: BuildWindows
+  condition: ne('${{ variables['Build.Reason'] }}', 'PullRequest')
+  pool:
+    vmImage: 'windows-2019'
+  steps:
+  - task: DockerCompose@0
+    displayName: Compose build ${{ parameters.services }}
+    inputs:
+      dockerComposeCommand: 'build ${{ parameters.services }}'
+      containerregistrytype: Container Registry
+      dockerRegistryEndpoint: ${{ parameters.registryEndpoint }}
+      dockerComposeFile: docker-compose.yml
+      qualifyImageNames: true
+      projectName: ""
+      dockerComposeFileArgs: |
+        TAG=${{ variables['Build.SourceBranchName'] }}
+        PLATFORM=win
+        NODE_IMAGE=stefanscherer/node-windows:10
+  - task: DockerCompose@0
+    displayName: Compose push ${{ parameters.services }}
+    inputs:
+      dockerComposeCommand: 'push ${{ parameters.services }}'
+      containerregistrytype: Container Registry
+      dockerRegistryEndpoint: ${{ parameters.registryEndpoint }}
+      dockerComposeFile: docker-compose.yml
+      qualifyImageNames: true
+      projectName: ""
+      dockerComposeFileArgs: |
+        TAG=${{ variables['Build.SourceBranchName'] }}
+        PLATFORM=win

@ -1,5 +1,3 @@
-pool:
-  vmImage: 'ubuntu-16.04'
 variables:
   registryEndpoint: eshop-registry
 trigger:
@ -12,34 +10,15 @@ trigger:
     - src/BuildingBlocks/*
     - src/Services/Catalog/*
     - k8s/helm/catalog-api/*
-steps:
-- task: DockerCompose@0
-  displayName: Compose build catalog
-  inputs:
-    dockerComposeCommand: 'build catalog.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: DockerCompose@0
-  displayName: Compose push catalog
-  inputs:
-    dockerComposeCommand: 'push catalog.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: CopyFiles@2
-  inputs:
-    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
-    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
-- task: PublishBuildArtifacts@1
-  inputs:
-    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
-    artifactName: helm
+jobs:
+- template: ../buildimages.yaml
+  parameters:
+    services: catalog.api
+    registryEndpoint: $(registryEndpoint)
+    helmfrom: $(Build.SourcesDirectory)/k8s/helm
+    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
+- template: ../multiarch.yaml
+  parameters:
+    image: catalog.api
+    branch: $(Build.SourceBranchName)
+    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
-pool:
-  vmImage: 'ubuntu-16.04'
 variables:
   registryEndpoint: eshop-registry
 trigger:
@ -12,34 +10,15 @@ trigger:
     - src/BuildingBlocks/*
     - src/Services/Identity/*
     - k8s/helm/identity-api/*
-steps:
-- task: DockerCompose@0
-  displayName: Compose build identity
-  inputs:
-    dockerComposeCommand: 'build identity.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: DockerCompose@0
-  displayName: Compose push identity
-  inputs:
-    dockerComposeCommand: 'push identity.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: CopyFiles@2
-  inputs:
-    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
-    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
-- task: PublishBuildArtifacts@1
-  inputs:
-    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
-    artifactName: helm
+jobs:
+- template: ../buildimages.yaml
+  parameters:
+    services: identity.api
+    registryEndpoint: $(registryEndpoint)
+    helmfrom: $(Build.SourcesDirectory)/k8s/helm
+    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
+- template: ../multiarch.yaml
+  parameters:
+    image: identity.api
+    branch: $(Build.SourceBranchName)
+    registryEndpoint: $(registryEndpoint)

25

build/azure-devops/infrastructure/azure-pipelines.yml

Normal file

@ -0,0 +1,25 @@
+pool:
+  vmImage: 'ubuntu-16.04'
+variables:
+  registryEndpoint: eshop-registry
+trigger:
+  branches:
+    include:
+    - master
+    - dev
+  paths:
+    include:
+    - k8s/helm/basket-data/*
+    - k8s/helm/keystore-data/*
+    - k8s/helm/nosql-data/*
+    - k8s/helm/rabbitmq/*
+    - k8s/helm/sql-data/*
+steps:
+- task: CopyFiles@2
+  inputs:
+    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
+    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
+- task: PublishBuildArtifacts@1
+  inputs:
+    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
+    artifactName: helm

@ -1,5 +1,3 @@
-pool:
-  vmImage: 'ubuntu-16.04'
 variables:
   registryEndpoint: eshop-registry
 trigger:
@ -11,35 +9,16 @@ trigger:
     include:
     - src/BuildingBlocks/*
     - src/Services/Location/*
-    - k8s/helm/locations-api/*
-steps:
-- task: DockerCompose@0
-  displayName: Compose build locations
-  inputs:
-    dockerComposeCommand: 'build locations.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: DockerCompose@0
-  displayName: Compose push locations
-  inputs:
-    dockerComposeCommand: 'push locations.api'
-    containerregistrytype: Container Registry
-    dockerRegistryEndpoint: $(registryEndpoint)
-    dockerComposeFile: docker-compose.yml
-    qualifyImageNames: true
-    projectName: ""
-    dockerComposeFileArgs: |
-      TAG=$(Build.SourceBranchName)
-- task: CopyFiles@2
-  inputs:
-    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
-    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
-- task: PublishBuildArtifacts@1
-  inputs:
-    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
-    artifactName: helm
+    - k8s/helm/locations-api/*
+jobs:
+- template: ../buildimages.yaml
+  parameters:
+    services: locations.api
+    registryEndpoint: $(registryEndpoint)
+    helmfrom: $(Build.SourcesDirectory)/k8s/helm
+    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
+- template: ../multiarch.yaml
+  parameters:
+    image: locations.api
+    branch: $(Build.SourceBranchName)
+    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -11,35 +9,16 @@ trigger:
    include:
    - src/BuildingBlocks/*
    - src/Services/Marketing/*
    - k8s/helm/marketing-api/*
steps:
- task: DockerCompose@0
  displayName: Compose build marketing
  inputs:
    dockerComposeCommand: 'build marketing.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push marketing
  inputs:
    dockerComposeCommand: 'push marketing.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/marketing-api/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: marketing.api
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: marketing.api
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -10,35 +8,17 @@ trigger:
  paths:
    include:
    - src/ApiGateways/Mobile.Bff.Shopping/aggregator/*
    - k8s/helm/mobileshoppingagg/*
steps:
- task: DockerCompose@0
  displayName: Compose build mobileshoppingagg
  inputs:
    dockerComposeCommand: 'build mobileshoppingagg'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push mobileshoppingagg
  inputs:
    dockerComposeCommand: 'push mobileshoppingagg'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/mobileshoppingagg/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: mobileshoppingagg
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: mobileshoppingagg
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

30
build/azure-devops/multiarch.yaml
Normal file
@ -0,0 +1,30 @@
parameters:
  image: ''
  branch: ''
  registry: 'eshop'
  registryEndpoint: ''

jobs:
- job: manifest
  condition: and(succeeded(),ne('${{ variables['Build.Reason'] }}', 'PullRequest'))
  dependsOn:
  - BuildWindows
  - BuildLinux
  pool:
    vmImage: 'Ubuntu 16.04'
  steps:
  - task: Docker@1
    displayName: Docker Login
    inputs:
      command: login
      containerregistrytype: 'Container Registry'
      dockerRegistryEndpoint: ${{ parameters.registryEndpoint }}
  - bash: |
      mkdir -p ~/.docker
      sed '$ s/.$//' $DOCKER_CONFIG/config.json > ~/.docker/config.json
      echo ',"experimental": "enabled" }' >> ~/.docker/config.json
      docker --config ~/.docker manifest create ${{ parameters.registry }}/${{ parameters.image }}:${{ parameters.branch }} ${{ parameters.registry }}/${{ parameters.image }}:linux-${{ parameters.branch }} ${{ parameters.registry }}/${{ parameters.image }}:win-${{ parameters.branch }}
      docker --config ~/.docker manifest create ${{ parameters.registry }}/${{ parameters.image }}:latest ${{ parameters.registry }}/${{ parameters.image }}:linux-latest ${{ parameters.registry }}/${{ parameters.image }}:win-latest
      docker --config ~/.docker manifest push ${{ parameters.registry }}/${{ parameters.image }}:${{ parameters.branch }}
      docker --config ~/.docker manifest push ${{ parameters.registry }}/${{ parameters.image }}:latest
    displayName: Create multiarch manifest
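The bash step above turns on the Docker CLI's experimental features (needed for `docker manifest`) by editing config.json as text: `sed '$ s/.$//'` drops the final character of the last line, which is expected to be the closing brace, and `echo` then appends the `experimental` key together with a new closing brace. The sketch below replays that edit in isolation. The sample file content, the temporary directory, and the `enable_experimental` helper name are assumptions made for illustration and are not part of the commit.

```shell
# Illustrative sketch: replays the sed/echo edit from the pipeline step on a
# throwaway file. The helper name and the sample JSON are hypothetical.
enable_experimental() {
  # $1: source config.json, $2: destination config.json
  # Remove the last character of the last line (the closing brace).
  sed '$ s/.$//' "$1" > "$2"
  # Append the extra key and close the JSON object again.
  echo ',"experimental": "enabled" }' >> "$2"
}

workdir=$(mktemp -d)
printf '{"auths":{}}\n' > "$workdir/in.json"
enable_experimental "$workdir/in.json" "$workdir/out.json"
result=$(cat "$workdir/out.json")
rm -rf "$workdir"
printf '%s\n' "$result"
```

The edit relies on the closing brace being the very last character of the file. If config.json ended with an empty line, the `sed` expression would match nothing and the appended text would leave the file malformed.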

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -14,34 +12,15 @@ trigger:
    - k8s/helm/ordering-api/*
    - k8s/helm/ordering-backgroundtasks/*
    - k8s/helm/ordering-signalrhub/*
steps:
- task: DockerCompose@0
  displayName: Compose build ordering
  inputs:
    dockerComposeCommand: 'build ordering.api ordering.backgroundtasks ordering.signalrhub'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push ordering
  inputs:
    dockerComposeCommand: 'push ordering.api ordering.backgroundtasks ordering.signalrhub'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
jobs:
- template: ../buildimages.yaml
  parameters:
    services: ordering.api
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: ordering.api
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -12,34 +10,15 @@ trigger:
    - src/BuildingBlocks/*
    - src/Services/Payment/*
    - k8s/helm/payment-api/*
steps:
- task: DockerCompose@0
  displayName: Compose build payment
  inputs:
    dockerComposeCommand: 'build payment.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push payment
  inputs:
    dockerComposeCommand: 'push payment.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
jobs:
- template: ../buildimages.yaml
  parameters:
    services: payment.api
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: payment.api
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -10,35 +8,16 @@ trigger:
  paths:
    include:
    - src/ApiGateways/Web.Bff.Shopping/aggregator/*
    - k8s/helm/webshoppingagg/*
steps:
- task: DockerCompose@0
  displayName: Compose build webshoppingagg
  inputs:
    dockerComposeCommand: 'build webshoppingagg'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webshoppingagg
  inputs:
    dockerComposeCommand: 'push webshoppingagg'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/webshoppingagg/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webshoppingagg
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webshoppingagg
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -11,35 +9,16 @@ trigger:
    include:
    - src/BuildingBlocks/*
    - src/Services/Webhooks/*
    - k8s/helm/webhooks-api/*
steps:
- task: DockerCompose@0
  displayName: Compose build webhooks
  inputs:
    dockerComposeCommand: 'build webhooks.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webhooks
  inputs:
    dockerComposeCommand: 'push webhooks.api'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/webhooks-api/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webhooks.api
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webhooks.api
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -11,35 +9,16 @@ trigger:
    include:
    - src/BuildingBlocks/*
    - src/Web/WebhookClient/*
    - k8s/helm/webhooks-web/*
steps:
- task: DockerCompose@0
  displayName: Compose build webhooks.client
  inputs:
    dockerComposeCommand: 'build webhooks.client'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webhooks.client
  inputs:
    dockerComposeCommand: 'push webhooks.client'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/webhooks-web/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webhooks.client
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webhooks.client
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -11,35 +9,16 @@ trigger:
    include:
    - src/BuildingBlocks/*
    - src/Web/WebMVC/*
    - k8s/helm/webmvc/*
steps:
- task: DockerCompose@0
  displayName: Compose build webmvc
  inputs:
    dockerComposeCommand: 'build webmvc'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webmvc
  inputs:
    dockerComposeCommand: 'push webmvc'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/webmvc/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webmvc
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webmvc
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -12,34 +10,15 @@ trigger:
    - src/BuildingBlocks/*
    - src/Web/WebSPA/*
    - k8s/helm/webspa/*
steps:
- task: DockerCompose@0
  displayName: Compose build webspa
  inputs:
    dockerComposeCommand: 'build webspa'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webspa
  inputs:
    dockerComposeCommand: 'push webspa'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webspa
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webspa
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

@ -1,5 +1,3 @@
pool:
  vmImage: 'ubuntu-16.04'
variables:
  registryEndpoint: eshop-registry
trigger:
@ -11,35 +9,16 @@ trigger:
    include:
    - src/BuildingBlocks/*
    - src/Web/WebStatus/*
    - k8s/helm/webstatus/*
steps:
- task: DockerCompose@0
  displayName: Compose build webstatus
  inputs:
    dockerComposeCommand: 'build webstatus'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: DockerCompose@0
  displayName: Compose push webstatus
  inputs:
    dockerComposeCommand: 'push webstatus'
    containerregistrytype: Container Registry
    dockerRegistryEndpoint: $(registryEndpoint)
    dockerComposeFile: docker-compose.yml
    qualifyImageNames: true
    projectName: ""
    dockerComposeFileArgs: |
      TAG=$(Build.SourceBranchName)
- task: CopyFiles@2
  inputs:
    sourceFolder: $(Build.SourcesDirectory)/k8s/helm
    targetFolder: $(Build.ArtifactStagingDirectory)/k8s/helm
- task: PublishBuildArtifacts@1
  inputs:
    pathtoPublish: $(Build.ArtifactStagingDirectory)/k8s/helm
    artifactName: helm
    - k8s/helm/webstatus/*
jobs:
- template: ../buildimages.yaml
  parameters:
    services: webstatus
    registryEndpoint: $(registryEndpoint)
    helmfrom: $(Build.SourcesDirectory)/k8s/helm
    helmto: $(Build.ArtifactStagingDirectory)/k8s/helm
- template: ../multiarch.yaml
  parameters:
    image: webstatus
    branch: $(Build.SourceBranchName)
    registryEndpoint: $(registryEndpoint)

26
build/multiarch-manifests/create-manifests.ps1
Normal file
@ -0,0 +1,26 @@
Param(
    [parameter(Mandatory=$true)][string]$registry
)

if ([String]::IsNullOrEmpty($registry)) {
    Write-Host "Registry must be set to docker registry to use" -ForegroundColor Red
    exit 1
}

Write-Host "This script creates the local manifests, for pushing the multi-arch manifests" -ForegroundColor Yellow
Write-Host "Tags used are linux-master, win-master, linux-dev, win-dev, linux-latest, win-latest" -ForegroundColor Yellow
Write-Host "Multiarch images tags will be master, dev, latest" -ForegroundColor Yellow


$services = "identity.api", "basket.api", "catalog.api", "ordering.api", "ordering.backgroundtasks", "marketing.api", "payment.api", "locations.api", "webhooks.api", "ocelotapigw", "mobileshoppingagg", "webshoppingagg", "ordering.signalrhub", "webstatus", "webspa", "webmvc", "webhooks.client"

foreach ($svc in $services) {
    Write-Host "Creating manifest for $svc and tags :latest, :master, and :dev"
    docker manifest create $registry/${svc}:master $registry/${svc}:linux-master $registry/${svc}:win-master
    docker manifest create $registry/${svc}:dev $registry/${svc}:linux-dev $registry/${svc}:win-dev
    docker manifest create $registry/${svc}:latest $registry/${svc}:linux-latest $registry/${svc}:win-latest
    Write-Host "Pushing manifest for $svc and tags :latest, :master, and :dev"
    docker manifest push $registry/${svc}:latest
    docker manifest push $registry/${svc}:dev
    docker manifest push $registry/${svc}:master
}
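The loop above follows one naming scheme: each multi-arch tag (`master`, `dev`, `latest`) is a manifest list that points at the `linux-<tag>` and `win-<tag>` images of the same service. As a rough dry-run sketch of that scheme, the shell function below only prints the commands rather than running them. The `manifest_commands` name, the `eshop` registry value, and the two-service list are illustrative assumptions; unlike the script, the sketch emits the create and push for each tag together.

```shell
# Dry-run sketch: prints the docker commands instead of executing them.
# manifest_commands is a hypothetical helper, not part of the commit.
manifest_commands() {
  registry=$1
  svc=$2
  tag=$3
  # The manifest list for <tag> references the per-OS images of the same tag.
  echo "docker manifest create $registry/$svc:$tag $registry/$svc:linux-$tag $registry/$svc:win-$tag"
  echo "docker manifest push $registry/$svc:$tag"
}

# Example registry and service names, chosen only for the demonstration.
for svc in basket.api catalog.api; do
  for tag in master dev latest; do
    manifest_commands eshop "$svc" "$tag"
  done
done
```

Printing the commands first makes it easy to check the tag names before anything is pushed to a registry.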

2
cli-windows/set-dockernat-networkategory-to-private.ps1
Normal file
@ -0,0 +1,2 @@
#Requires -RunAsAdministrator
Get-NetConnectionProfile | Where-Object { $_.InterfaceAlias -match "(DockerNAT)" } | ForEach-Object { Set-NetConnectionProfile -InterfaceIndex $_.InterfaceIndex -NetworkCategory Private }

37
docker-compose.elk.yml
Normal file
@ -0,0 +1,37 @@
version: '3.4'

services:

  elasticsearch:
    build:
      context: elk/elasticsearch/
    volumes:
      - ./elk/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      ES_JAVA_OPTS: "-Xmx256m -Xms256m"

  logstash:
    build:
      context: elk/logstash/
    volumes:
      - ./elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro
      - ./elk/logstash/pipeline:/usr/share/logstash/pipeline:ro
    ports:
      - "8080:8080"
    environment:
      LS_JAVA_OPTS: "-Xmx256m -Xms256m"
    depends_on:
      - elasticsearch

  kibana:
    build:
      context: elk/kibana/
    volumes:
      - ./elk/kibana/config/:/usr/share/kibana/config:ro
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch

@ -110,6 +110,8 @@ services:
      - ApplicationInsights__InstrumentationKey=${INSTRUMENTATION_KEY}
      - OrchestratorType=${ORCHESTRATOR_TYPE}
      - UseLoadTest=${USE_LOADTEST:-False}
      - Serilog__MinimumLevel__Override__Microsoft.eShopOnContainers.BuildingBlocks.EventBusRabbitMQ=Verbose
      - Serilog__MinimumLevel__Override__Ordering.API=Verbose
    ports:
      - "5102:80"   # Important: In a production environment you should remove the external port (5102) kept here for microservice debugging purposes.
                    # The API Gateway redirects and accesses through the internal port (80).
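The `Serilog__MinimumLevel__Override__...` variables in this hunk rely on the .NET configuration convention that a double underscore in an environment variable name stands for the `:` section separator, so each variable lands under the `Serilog:MinimumLevel:Override` section with the namespace as the final key. A small sketch of that name mapping, assuming a hypothetical `env_to_config_key` helper written only for illustration:

```shell
# Illustrative only: mimics how a double underscore in an environment
# variable name maps to the ':' separator of a .NET configuration key.
# env_to_config_key is a hypothetical helper, not part of the commit.
env_to_config_key() {
  printf '%s\n' "$1" | sed 's/__/:/g'
}

env_to_config_key 'Serilog__MinimumLevel__Override__Ordering.API'
```

The dots inside the namespace are left untouched; only the double underscores act as separators.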

@ -130,6 +132,7 @@ services:
      - ApplicationInsights__InstrumentationKey=${INSTRUMENTATION_KEY}
      - OrchestratorType=${ORCHESTRATOR_TYPE}
      - UseLoadTest=${USE_LOADTEST:-False}
      - Serilog__MinimumLevel__Override__Microsoft.eShopOnContainers.BuildingBlocks.EventBusRabbitMQ=Verbose
    ports:
      - "5111:80"