Review wiki changes

@@ -1,15 +1,18 @@

# API Gateways

eShopOnContainers uses four API Gateways that implement the [Backends for Frontends (BFF) pattern](https://samnewman.io/patterns/architectural/bff/).

The overall architecture is shown in the following diagram:

The image above shows the architecture when running eShopOnContainers on Kubernetes with Service Mesh enabled. If Service Mesh is disabled, none of the "Linkerd" containers exist. If running outside Kubernetes, there's no "Ingress controller" and you access the API Gateways directly.

In this architecture, the four blue boxes in the column labelled "eShop Ingress" are the four BFFs.

Currently they are implemented using [Envoy](https://www.envoyproxy.io/). Each BFF provides a single endpoint for its clients and then forwards each call to the specific microservice or to the custom aggregator.

It's important to highlight that:

- Communication between the BFFs and the microservices and aggregators uses HTTP/REST.
- Communication between the aggregators and the microservices uses gRPC.

This could change in the future by using gRPC from the BFFs to the microservices and aggregators, while maintaining an HTTP/REST façade from the BFFs to the clients.

@@ -1,3 +1,13 @@

> **CONTENT**

- [Overview](#overview)
- [EventBus](#eventbus)
- [gRPC](#grpc)
- [API Gateways](#api-gateways)
- [Internal architectural patterns](#internal-architectural-patterns)
- [Database servers](#database-servers)
- [More on-line details and guidance](#more-on-line-details-and-guidance)

## Overview

This reference application is cross-platform on both the server and client side. Thanks to .NET Core services, it can run on Linux or Windows containers depending on your Docker host. It also has a Xamarin mobile app that supports Android, iOS and Windows/UWP, as well as ASP.NET Core Web MVC and SPA apps.

@@ -16,23 +26,23 @@ For a production-grade solutions you should use a more robust implementation bas

## gRPC

Most communications between microservices are decoupled using the EventBus and the "pub/sub" pattern.

However, communication between the custom aggregators and the internal microservices is currently implemented with gRPC instead of HTTP/JSON. gRPC is an RPC-based protocol with great performance and low bandwidth usage, making it the best candidate for internal microservice communication.

More information about gRPC and eShopOnContainers can be found [in the gRPC article in this wiki](./gRPC.md).

## API Gateways

The architecture also includes an implementation of the API Gateway and [Backends for Frontends (BFF)](https://samnewman.io/patterns/architectural/bff/) patterns to publish simplified APIs and include additional security measures for hiding/securing the internal microservices from the client apps or outside consumers.

These API Gateways are implemented using [Envoy](https://www.envoyproxy.io/), an open-source, high-performance, production-ready proxy and API Gateway. Currently these API Gateways only forward requests to internal microservices and custom aggregators, giving clients the experience of a single base URL. Features that could be implemented in the future include:

- Automatic translation between gRPC and HTTP/REST.
- Authentication and authorization management.
- Cache support.

If you need additional functionality and a much richer set of features suitable for commercial APIs, you can also add a full API Gateway product like [Azure API Management](https://azure.microsoft.com/services/api-management/) on top of these API Gateways.

@@ -43,9 +53,7 @@ Currently two aggregators exists:

1. Mobile Shopping: Aggregator for shopping operations called by the Xamarin app.
2. Web Shopping: Aggregator for shopping operations called by web clients (MVC & SPA).

For more information about the relationship between API Gateways, aggregators, clients and microservices check the

> **Note:** Previous versions of eShopOnContainers used [Ocelot](https://github.com/ThreeMammals/Ocelot) instead of Envoy. Ocelot is a great open-source .NET Core project for creating an API Gateway. Ocelot supports a wide set of features, and it's a serious candidate for any .NET Core based project. However, its lack of gRPC support was the main reason for replacing Ocelot with Envoy in eShopOnContainers.

## Internal architectural patterns

BFF-implementation.md

@@ -0,0 +1,27 @@

The current implementation of the [Backends for Frontends (BFF) pattern](https://samnewman.io/patterns/architectural/bff/) is shown in the following diagram:

**Note:** This diagram only shows one BFF. Each client type (web and mobile) has its own BFF.

Each BFF is composed of two containers: an Envoy proxy and a custom aggregator. (The marketing BFF doesn't have a custom aggregator because it doesn't have any complex logic.)

## Envoy

An Envoy proxy acts as an ingress for the BFF and **provides a single URL** for the client. All client calls go through the Envoy proxy. Then, based on a set of routing rules, the Envoy proxy can:

1. Forward the call to the custom aggregator.
2. Forward the call directly to an internal microservice.
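
As a rough illustration of such routing rules, the following Envoy (v3 API) static configuration exposes one listener as the BFF's single URL. It sends requests whose path starts with a catalog prefix straight to a microservice and forwards everything else to the custom aggregator. This is a sketch under assumptions, not the configuration shipped with eShopOnContainers: the `/c/` prefix, the cluster names (`catalog`, `webshoppingagg`) and the host names (`catalog-api`, `webshoppingagg`) are all hypothetical.

```yaml
# Hypothetical Envoy v3 config for one BFF: one listener (the single URL) with
# prefix-based routes to either an internal microservice or the custom aggregator.
static_resources:
  listeners:
    - name: bff_listener
      address:
        socket_address: { address: 0.0.0.0, port_value: 80 }
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                stat_prefix: bff_ingress
                route_config:
                  name: bff_routes
                  virtual_hosts:
                    - name: bff
                      domains: ["*"]
                      routes:
                        # Simple call: replace the "/c/" prefix with "/" and forward straight to the microservice.
                        - match: { prefix: "/c/" }
                          route: { cluster: catalog, prefix_rewrite: "/" }
                        # Any other call: forward to the custom aggregator (catch-all, so it goes last).
                        - match: { prefix: "/" }
                          route: { cluster: webshoppingagg }
                http_filters:
                  - name: envoy.filters.http.router
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
  clusters:
    - name: catalog
      connect_timeout: 0.25s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: catalog
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address: { address: catalog-api, port_value: 80 }
    - name: webshoppingagg
      connect_timeout: 0.25s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: webshoppingagg
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address: { address: webshoppingagg, port_value: 80 }
```

Envoy evaluates the routes of a virtual host in order and uses the first match. That's why the catch-all `/` route that goes to the aggregator is listed last.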

## Custom aggregator

The custom aggregator is another container. It exposes an HTTP/JSON API with complex methods that involve data from several internal microservices. Each method of the custom aggregator calls one (usually more than one) internal microservice, aggregates the results (applying custom logic) and returns the data to the client.

All calls from the aggregator to microservices are performed using gRPC (dashed lines in the diagram).

## Client Application

A client application calls the BFF only through the single URL exposed by the Envoy proxy. Based on the request data, the request is then forwarded either to an internal microservice (single CRUD calls) or to the custom aggregator (complex logic calls). This routing is transparent to the client.

When the call is forwarded directly from Envoy to an internal microservice, it's performed using HTTP/JSON. This means that, right now, internal microservices expose a mix of methods: some in gRPC (called by aggregators) and some in HTTP/JSON (called by Envoy). This is subject to change in the future: all microservice methods could be in gRPC, and Envoy could automatically translate between gRPC and HTTP/JSON if needed.

BFFs.md

@@ -1,31 +0,0 @@

@@ -5,6 +5,8 @@

- [Additional pre-requisites](#additional-pre-requisites)
- [Install Helm](#install-helm)
- [Install eShopOnContainers using Helm](#install-eshoponcontainers-using-helm)
- [Allow large headers (needed for login to work)](#allow-large-headers-needed-for-login-to-work)
- [Using Linkerd as Service Mesh (Advanced Scenario)](#using-linkerd-as-service-mesh-advanced-scenario)
- [Customizing the deployment](#customizing-the-deployment)
- [Using your own images](#using-your-own-images)
- [Using specific DNS](#using-specific-dns)

@ -26,21 +28,21 @@ The following steps are using the Azure portal to create the AKS cluster:

|

||||

|

||||

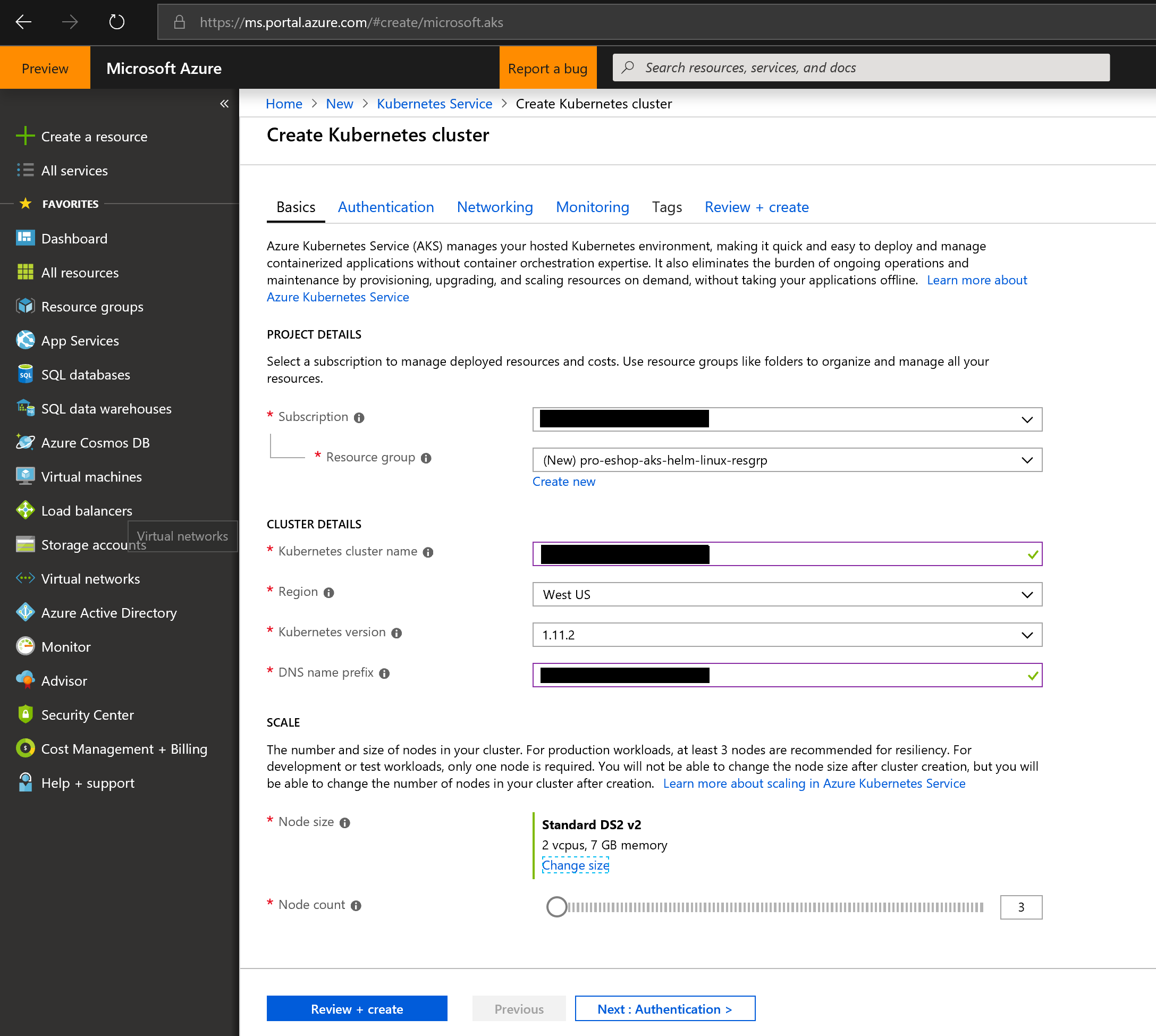

- Start the process by providing the general data, like in the following screenshot:

|

||||

|

||||

|

||||

/create-kubernetes-cluster-basics.png)

|

||||

|

||||

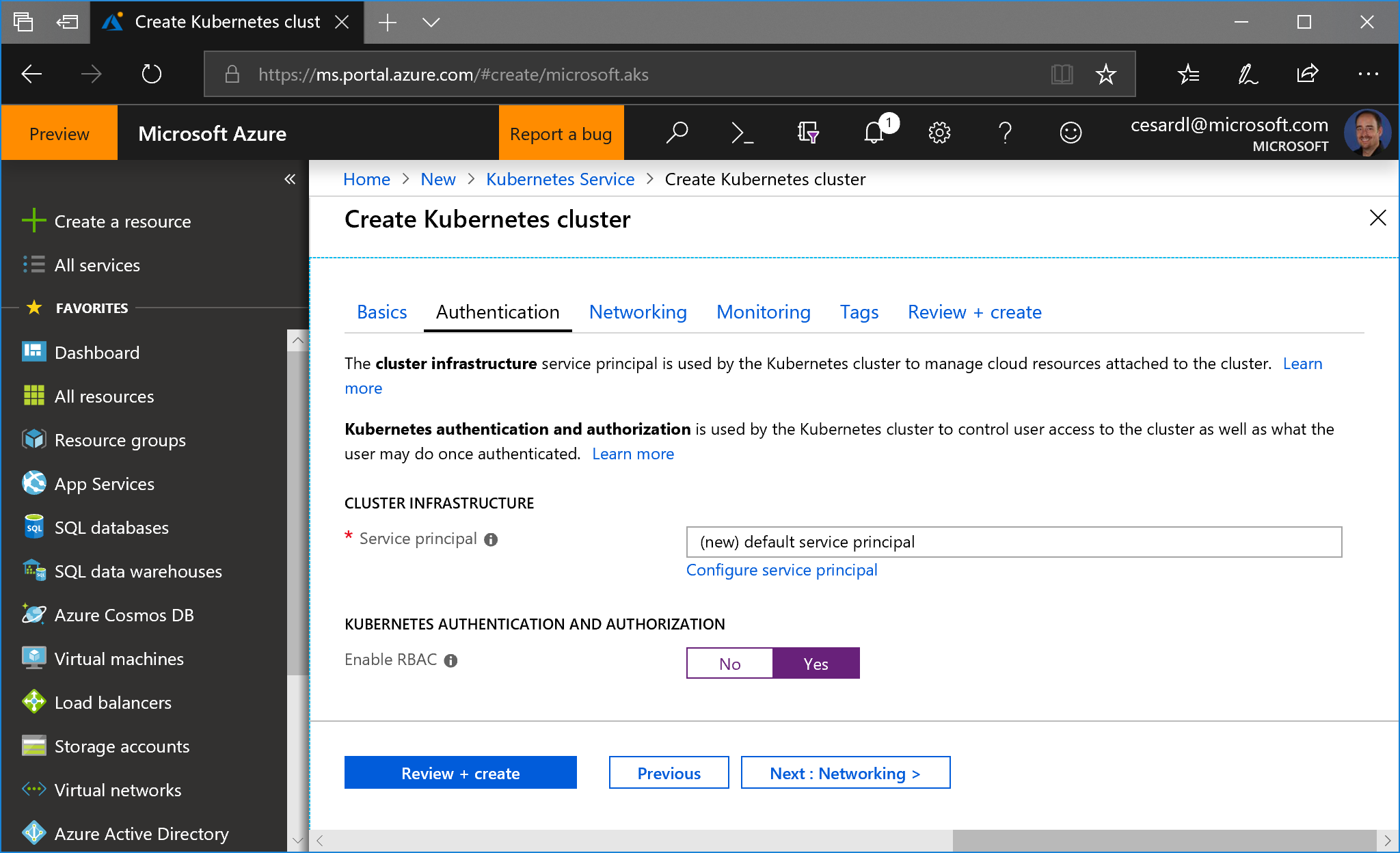

- Then, very important, in the next step, enable RBAC:

|

||||

|

||||

|

||||

/create-kubernetes-cluster-authentication.png)

|

||||

|

||||

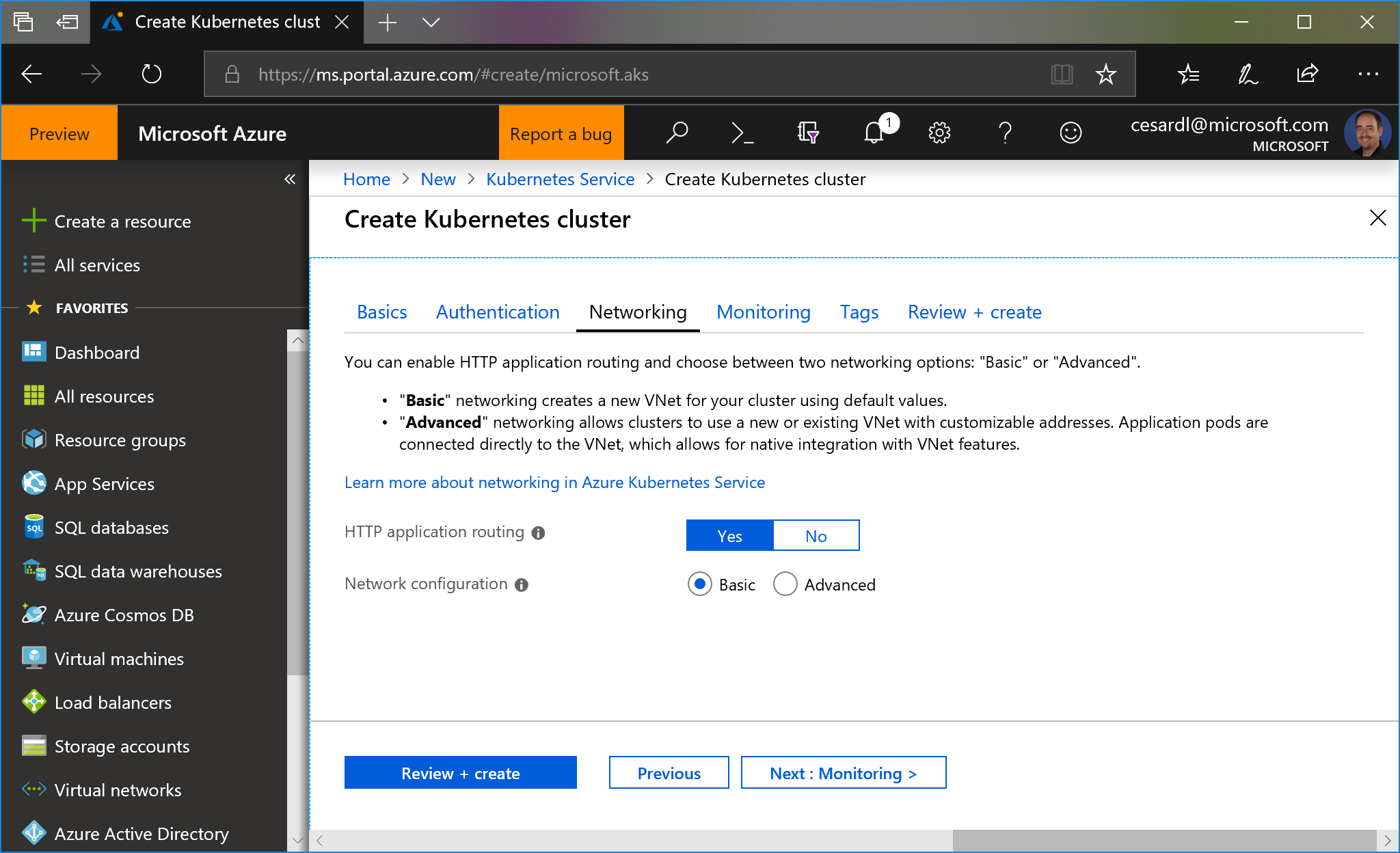

- **Enable http routing**. Make sure to check the checkbox "Http application routing" on "Networking" settings. For more info, read the [documentation](https://docs.microsoft.com/en-us/azure/aks/http-application-routing)

|

||||

|

||||

You can use **basic network** settings since for a test you don't need integration into any existing VNET.

|

||||

|

||||

|

||||

/create-kubernetes-cluster-networking.png)

|

||||

|

||||

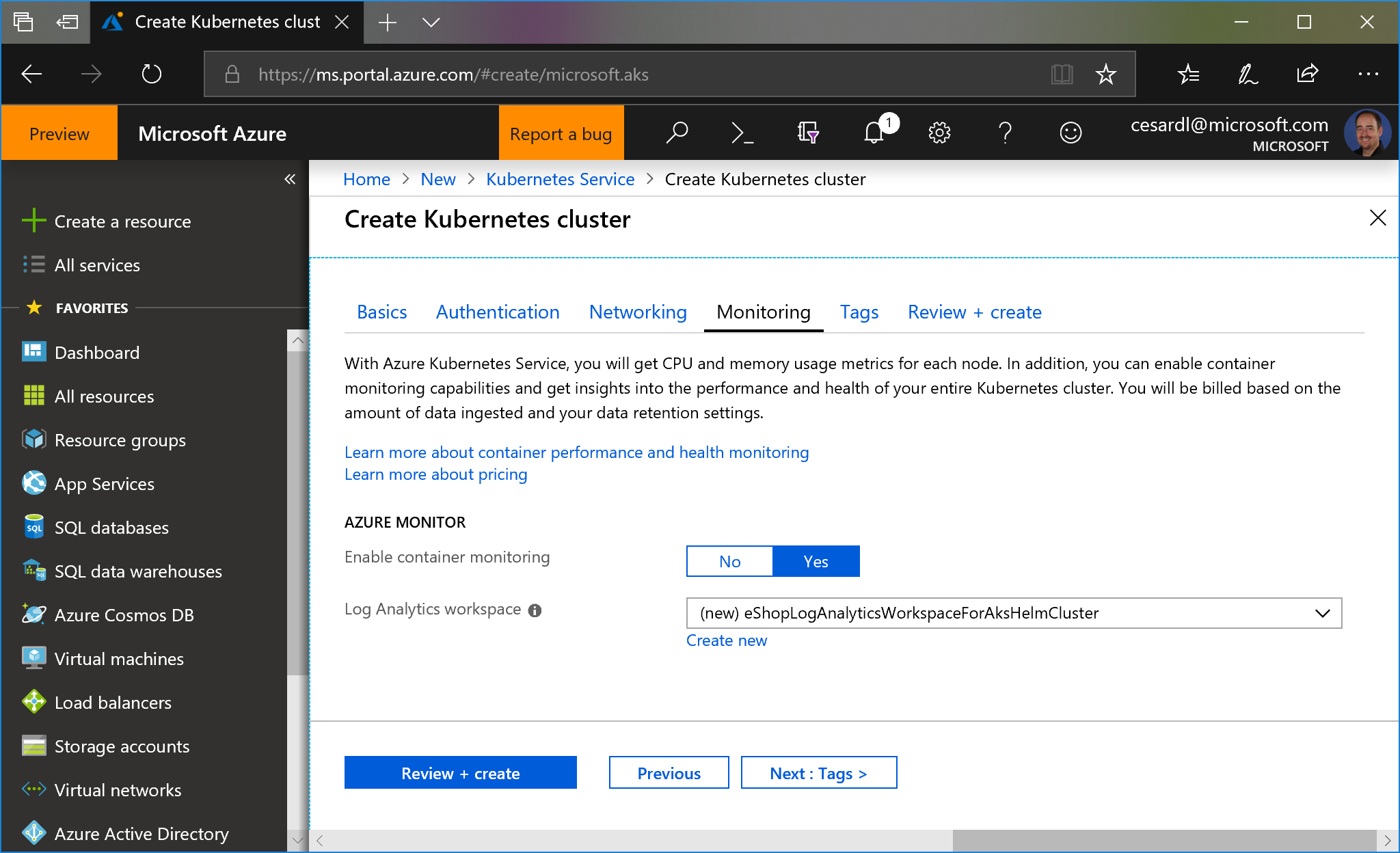

- You can also enable monitoring:

|

||||

|

||||

|

||||

/create-kubernetes-cluster-monitoring.png)

|

||||

|

||||

- Finally, create the cluster. It'll take a few minutes for it to be ready.

|

||||

|

||||

@ -49,19 +51,19 @@ The following steps are using the Azure portal to create the AKS cluster:

|

||||

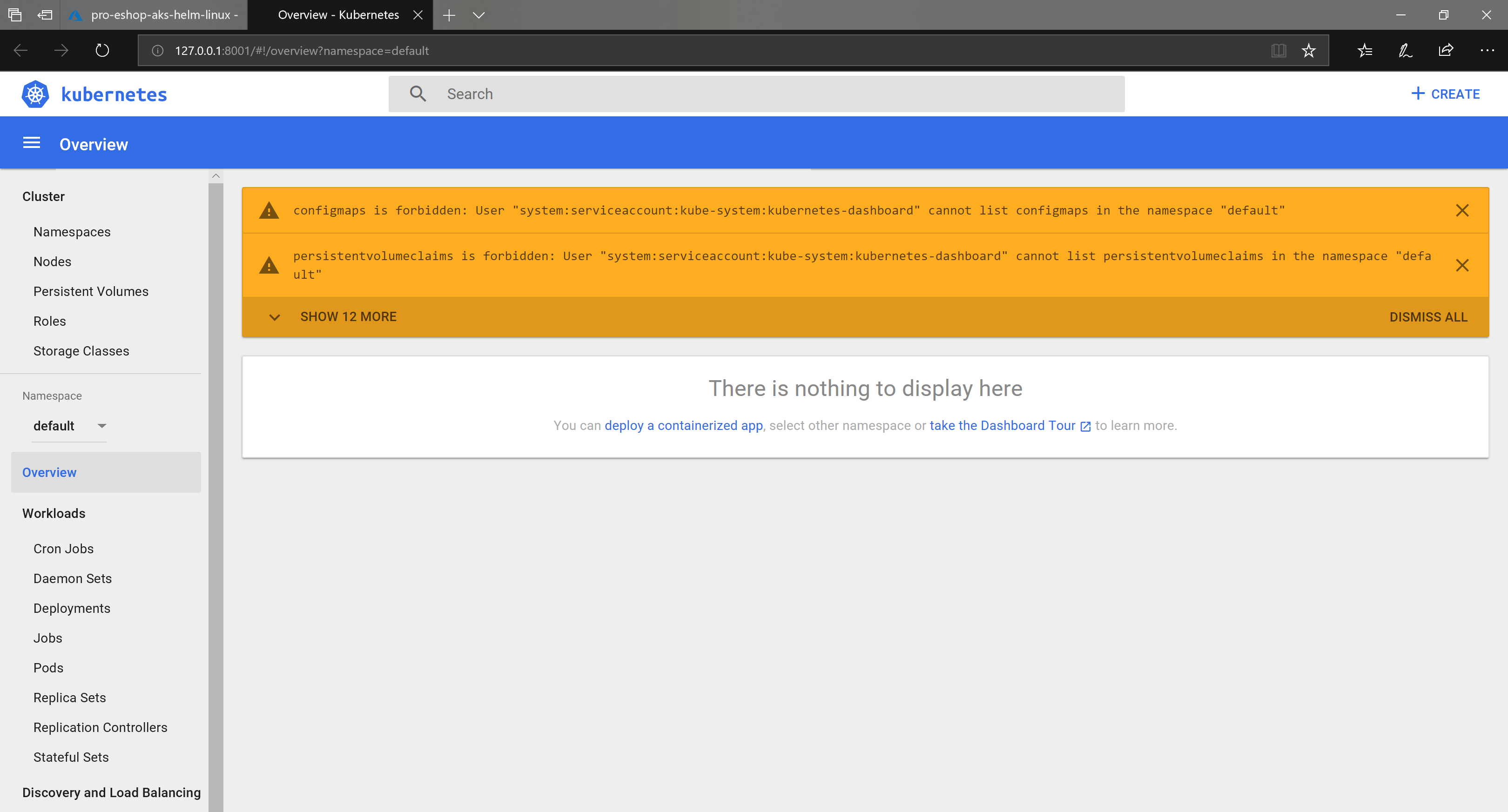

In order NOT to get errors in the Kubernetes dashboard, you'll need to set the following service-account steps.

|

||||

|

||||

Here you can see the errors you might see:

|

||||

|

||||

/rbac-kubernetes-dashboard.png)

|

||||

|

||||

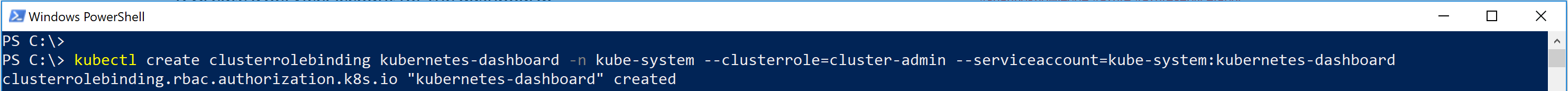

- Because the cluster is using RBAC, you need to grant needed rights to the Service Account `kubernetes-dashboard` with this kubectl command:

|

||||

|

||||

`kubectl create clusterrolebinding kubernetes-dashboard -n kube-system --clusterrole=cluster-admin --serviceaccount=kube-system:kubernetes-dashboard`

|

||||

|

||||

|

||||

/command-to-kubernetes-dashboard.png)

|

||||

|

||||
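
For reference, the `kubectl create clusterrolebinding` command above should be roughly equivalent to applying the following manifest (for example with `kubectl apply -f <file>`). It binds the built-in `cluster-admin` ClusterRole to the `kubernetes-dashboard` Service Account in the `kube-system` namespace. The declarative form is only a sketch for readers who prefer keeping such settings in source control.

```yaml
# Declarative equivalent of:
# kubectl create clusterrolebinding kubernetes-dashboard --clusterrole=cluster-admin --serviceaccount=kube-system:kubernetes-dashboard
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard   # cluster-scoped resource, so no namespace here
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system     # the Service Account itself lives in kube-system
```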

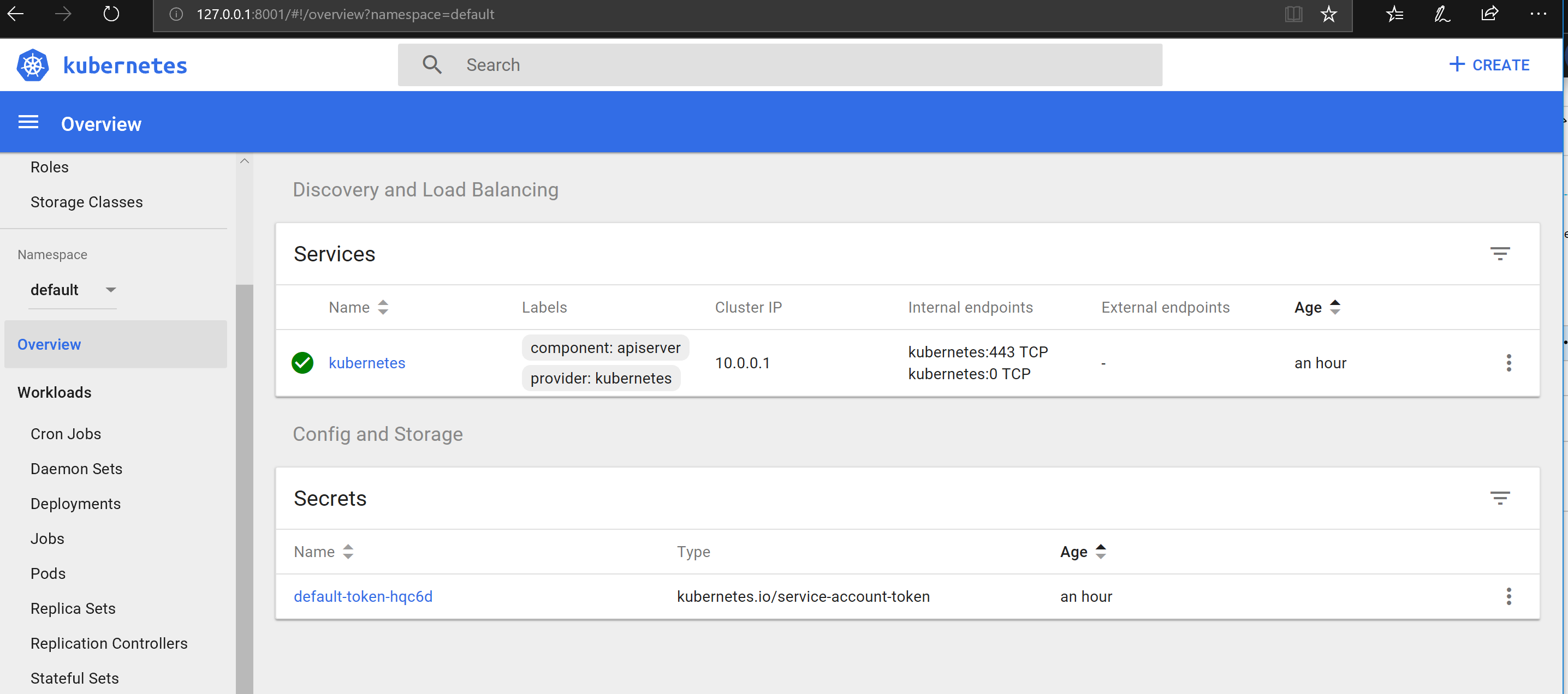

Now, just run the Azure CLI command to browse the Kubernetes Dashboard:

|

||||

|

||||

`az aks browse --resource-group pro-eshop-aks-helm-linux-resgrp --name pro-eshop-aks-helm-linux`

|

||||

|

||||

|

||||

/kubernetes-dashboard.png)

|

||||

|

||||

## Additional pre-requisites

|

||||

|

||||

@@ -73,25 +75,25 @@ You need to have helm installed on your machine, and Tiller must be installed on

**Note**: If your AKS cluster isn't RBAC-enabled (the default option in the portal), you may receive the following error when running a helm command:

```console
Error: Get http://localhost:8080/api/v1/namespaces/kube-system/configmaps?labelSelector=OWNER%!D(MISSING)TILLER: dial tcp [::1]:8080: connect: connection refused
```

If so, type:

```powershell
kubectl --namespace=kube-system edit deployment/tiller-deploy
```

Your default text editor will open with the YAML definition of the Tiller deployment. Search for:

```yaml
automountServiceAccountToken: false
```

And change it to:

```yaml
automountServiceAccountToken: true
```
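
The field you change lives in the pod template of the `tiller-deploy` Deployment. The trimmed sketch below shows where it sits. Everything else in the real manifest is omitted, and the `apiVersion` may differ depending on your Helm version, so treat it as orientation rather than something to apply. With the token mounted, Tiller can most likely use its in-cluster credentials instead of falling back to `localhost:8080`, which is the address the error above shows.

```yaml
# Trimmed view of the tiller-deploy Deployment (all other fields omitted).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tiller-deploy
  namespace: kube-system
spec:
  template:
    spec:
      # Mount the service account token into the Tiller pod so it can
      # authenticate against the Kubernetes API server from inside the cluster.
      automountServiceAccountToken: true
```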

@@ -99,9 +101,9 @@ Save the file and close the editor. This should reapply the deployment in the cl

## Install eShopOnContainers using Helm

All steps need to be performed in the `/k8s/helm` folder. The easiest way is to use the `deploy-all.ps1` script from a PowerShell window:

```powershell
.\deploy-all.ps1 -externalDns aks -aksName eshoptest -aksRg eshoptest -imageTag dev -useMesh $false
```

@@ -109,7 +111,7 @@ This will install all the [eShopOnContainers public images](https://hub.docker.c

Once the script is run, you should see the following output when using `kubectl get deployment`:

```console
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
eshop-apigwmm   1/1     1            1           29d
eshop-apigwms   1/1     1            1           29d
```

@@ -140,7 +142,7 @@ eshop-webstatus 1/1 1 1 30d

Every public service is exposed through its own ingress resource, as you can see by running `kubectl get ing`:

```console
eshop-apigwmm   eshop.<your-guid>.<region>.aksapp.io   <public-ip>   80   4d
eshop-apigwms   eshop.<your-guid>.<region>.aksapp.io   <public-ip>   80   4d
eshop-apigwwm   eshop.<your-guid>.<region>.aksapp.io   <public-ip>   80   4d
```

@@ -159,15 +161,15 @@ Ingresses are automatically configured to use the public DNS of the AKS provided

One more step is needed: you need to configure the NGINX ingress controller provided by AKS to allow larger headers. This is because the headers sent by Identity Server exceed the default size limit. Fortunately this is easy to do. From the `/k8s/helm` folder, run:

```powershell
kubectl apply -f aks-httpaddon-cfg.yaml
```
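
The contents of `aks-httpaddon-cfg.yaml` aren't shown on this page. As a sketch of the kind of change involved, the ConfigMap below assumes that the HTTP application routing add-on reads its NGINX settings from a ConfigMap named `addon-http-application-routing-nginx-configuration` in `kube-system`. It sets two standard NGINX ingress controller options that raise header buffer sizes. The ConfigMap name and the values are assumptions, so check them against the file in the repo.

```yaml
# Hypothetical sketch: raise NGINX header/buffer limits for the AKS HTTP application routing add-on.
apiVersion: v1
kind: ConfigMap
metadata:
  name: addon-http-application-routing-nginx-configuration  # assumed name
  namespace: kube-system
data:
  # Size of the buffer for the first part of the upstream response, which includes
  # the response headers (for example, large cookies set by Identity Server).
  proxy-buffer-size: "128k"
  # Number and size of the buffers used to read large client request headers.
  large-client-header-buffers: "4 16k"
```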

Then restart the pod that runs the NGINX controller. Its name is `addon-http-application-routing-nginx-ingress-controller-<something>` and it runs in the `kube-system` namespace. Run `kubectl get pods -n kube-system` to find it, then delete it with `kubectl delete pod <pod-name> -n kube-system`. Kubernetes then recreates the pod, which picks up the new settings.

**Note:** If running in a bash shell you can type:

```bash
kubectl delete pod $(kubectl get pod -l app=addon-http-application-routing-nginx-ingress -n kube-system -o jsonpath="{.items[0].metadata.name}") -n kube-system
```

@@ -175,21 +177,23 @@ You can view the MVC client at `http://[dns]/webmvc` and the SPA at the `http://

## Using Linkerd as Service Mesh (Advanced Scenario)

You can install eShopOnContainers ready to run with the [Linkerd](https://linkerd.io/) [Service Mesh](./Resiliency-and-Service-Mesh.md). To use Linkerd, complete the following steps:

1. Install Linkerd on your cluster. The process is described in the [Linkerd installation documentation](https://linkerd.io/2/getting-started/#step-0-setup). Steps 0 through 3 need to be completed.
2. Then install eShopOnContainers using the procedure described above, but with this command:

```powershell
.\deploy-all.ps1 -externalDns aks -aksName eshoptest -aksRg eshoptest -imageTag dev -useMesh $true
```

Once eShopOnContainers is installed, you can check that all non-infrastructure pods have two containers.
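
The second container in each pod is Linkerd's `linkerd-proxy` sidecar. Linkerd's proxy injector adds it to pods whose template (or namespace) carries the `linkerd.io/inject: enabled` annotation. The Deployment below is a hypothetical sketch of such a template. The names and the image are illustrative, and the idea that `-useMesh $true` enables injection this way is an assumption, not a copy of the eShopOnContainers Helm charts.

```yaml
# Hypothetical Deployment: the annotation on the pod template asks Linkerd's
# proxy injector to add a linkerd-proxy sidecar to every pod created from it.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webmvc                  # hypothetical name
spec:
  selector:
    matchLabels:
      app: webmvc
  template:
    metadata:
      labels:
        app: webmvc
      annotations:
        linkerd.io/inject: enabled
    spec:
      containers:
        - name: webmvc
          image: eshop/webmvc:dev   # hypothetical image reference
```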

Now you can use the `linkerd dashboard` command to show the mesh and monitor all the connections between eShopOnContainers pods.

The mesh monitors all HTTP connections (including gRPC), but doesn't monitor RabbitMQ or any other connections (SQL, Mongo, ...).

For more information, read the [Resiliency and Service Mesh](./Resiliency-and-Service-Mesh.md) page in the wiki.

## Customizing the deployment

@@ -64,11 +64,15 @@ Then install Helm from the package manager:

```powershell
choco install kubernetes-helm
```

```powershell
Get-FileHash -Path .\helm-v2.16.1-windows-amd64.zip -Algorithm sha256
```

### Install Helm server (Tiller)

To install Tiller:

- Go to the **deploy/k8s** folder in your local copy of the eShopOnContainers repo.
- Create the Tiller service account by running:

@@ -97,7 +101,7 @@ To install the NGINX Ingress controller, run the following commands:

## Install eShopOnContainers using Helm

- Go to the **deploy/k8s/helm** folder in your local copy of the eShopOnContainers repo.

At this point you have two options for installing eShopOnContainers:

@@ -106,13 +110,13 @@ At this point you have two options for installing eShopOnContainers:

### Deploy your local images

The first step to deploy your local images is to build them. You can do this by running the usual command from the CLI in the `src` folder of your local repo:

```powershell
docker-compose build
```

Then run this script from the `deploy\k8s\helm` folder to deploy your local images:

```powershell
.\deploy-all.ps1 -imageTag linux-latest -useLocalk8s $true -imagePullPolicy Never
```
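
It's an assumption that the script's `-imagePullPolicy` parameter is passed through to the standard Kubernetes `imagePullPolicy` field of each container, roughly as in the hypothetical fragment below (the container and image names are illustrative). With `Never`, the kubelet only uses an image that is already present on the node, such as the ones you just built locally. If the image isn't there, the pod fails to start instead of pulling the image from a registry.

```yaml
# Hypothetical container spec fragment: run only the locally built image.
containers:
  - name: webmvc                       # hypothetical container name
    image: eshop/webmvc:linux-latest   # tag matches -imageTag linux-latest
    imagePullPolicy: Never             # never pull; the image must already exist on the node
```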

@@ -127,7 +131,7 @@ The parameter `useLocalk8s` to `$true`, forces the script to use `localhost` as

If you prefer to deploy the public images (built from the **dev** branch on each commit), just run this script:

```powershell
.\deploy-all.ps1 -imageTag dev
```

### Check deployment status

@@ -262,7 +266,13 @@ The reason is because MVC needs to access the Identity Server from both outside

Solving this requires some manual steps:

From the `deploy/k8s` folder, run the following command:

```powershell
kubectl apply -f .\nginx-ingress\local-dockerk8s\mvc-fix.yaml
```

This creates two additional ingresses (for MVC and the Identity API) for any valid DNS name that points to your machine. This enables the use of the `10.0.75.1` IP.
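
The contents of `mvc-fix.yaml` aren't reproduced on this page. As a hypothetical sketch of what one of those additional ingresses could look like, the manifest below routes requests for any host name on the `/webmvc` path to a `webmvc` service. It has no `host` field, so it matches any host name. The resource name, path, service name and port are assumptions. The manifest also uses the current `networking.k8s.io/v1` Ingress API, which may differ from the API version used in the repo.

```yaml
# Hypothetical ingress: no "host" in the rule, so it matches requests for any
# DNS name (or IP) that points to your machine.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: eshop-webmvc-fix          # hypothetical name
spec:
  ingressClassName: nginx         # assumes the NGINX ingress controller installed earlier
  rules:
    - http:
        paths:
          - path: /webmvc
            pathType: Prefix
            backend:
              service:
                name: webmvc      # hypothetical service name
                port:
                  number: 80
```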

Update the `configmap` of Web MVC by typing (**line breaks are mandatory**):

Mac-setup.md

@@ -61,9 +61,8 @@ This configuration is necessary so you don't get the following error when trying

That's because the default IP used to redirect to the Identity service/app (based on IdentityServer4) is `10.0.75.1`.

That IP is always set up when installing Docker for Windows on a Windows 10 machine. It's also used by Windows Server 2016 when using Windows Containers.

eShopOnContainers uses that IP as the default so that anyone testing the app doesn't need to configure further settings. However, "Docker for Mac" doesn't use that IP, so you need to change the configuration.

@ -71,47 +70,84 @@ If you were to access the Docker containers from remote machines or mobile phone

|

||||

|

||||

### Setting up the docker-compose environment variables and settings

|

||||

|

||||

As explained [here by Docker](https://docs.docker.com/docker-for-mac/networking/#use-cases-and-workarounds),

|

||||

the Mac has a changing IP address (or none if you have no network access). From June 2017 onwards our recommendation is to connect to the special Mac-only DNS name docker.for.mac.localhost which will resolve to the internal IP address used by the host.

|

||||

As explained in the [networking page of Docker Desktop for Mac](https://docs.docker.com/docker-for-mac/networking/#use-cases-and-workarounds),

|

||||

the Mac has a changing IP address (or none if you have no network access). So the recommendation now is to connect to the special Mac-only DNS name `docker.for.mac.localhost` which will resolve to the internal IP address used by the host.

|

||||

|

||||

In the `docker-compose.override.yml` file, replace the IdentityUrl environment variable (or any place where the IP 10.0.75.1 is used) with:

|

||||

|

||||

```bash

|

||||

IdentityUrl=http://docker.for.mac.localhost:5105

|

||||

```yaml

|

||||

IdentityUrl=http://docker.for.mac.localhost:5105

|

||||

```

|

||||

|

||||

You could also set your real IP at the Mac's network adapter. But that would be a worse solution as it'll depend on the network you are connecting your Mac development machine..

|

||||

|

||||

Therefore, the WebMVC service definition at the `docker-compose.override.yml` should finally be configured as shown bellow:

|

||||

|

||||

```bash

|

||||

webmvc:

|

||||

environment:

|

||||

- ASPNETCORE_ENVIRONMENT=Development

|

||||

- ASPNETCORE_URLS=http://0.0.0.0:80

|

||||

- CatalogUrl=http://catalog.api

|

||||

- OrderingUrl=http://ordering.api

|

||||

- BasketUrl=http://basket.api

|

||||

- LocationsUrl=http://locations.api

|

||||

- IdentityUrl=http://docker.for.mac.localhost:5105

|

||||

- MarketingUrl=http://marketing.api

|

||||

- CatalogUrlHC=http://catalog.api/hc

|

||||

- OrderingUrlHC=http://ordering.api/hc

|

||||

- IdentityUrlHC=http://identity.api/hc

|

||||

- BasketUrlHC=http://basket.api/hc

|

||||

- MarketingUrlHC=http://marketing.api/hc

|

||||

- PaymentUrlHC=http://payment.api/hc

|

||||

- UseCustomizationData=True

|

||||

- ApplicationInsights__InstrumentationKey=${INSTRUMENTATION_KEY}

|

||||

- OrchestratorType=${ORCHESTRATOR_TYPE}

|

||||

- UseLoadTest=${USE_LOADTEST:-False}

|

||||

ports:

|

||||

- "5100:80"

|

||||

```

|

||||

```yaml

|

||||

webmvc:

|

||||

environment:

|

||||

- ASPNETCORE_ENVIRONMENT=Development

|

||||

- ASPNETCORE_URLS=http://0.0.0.0:80

|

||||

- CatalogUrl=http://catalog.api

|

||||

- OrderingUrl=http://ordering.api

|

||||

- BasketUrl=http://basket.api

|

||||

- LocationsUrl=http://locations.api

|

||||

- IdentityUrl=http://docker.for.mac.localhost:5105

|

||||

- MarketingUrl=http://marketing.api

|

||||

- CatalogUrlHC=http://catalog.api/hc

|

||||

- OrderingUrlHC=http://ordering.api/hc

|

||||

- IdentityUrlHC=http://identity.api/hc

|

||||

- BasketUrlHC=http://basket.api/hc

|

||||

- MarketingUrlHC=http://marketing.api/hc

|

||||

- PaymentUrlHC=http://payment.api/hc

|

||||

- UseCustomizationData=True

|

||||

- ApplicationInsights__InstrumentationKey=${INSTRUMENTATION_KEY}

|

||||

- OrchestratorType=${ORCHESTRATOR_TYPE}

|

||||

- UseLoadTest=${USE_LOADTEST:-False}

|

||||

ports:

|

||||

- "5100:80"

|

||||

```

|

||||

|

||||

You also have to update the `.env` file as follows:

|

||||

|

||||

```yaml

|

||||

ESHOP_EXTERNAL_DNS_NAME_OR_IP=docker.for.mac.localhost

|

||||

```

|

||||

|

||||

> **IMPORTANT**

|

||||

>

|

||||

> If you've already encountered the error shown above, when trying to sign in, then **you have to delete the `sqldata` microservice**, because the **IdentityServer** database would've already been seeded, allowing only connections from `localhost`.

|

||||

|

||||

When starting up for the first time, the **IdentityServer** database is seeded with the allowed clients, taking the information from the environment variables below, in `docker-compose.override.yml`.

|

||||

|

||||

```yaml

|

||||

identity-api:

|

||||

environment:

|

||||

- ASPNETCORE_ENVIRONMENT=Development

|

||||

- ASPNETCORE_URLS=http://0.0.0.0:80

|

||||

- SpaClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5104

|

||||

- XamarinCallback=http://${ESHOP_PROD_EXTERNAL_DNS_NAME_OR_IP}:5105/xamarincallback

|

||||

- ConnectionString=${ESHOP_AZURE_IDENTITY_DB:-Server=sqldata;Database=Microsoft.eShopOnContainers.Service.IdentityDb;User Id=sa;Password=Pass@word}

|

||||

- MvcClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5100

|

||||

- LocationApiClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5109

|

||||

- MarketingApiClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5110

|

||||

- BasketApiClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5103

|

||||

- OrderingApiClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5102

|

||||

- MobileShoppingAggClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5120

|

||||

- WebShoppingAggClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5121

|

||||

- WebhooksApiClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5113

|

||||

- WebhooksWebClient=http://${ESHOP_EXTERNAL_DNS_NAME_OR_IP}:5114

|

||||

- UseCustomizationData=True

|

||||

- ApplicationInsights__InstrumentationKey=${INSTRUMENTATION_KEY}

|

||||

- OrchestratorType=${ORCHESTRATOR_TYPE}

|

||||

ports:

|

||||

- "5105:80"

|

||||

|

||||

```

|

||||

|

||||

If you re-deploy with `docker-compose up`, now the login page should work properly, as in the screenshot below.

|

||||

|

||||

NOTE: For some reason, if using SAFARI browser, it cannot reach docker.for.mac.localhost but using Chrome in Mac, it works with no issues. Since the usage of docker.for.mac.localhost is just for development purposes, just use Chrome for tests.

NOTE: For some reason, the Safari browser can't reach `docker.for.mac.localhost`, but Chrome on Mac works with no issues. Since `docker.for.mac.localhost` is only used for development purposes, just use Chrome for tests.

@ -152,13 +188,6 @@ While building the docker images should take between 15 and 30 minutes to comple

The first time you run this command it'll take additional time, as it needs to pull/download the `dotnet/core/aspnet` and SDK images.

Later on you can try adding a parameter to speed up the image building process:

```console
cd eShopOnContainers
docker-compose build --build-arg RESTORECMD=scripts/restore-packages
```

When the `docker-compose build` command finishes, you can list the created images with the Docker CLI, using the following command:

```console

@ -227,7 +256,7 @@ For running just the Docker containers and web apps, you'd just need the .NET Co

But if you want to try the eShopOnContainers mobile app, you'll need Xamarin and, therefore, the iOS and Android platforms, too. Those mobile platforms are optional for this Wiki walkthrough, though.

**Make sure you have the latest SDK 2.2 version from <https://dotnet.microsoft.com/download/dotnet-core/2.2> installed.**

**Make sure you have the latest SDK 3.0 version from <https://dotnet.microsoft.com/download/dotnet-core/3.0> installed.**

### Open the solution with Visual Studio for Mac

@ -235,7 +264,7 @@ Run Visual Studio for Mac and open the solution `eShopOnContainers-ServicesAndWe

If you just want to run the containers/microservices and web apps, do NOT open the other solutions, like `eShopOnContainers.sln`, as those solutions also open the Xamarin projects, which might slow you down when testing due to additional dependencies in VS.

After opening the `eShopOnContainers-ServicesAndWebApps.sln` solution for the first time, it is recommended to wait for a few minutes as VS will be restoring many NuGet packages and the solution won't be able to compile or run until it gets all the nuGet packages dependencies, in the first place (this time is only needed the first time you open the solution. Next times it is a lot faster).

After opening the `eShopOnContainers-ServicesAndWebApps.sln` solution for the first time, it's recommended to wait a few minutes, as VS will be restoring many NuGet packages and the solution won't compile or run until it gets all the NuGet package dependencies (this wait is only needed the first time you open the solution; later on it's a lot faster).

This is VS for Mac with the `eShopOnContainers-ServicesAndWebApps.sln` solution.

Resiliency-and-Service-Mesh.md

@ -0,0 +1,92 @@

> **CONTENT**

- [Service Mesh](#service-mesh)
- [Service Mesh and eShopOnContainers](#service-mesh-and-eshoponcontainers)
  - [Why Linkerd?](#why-linkerd)
- [Installing the Mesh](#installing-the-mesh)
- [Enabling Mesh](#enabling-mesh)
- [Service profiles](#service-profiles)

Previous versions of eShopOnContainers used the [Polly library](https://github.com/App-vNext/Polly) to provide resiliency scenarios. Polly is a fantastic open source library that provides advanced resiliency scenarios and patterns like retries (with exponential backoff) or circuit breakers.

This version of eShopOnContainers drops the use of Polly in the following cases:

- HTTP REST calls between microservices
- gRPC calls between microservices

Polly is still used to guarantee resiliency in database connections and RabbitMQ/Azure Service Bus connections, but it's no longer used for resiliency between synchronous microservice-to-microservice communication.

## Service Mesh

In production scenarios based on Kubernetes, using a [Service Mesh](https://docs.microsoft.com/azure/aks/servicemesh-about) is a good option to provide resiliency between your services.

A Service Mesh is a product that offers resiliency, observability, and other features to the workloads running on your cluster. There are various meshes you can use and each one has its own characteristics, so you should carefully evaluate which one best suits your needs. Once installed, the mesh monitors all the traffic between your services and applies the needed policies. Those policies could be for resiliency (like retries and/or circuit breakers) or for other tasks (like encrypting all traffic inside the cluster).

When you use a Mesh for resiliency, nothing special is needed **in your code**. The Mesh is a pure infrastructure concept, so your Kubernetes files will be affected, but your code won't. If the Mesh is controlling the network and applying a retry policy, the retries are made automatically (at the Mesh level) without your code even noticing. From your code's perspective, you just make a single call that either succeeds or fails after some retries. If the mesh has opened a circuit breaker, your code won't know that either: all your network calls will simply fail (until the circuit is closed again).

This simplifies your code and allows you to focus on the business requirements, while the mesh applies the needed policies.
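To make the "single call, automatic retries" idea concrete, here's a minimal Python sketch that simulates a mesh proxy applying a retry policy. It's an illustration under simple assumptions (a fixed number of attempts, no backoff, and a hypothetical `UpstreamError` standing in for a failed response), not how Linkerd is implemented. The application calls `sidecar_forward` once and only ever sees the final outcome.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class UpstreamError(Exception):
    """Hypothetical retryable failure returned by the upstream service."""


def sidecar_forward(send: Callable[[], T], max_attempts: int = 3) -> T:
    """Forward one application request, retrying failed attempts.

    Plays the role of the mesh proxy: the caller invokes it once and never sees
    the intermediate failures, only the final success or the last error.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[UpstreamError] = None
    for _ in range(max_attempts):
        try:
            return send()
        except UpstreamError as err:
            last_error = err  # swallowed here; the application isn't notified
    assert last_error is not None
    raise last_error


def flaky_upstream(failures: int):
    """Hypothetical upstream that fails `failures` times, then succeeds."""
    attempts = {"count": 0}

    def send() -> str:
        attempts["count"] += 1
        if attempts["count"] <= failures:
            raise UpstreamError("503 Service Unavailable")
        return "200 OK"

    return send, attempts


send, attempts = flaky_upstream(failures=2)
print(sidecar_forward(send))  # the application makes a single call; prints: 200 OK
print(attempts["count"])      # but the upstream saw three attempts; prints: 3
```

The retry loop lives entirely outside the application function, which is the point of delegating resiliency to the mesh: changing the policy means changing infrastructure configuration, not business code.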

## Service Mesh and eShopOnContainers

The reason for dropping Polly in microservice-to-microservice communication is to demonstrate the use of a Service Mesh. One of the reasons to use a Service Mesh is to delegate communication resiliency to it, and set policies for retries, circuit breakers, and QoS.

To use a service mesh, eShopOnContainers has to be deployed to a Kubernetes cluster. When eShopOnContainers runs on a Docker host (deployed with docker-compose), the service mesh can't be used and, in this case, there's no built-in resiliency for these communications.

eShopOnContainers is ready to use [Linkerd](https://linkerd.io) as its Service Mesh. There were several options to choose from, but Linkerd was chosen mainly for its ease of installation and configuration, and because it has a minimal impact on the cluster where it's installed.

### Why Linkerd?

There are various meshes out there, and selecting the right one for your project can be a hard choice. Every mesh offers a set of features and follows different approaches to solve the same set of problems. Depending on your experience, deployment operations, code management, and requirements, one mesh can fit better than others.

So, **before choosing a specific service mesh, evaluate various options, explore some of them, and make a decision based on your experience and needs**. Linkerd was chosen for eShopOnContainers, but this doesn't mean that Linkerd should be the mesh for your projects. In the future, eShopOnContainers might be integrated with other meshes as well.

Before selecting a Mesh, you should ask yourself some questions:

- Do you _really_ need the Mesh? A Mesh is a non-trivial piece of infrastructure that impacts your solution. Don't add complexity if it's not needed. Some of the problems that a Mesh solves can be solved in other ways (for example, resiliency can be handled by Polly, A/B testing by using different services and a standard ingress controller, and so on).

- Can your infrastructure support the Mesh? The mesh is not free. Usually every Mesh comes with a set of containers that run as sidecars next to all your workloads, plus additional containers running as a control plane. Those containers require CPU and memory to run.

If you evaluate those questions and come up with the answer that you want to use a Mesh, then you need to choose the right one for you. There are various options out there:

- [Istio](https://istio.io/): Istio is a full-featured and highly customizable Mesh. It offers a lot of powerful features, but comes with a steep learning curve and a complex deployment (for example, 80+ CRDs are added to your cluster). Although a basic installation of Istio is not complex, getting the real benefits requires a significant amount of work. Istio integrates a lot of different products (Envoy, Grafana, Prometheus, Jaeger, Kiali), each one playing a specific role in the mesh.

- [Consul](https://www.consul.io/mesh.html): Consul from HashiCorp is another option for a service Mesh. Like Istio, it uses Envoy as its sidecar proxy and offers a wide set of advanced capabilities.

- [Linkerd](https://linkerd.io/2/overview/): Linkerd (please note that in eShopOnContainers, "Linkerd" always refers to **Linkerd 2**) is a lightweight and easy-to-install service mesh. It doesn't offer the same broad range of capabilities as Istio or Consul, but it's easier to install and start with.

**Linkerd was chosen for eShopOnContainers because it's easy to install and set up**. Other meshes offer a broader range of services, but most of them go beyond the scope of a project like eShopOnContainers. However, remember: you must evaluate the options against your needs before choosing your mesh, if any.

## Installing the Mesh

To use eShopOnContainers with Linkerd, you need to install Linkerd in your cluster first. This is an administrative task performed only once. The process is very straightforward and clearly described on its [installation page](https://linkerd.io/2/getting-started/). Just follow steps 0 through 3.

## Enabling Mesh

Once Linkerd is installed, you can deploy eShopOnContainers. To enable the integration with Linkerd, set the `useMesh` parameter to `$true` when running the `deploy-all.ps1` script. For the curious ones, this parameter passes the value `true` for `inf.mesh.enabled` to all Helm charts. When this value is enabled, the Helm charts:

1. Add the `linkerd.io/inject: enabled` annotation to all needed deployments.
2. Add the annotations declared in the `ingress_values.yaml` file to all ingress resources. The provided `ingress_values.yaml` is as follows:

```yaml
ingress:
  mesh:
    annotations:
      nginx.ingress.kubernetes.io/configuration-snippet: |
        proxy_set_header l5d-dst-override $service_name.$namespace.svc.cluster.local:$service_port;
        proxy_hide_header l5d-remote-ip;
        proxy_hide_header l5d-server-id;
```

This is the specific configuration needed to enable the integration between the NGINX ingress controller (and/or HTTP Application Routing, which is derived from NGINX) and Linkerd. If you use another ingress controller, you'll need to update this file accordingly, following the [Linkerd ingress integration](https://linkerd.io/2/tasks/using-ingress/) instructions.
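As a quick illustration of what the `proxy_set_header` line does, the Python sketch below builds the same header value NGINX computes for each request from its `$service_name`, `$namespace`, and `$service_port` variables. Roughly speaking, the NGINX ingress controller sends requests straight to pod endpoints, so this header is how Linkerd learns which Kubernetes Service the request was meant for (which is what lets service-level features such as service profiles apply); the Linkerd ingress integration instructions are the authoritative explanation. The service and namespace names in the usage line are hypothetical.

```python
def l5d_dst_override(service_name: str, namespace: str, service_port: int) -> str:
    """Build the value the NGINX snippet puts in the l5d-dst-override header.

    The result is the cluster-local DNS name of the Kubernetes Service plus its port.
    """
    return f"{service_name}.{namespace}.svc.cluster.local:{service_port}"


# Hypothetical service and namespace names, for illustration only.
print(l5d_dst_override("basket-api", "default", 80))
# prints: basket-api.default.svc.cluster.local:80
```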

## Service profiles

By default, Linkerd only monitors the network status and gives you detailed results that you can view by using the `linkerd` CLI tool.

To enable retries and other network policies, you must declare a _service profile_ for each service you want Linkerd to control. A detailed explanation of service profiles is available in the [Linkerd documentation](https://linkerd.io/2/tasks/setting-up-service-profiles/).

For reference, service profiles for the Basket and Catalog APIs are provided. Feel free to update them, play with them, and explore all the Linkerd scenarios!

You can find the service profiles in the `deploy/k8s/linkerd` folder. Just use `kubectl apply` to apply them to the cluster. Once a service profile is applied, Linkerd can give you detailed statistics (by route) and apply retries and other policies.
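Retries can amplify an outage if every failed request is retried. In a Linkerd service profile, routes are marked as retryable (`isRetryable: true`) and retries are capped by a `retryBudget` with `retryRatio`, `minRetriesPerSecond`, and `ttl` fields. The Python sketch below is a simplified, hypothetical model of how such a budget could work: within a sliding window of `ttl` seconds, a retry is allowed only while the number of retries stays below `min_retries_per_second * ttl + retry_ratio * original_requests`. It isn't Linkerd's actual algorithm.

```python
from collections import deque
from typing import Deque


class RetryBudget:
    """Simplified sliding-window retry budget (hypothetical model, not Linkerd's code)."""

    def __init__(self, retry_ratio: float = 0.2, min_retries_per_second: float = 10,
                 ttl: float = 10.0) -> None:
        self.retry_ratio = retry_ratio
        self.min_retries_per_second = min_retries_per_second
        self.ttl = ttl
        self._requests: Deque[float] = deque()  # timestamps of original requests
        self._retries: Deque[float] = deque()   # timestamps of granted retries

    def _evict(self, now: float) -> None:
        # Forget everything that has fallen out of the ttl window.
        for timestamps in (self._requests, self._retries):
            while timestamps and now - timestamps[0] >= self.ttl:
                timestamps.popleft()

    def record_request(self, now: float) -> None:
        self._evict(now)
        self._requests.append(now)

    def try_retry(self, now: float) -> bool:
        """Return True (and spend budget) if a retry is allowed at time `now`."""
        self._evict(now)
        allowed = (self.min_retries_per_second * self.ttl
                   + self.retry_ratio * len(self._requests))
        if len(self._retries) < allowed:
            self._retries.append(now)
            return True
        return False


# 10 original requests with a 20% ratio and no minimum floor allow 2 retries.
budget = RetryBudget(retry_ratio=0.2, min_retries_per_second=0, ttl=10.0)
for _ in range(10):
    budget.record_request(now=0.0)
print([budget.try_retry(now=1.0) for _ in range(3)])  # prints: [True, True, False]
```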

**Note** Previous versions of eShopOnContainers had specific business scenarios to demo the [circuit breaker](https://en.wikipedia.org/wiki/Circuit_breaker_design_pattern) pattern. These scenarios have been removed because, when using a mesh, the circuit breakers are applied by the mesh under the hood, and the caller doesn't receive any specific information that a request has been aborted by the circuit breaker. Right now there's no specific option in Linkerd2 to set a circuit breaker policy. This could change in the future as the mesh itself evolves.
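The reason the caller gets no specific information can be shown with a generic circuit breaker. The Python sketch below is a minimal illustration (not Linkerd's or Polly's implementation; the threshold, timeout, and injectable clock are assumptions): once the circuit is open, it fails fast with a plain `ConnectionError`, the same exception a real network failure raises, so calling code can't tell the two apart.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Generic circuit breaker sketch that fails fast with an ordinary ConnectionError."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None

    def call(self, send: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: no request is sent, and the error looks like any network failure.
                raise ConnectionError("upstream unavailable")
            self.opened_at = None  # half-open: let the next request through as a trial
        try:
            result = send()
        except ConnectionError:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.consecutive_failures = 0  # a success closes the circuit
        return result
```

From the caller's side, a request rejected by the open circuit and a request that really hit a dead endpoint produce the same exception, which mirrors why the dedicated circuit-breaker demo scenarios were dropped.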

@ -1,89 +0,0 @@

# Resiliency and Service Mesh

Previous versions of eShopOnContainers used the [Polly library](https://github.com/App-vNext/Polly) to provide resiliency scenarios. Polly is a fantastic open source library that provides advanced resiliency scenarios and patterns like retries (with exponential backoff) or circuit breakers.

This version of eShops drops the use of Polly in the following cases:

* Http REST calls between microservices

* gRPC calls between microservices

Polly is still used to guarantee resiliency in database connections and RabbitMQ/Azure Service Bus connections, but is no longer used for resiliency between synchronous microservice-to-microservice communication.

## Service Mesh

In a production scenarios based on Kubernetes using a [Service Mesh](https://docs.microsoft.com/en-us/azure/aks/servicemesh-about) is a good option to provide resilienciy between your services.

A Service Mesh is a product that offers resiliency, observability and others features to your workloads running on the cluster. There are various meshes you can use and each one have its own characteristics, so you should evaluate carefully which one suits better your needs. Once installed the mesh will monitor all the traffic between your services and apply the needed policies. Those policies could be for resiliency (like using retries and/or circuit breakers) or for other tasks (like encrypt all traffic inside the cluster).

When you use a Mesh for resiliency, nothing special is needed **in your code**. The Mesh is a pure infrastructure concept, so your Kubernetes files will be affected, but your code won't. If the Mesh is controlling the network and applying a specific policy for making retries, these retries will be made automatically (at the Mesh level) whithout your code having any notice. From your code perspective, you have done a single call, that can either succeed of fail after some retries. If the mesh is applying an open circuit-breaker, your code won't know that: simply all your network calls will fail (until the circuit is closed again).

This simplifies your code, as allows you to focus on the business requeriments, and let the mesh apply the needed policies.

## Service Mesh and eShopOnContainers

The reason to drop Polly for microservice-to-microservice communications is to show the use of a [Service Mesh](https://docs.microsoft.com/en-us/dotnet/architecture/cloud-native/service-mesh-communication-infrastructure). One of the reasons to use a Service Mesh is to delegate on it the communications resiliency and set policies of retries, circuit breakers and QoS.

To use service mesh, is needed to deploy eShopOnContainers on a Kubernetes cluster. Using eShopOnContainers from docker host (using compose) can't use Service Mesh and in this case no resiliency for the communications is built.

eShopOnContainers is ready to use [Linkerd](https://linkerd.io) as Service Mesh. There were several options to choose from, we choose Linkerd mainly for its easy installation and configuration, and because has minimal impact on the cluster where is installed.

### Why Linkerd?

There are a various meshes out here, and selecting the right service mesh for your project can be a hard choice. Every mesh offers a set of features and follow distinct approaches to solve the same set of problems. Based on your experience, deployment operations, code management and requirements one mesh can fit better than other.

So, **before choosing a specific service mesh, evaluate various options, play with some of them, and take a decision based on your experience and needs**. For eShopOnContainers we choose Linkerd as our current Mesh but this don't mean that Linkerd should be your mesh for your projects. In the future we don't discard to integrate eShop with other meshes as well.

Before selecting a Mesh, you should ask yourself some questions:

* Do you _really_ need the Mesh? A Mesh is a non-trivial piece of infrastructure that impacts your solution. Don't add complexity if it is not needed. Some of the problems that a Mesh solves can be solved using other ways (i. e. resiliency can be solved by Polly, A/B testing can be solved using different services and with standard ingress controller).

* Can your infrastructure support the Mesh? The mesh is not free. Usually every Mesh came with a set of containers run as a side-car containers for all your workload, plus additional containers running as a control plane. Those containers require CPU and memory to run.

If you evaluate those questions and come up with the answer that you want to use a Mesh, then you need to choose the right one for you. There are various options out here:

* [Istio](https://istio.io/): Istio is a full featured and highly customizable Mesh. It offers a lot of powerful features, but comes with a high learning curve and complex deployment (i. e. 80+ CRDs are added to your cluster). Although a basic installation of istio is not complex, get the real value of it requires significant ammount of work. Istio integrates a lot of different products (Envoy, Grafana, Prometheus, Jaeger, Kali) playing everyone a specific role inside the mesh.

* [Consul](https://www.consul.io/mesh.html): Consul from Hashicorp is another option for a service Mesh. Like istio it is based using Envoy a sidecars and offers a wide set of advanced capabilities.

* [Linkerd](https://linkerd.io/2/overview/): Linkerd (please note that we are always referring to Linkerd 2 when using the word "Linkerd"), is a lightweight and easy-to-install service Mesh. Do not offer the same broad range of capabilities that Istio or Consul, but is easier to install and start with.

**In eShopOnContainers we choose Linkerd, for its easy installation and setup**. Other meshes offers a broad range of services, but most of them go beyond the scope of a project like eShopOnContainers. However remind: evaluate the options, your needs before choosing your mesh (if any).

## Installing the Mesh

To use eShopOnContainers under Linkerd, you need to install Linkerd first in your cluster. This is an administrative task performed only once. We don't provide scripts for Linkerd installation, but the process is very straightforward and is clearly described on its [installation page](https://linkerd.io/2/getting-started/). Just follow steps 0 through 3.

## Enabling Mesh

Once Linkerd is installed you can deploy eShopOnContainers. To enable the integration with Linkerd, pass the parameter `useMesh` to `$true` when running the `deploy-all.ps1` script. For the curious what this parameter does is pass the value `inf.mesh.enabled` to `true` to all helm charts. When this value is enabled the helm charts do:

1. Adds the `linkerd.io/inject: enabled` to all needed deployments.

2. Adds the annotations declared in file `ingress_values.yaml` to all ingress resources. Provided `ingress_values.yaml` is as follows:

```yaml
ingress:
  mesh:
    annotations:
      nginx.ingress.kubernetes.io/configuration-snippet: |
        proxy_set_header l5d-dst-override $service_name.$namespace.svc.cluster.local:$service_port;
        proxy_hide_header l5d-remote-ip;
        proxy_hide_header l5d-server-id;
```

This is the specific configuration needed to enable the integration between NGINX ingress (and/or Http Application Routing as is derived from NGINX) and Linkerd. If you use other ingress controller you will need to update this file accordingly, following the [Linkerd ingress integration](https://linkerd.io/2/tasks/using-ingress/) instructions.

## Service profiles

By default Linkerd only monitors the network status and gives you detailed results that you can view by using the `linkerd` CLI tool.

To enable retries and other network policies you must declare a _service profile_ for the specified service you want to be controlled. A very detailed explanation about service profiles is in the [Linkerd documentation](https://linkerd.io/2/tasks/setting-up-service-profiles/)

Just for reference we include service profiles for basket and catalog API. Feel free to update them, play with it and explore all Linkerd scenarios!

You can find the service profiles in folder `/k8s/linkerd`. Just use `kubectl apply` to apply them to the cluster. Once a service profile is applied, Linkerd is able to give you detailed statistics (by route) and apply retries and other policies.

**Note** Previous versions of eShopOnContainers had specific business scenarios to demo the [circuit breaker](https://en.wikipedia.org/wiki/Circuit_breaker_design_pattern) pattern. These secenarios have been removed as, when using Mesh, the circuit breakers are applied by the Mesh under-the-hoods, and the caller do not receive any specific information that a request has been aborted by the circuit breaker. Right now in Linkerd2 there is no specific option to set a circuit breaker policy. This could change in the future as the Mesh itself evolves.


@ -99,7 +99,7 @@ git clone https://github.com/dotnet-architecture/eShopOnContainers.git

```console
cd eShopOnContainers
docker-compose build --build-arg RESTORECMD=scripts/restore-packages
docker-compose build
```

While building the docker images, you should see something like the following image, and the process should take between 10 and 30 minutes to complete, depending on the system speed.

@ -140,7 +140,7 @@ If you want to explore the code and debug the application to see it working, you

You have to install at least VS 2017 (15.9) and you can install the latest release from https://visualstudio.microsoft.com/vs/.

**Make sure you have the latest SDK 2.2 version from <https://dotnet.microsoft.com/download/dotnet-core/2.2> installed.**

**Make sure you have the latest SDK 3.0 version from <https://dotnet.microsoft.com/download/dotnet-core/3.0> installed.**

Upon running the installer, select the following workloads depending on the apps you intend to test or work with:

@ -19,7 +19,7 @@

## Explore

- [Architecture](Architecture)
- [BFF implementation](BFFs)
- [BFF implementation](BFF-implementation)
- [Application](Explore-the-application)
- [Code](Explore-the-code)
- [Simplified CQRS & DDD](Simplified-CQRS-and-DDD)

@ -30,7 +30,7 @@

- Logging and Monitoring
- [Serilog & Seq](Serilog-and-Seq)
- [Using HealthChecks](Using-HealthChecks)
- [Resiliency and Service Mesh](Resiliency-and-mesh)
- [Resiliency and Service Mesh](Resiliency-and-Service-Mesh)
- [ELK Stack](ELK-Stack)
- [Application Insights](Application-Insights)
- Tests

gRPC.md

@ -1,73 +1,82 @@

# gRPC

> **CONTENT**

One of the big news on netcore 3.0 is the native support for gRPC. eShopOnContainers makes use of gRPC for internal microservice-to-microservice synchronous communication. Note that, in eShop most of the communication between microservices is decoupled and asynchronous using an Event Bus (we support RabbitMQ or Azure Service Bus).

- [gRPC usage in eShopOnContainers](#grpc-usage-in-eshoponcontainers)
- [gRPC implementation in eShopOnContainers](#grpc-implementation-in-eshoponcontainers)
- [Generating server or client stubs from proto file in .NET Core 3](#generating-server-or-client-stubs-from-proto-file-in-net-core-3)
- [Creating the gRPC server](#creating-the-grpc-server)
- [Adding the gRPC pipeline into ASP.NET Core](#adding-the-grpc-pipeline-into-aspnet-core)
- [Creating the gRPC client](#creating-the-grpc-client)
- [Using gRPC without TLS](#using-grpc-without-tls)
- [More information](#more-information)

gRPC is a high-perfomance communication protocol, based on HTTP/2 and protocol buffers. It should be the primary choice for direct communication between services (as oposed to other protocols like AMQP used for decoupled communication like queues or pub/sub).

One of the big new features in .NET Core 3.0 is native support for [gRPC](https://grpc.io/). eShopOnContainers uses gRPC for internal microservice-to-microservice synchronous communication. Note that in eShopOnContainers most of the communication between microservices is decoupled and asynchronous, using an Event Bus (supporting either RabbitMQ or Azure Service Bus).

Its benefits over using directly HTTP with JSON are:

gRPC is a high-performance communication protocol based on HTTP/2 and protocol buffers. It should be the primary choice for direct synchronous communication between services (as opposed to protocols like AMQP, used for asynchronous communication such as queues or pub/sub).

* Protocol buffers are a binary, high-perfomance serialization mechanism. Depending on the language implementation protocol buffers can be up to 8x faster than JSON serialization while the messages can be around 60%-80% smaller.
* Supports streaming of data
* Contract between service and client is explicit (by using _proto_ files)

Its main benefits over using HTTP with JSON are:

- Protocol buffers are a binary, high-performance serialization mechanism. Depending on the language implementation, protocol buffers can be up to 8x faster than JSON serialization, while the messages can be around 60%-80% smaller.
- Support for data streaming
- Explicit contract between service and client (defined in _proto_ files)
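Part of the size advantage comes from the wire format itself: a protocol buffers message carries a small numeric key and a compact base-128 varint instead of the field name and decimal text that JSON sends. The hand-rolled Python sketch below encodes a single unsigned integer field to show this. It's only an illustration: it assumes a hypothetical message with one field `id = 1` and doesn't use the protobuf library.

```python
import json


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, low-order group first, high bit set on all but the last byte."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # more bytes follow
        else:
            out.append(low7)  # last byte
            return bytes(out)


def encode_varint_field(field_number: int, value: int) -> bytes:
    """One varint field: key = (field_number << 3) | wire type 0, followed by the value."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)


# Hypothetical message with a single field: uint32 id = 1;
binary = encode_varint_field(1, 150)
text = json.dumps({"id": 150}, separators=(",", ":"))
print(binary.hex(), len(binary))  # prints: 089601 3
print(text, len(text))            # prints: {"id":150} 10
```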

## gRPC usage in eShopOnContainers

In current implementation use of gRPC is limited to the communication between aggregators and micro services. We have following synchronous communications between services in eShop:

In the current implementation, the use of gRPC is limited to the communication between aggregators and microservices. eShopOnContainers currently has the following synchronous communication paths between services:

1. External clients (i. e. Xamarin App or Browser) to Api Gateways (BFFs): Use HTTP/REST
1. External clients (that is, the Xamarin app or a browser) to API Gateways (BFFs): Use HTTP/REST
2. From BFFs to Aggregators: Use HTTP/REST
   * This is basically a request forward. Based on route request is forwarded from the BFF to the aggregator. This is performed for "logically complex" requests, when a single client call involves various microservices that are coordinated from the aggregator.
   - This is basically a request forward. Based on the request route, it's forwarded from the BFF to the aggregator. This is performed for "logically complex" requests, when a single client call involves various microservices that are coordinated by the aggregator.
3. From BFFs to microservices: Use HTTP/REST
   * This is basically a request forward. Based on route request is forwarded from the BFF to the internal microservice. This is performed for simple CRUD requests.
   - This is basically a request forward. Based on the request route, it's forwarded from the BFF to the internal microservice. This is performed for simple CRUD requests.
4. From Aggregators to microservices: Use gRPC
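The forwarding rule described above ("based on the request route, it's forwarded" to either an aggregator or a microservice) can be modeled as a longest-prefix match over a route table. The Python sketch below is only an illustration: the route prefixes and upstream names are hypothetical and don't reproduce the actual Envoy configuration used by the eShopOnContainers BFFs.

```python
from typing import Dict, Optional, Tuple

# Hypothetical route table for one BFF: path prefix -> upstream.
ROUTES: Dict[str, str] = {
    "/catalog-api/": "catalog-api",    # simple CRUD: straight to the microservice
    "/basket-api/": "basket-api",
    "/shopping/": "web-shopping-agg",  # "logically complex" request: to the aggregator
}


def pick_upstream(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching prefix, or None if no route matches."""
    best: Optional[Tuple[str, str]] = None
    for prefix, upstream in routes.items():
        if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
            best = (prefix, upstream)
    return best[1] if best else None


print(pick_upstream("/catalog-api/items/1"))  # prints: catalog-api
print(pick_upstream("/shopping/basket"))      # prints: web-shopping-agg
```

Longest-prefix matching keeps more specific routes (for example, a versioned path) from being shadowed by a shorter, more general prefix.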

Currently we are not transcoding from gRPC to HTTP/REST. This would allow use gRPC from the BFFs to both aggregators and microservices while keeping a HTTP/REST interfaceto the clients. This gRPC<->HTTP/REST translation could be done at BFF level.

Currently there's no transcoding from gRPC to HTTP/REST. Such transcoding would allow the use of gRPC from the BFFs to both aggregators and microservices, while keeping an HTTP/REST interface to the clients. This gRPC<->HTTP/REST translation could be done at the BFF level.

The following microservices expose gRPC endpoints:

* Ordering API
* Catalog API
* Basket API

- Ordering API
- Catalog API
- Basket API

And the following aggregators are gRPC clients:

* Mobile Shopping
* Web Shopping

- Mobile Shopping
- Web Shopping

## gRPC implementation in eShopOnContainers

gRPC is language agnostic: all services are defined using _proto_ files (usually with the `.proto` extension). These files are written in the [protocol buffer language](https://developers.google.com/protocol-buffers/docs/proto) and define the interface of the service. From a _proto_ file, the code for the server and the client can be generated for every supported language. The canonical tool is _protoc_, which supports generating C# code.

Starting from netcore3, gRPC is deeply integrated in both, the tooling and the framework, to make the experience of using gRPC as seamless as possible.

Starting from .NET Core 3, gRPC is deeply integrated into both the tooling and the framework, to make using gRPC as seamless as possible.

### Generating server or client stubs from proto file in Net Core 3

### Generating server or client stubs from proto file in .NET Core 3

The tooling, integrated in msbuild (so it can be used by Visual Studio but also by the `dotnet build` SDK command), allows the generation of the code needed to create a gRPC server or client based on a _proto_ file. The _proto_ file has to be referenced in the `csproj` using a `<ProtoBuf>` tag (inside a `<ItemGroup>`):

The tooling, integrated in `msbuild` (so it can be used by both Visual Studio and the `dotnet build` SDK command), generates the code needed to create a gRPC server or client from a _proto_ file. The _proto_ file has to be referenced in the `csproj` using a `<Protobuf>` element inside an `<ItemGroup>`:

```xml
<ItemGroup>
  <Protobuf Include="Protos\catalog.proto" GrpcServices="Client" />
</ItemGroup>
```

The `GrpcServices` attribute specifies whether a `Server` or a `Client` stub has to be generated. `Both` is also accepted and is the default.

>**Note** You can include as many `<Protobuf>` tags as you need.

When you compile the code (either by running _Build_ from Visual Studio or `dotnet build`), all the code is generated and placed in the `obj` folder. This is intentional: generated code should never be committed to the source control repository.
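
The following is a minimal sketch of what a generated `Client` stub gives you. It calls the `Catalog` service defined later in this section, the way a BFF would. Several names are assumptions that do not come from this page:

- the `Grpc.Net.Client` package is installed;
- the generated code lives in a `CatalogApi` namespace;
- the Catalog endpoint is at the internal address `http://catalog-api:81`;
- `CatalogItemRequest` has an `id` field.

Adjust these to your own _proto_ file and deployment.

```cs
using System;
using System.Threading.Tasks;
using Grpc.Net.Client;
using CatalogApi; // Assumption: namespace of the code generated from catalog.proto

public static class Program
{
    public static async Task Main()
    {
        // On .NET Core 3.x this switch is required to call an HTTP/2 endpoint
        // that is not protected by TLS (typical for internal microservices).
        AppContext.SetSwitch(
            "System.Net.Http.SocketsHttpHandler.Http2UnencryptedSupport", true);

        // Assumption: hypothetical internal address of the Catalog gRPC endpoint.
        using var channel = GrpcChannel.ForAddress("http://catalog-api:81");

        // Catalog.CatalogClient exists because the proto was referenced with GrpcServices="Client".
        var client = new Catalog.CatalogClient(channel);

        // Assumption: CatalogItemRequest has an `id` field (exposed as `Id` in C#).
        var item = await client.GetItemByIdAsync(new CatalogItemRequest { Id = 1 });

        // Generated protobuf messages override ToString() to print their JSON form.
        Console.WriteLine(item);
    }
}
```

Each `rpc` method gets both a blocking (`GetItemById`) and an asynchronous (`GetItemByIdAsync`) client method. The asynchronous one is usually the better fit inside an ASP.NET Core BFF.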

### Creating the gRPC server

The generated server stub code defines an abstract base class with one virtual method for every `rpc` method defined in the _proto_ file. You implement the service by deriving from this class and overriding those methods. Any method you don't override responds with an `Unimplemented` status.

So, given a _proto_ file that defines the following methods:

```proto
service Catalog {
  rpc GetItemById (CatalogItemRequest) returns (CatalogItemResponse) {}
  rpc GetItemsByIds (CatalogItemsRequest) returns (PaginatedItemsResponse) {}
}
```

An abstract `CatalogBase` class (nested inside a generated static `Catalog` class) will be generated.

All needed C# types for parameters and return values will be generated automatically.
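
The following is a minimal sketch of a server implementation for the `Catalog` service above. It assumes the generated code lives in a `CatalogApi` namespace, and that `CatalogItemRequest` and `CatalogItemResponse` expose `Id` and `Name` fields. These names and the placeholder response are illustrative assumptions, not the eShopOnContainers implementation.

```cs
using System.Threading.Tasks;
using Grpc.Core;
using CatalogApi; // Assumption: namespace of the code generated from catalog.proto

// Derives from the generated abstract base class and overrides its virtual methods.
public class CatalogService : Catalog.CatalogBase
{
    public override Task<CatalogItemResponse> GetItemById(
        CatalogItemRequest request, ServerCallContext context)
    {
        if (request.Id <= 0)
        {
            // Errors are reported to the caller as gRPC status codes, not HTTP status codes.
            throw new RpcException(
                new Status(StatusCode.InvalidArgument, "The item id must be positive"));
        }

        // Placeholder: a real service would load the item from its data store.
        // Assumption: CatalogItemResponse has `id` and `name` fields.
        var response = new CatalogItemResponse
        {
            Id = request.Id,
            Name = $"Item {request.Id}"
        };
        return Task.FromResult(response);
    }

    // GetItemsByIds is intentionally not overridden: the generated base
    // implementation answers with StatusCode.Unimplemented.
}
```

Because the generated methods are virtual rather than abstract, a service can be implemented incrementally. Calls to methods that are not yet overridden fail at runtime with `Unimplemented` instead of breaking the build.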

### Adding the gRPC pipeline into ASP.NET Core

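ASP.NET Core supports direct integration of the gRPC pipeline. First register the gRPC services with `services.AddGrpc()` in `ConfigureServices`. Then call the `MapGrpcService<TService>` extension method on the `IEndpointRouteBuilder` in the `Configure` method of your `Startup` class: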

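```cs
app.UseEndpoints(endpoints =>
{
    // CatalogService is the class deriving from the generated Catalog.CatalogBase
    endpoints.MapGrpcService<CatalogService>();
});
```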

gRPC works with HTTP/2 only.

However, internal microservices may not have TLS-enabled endpoints (because those endpoints are internal). Without TLS, the client and the server cannot negotiate HTTP/2 through ALPN. In this case you have two options:

* Open a single Kestrel endpoint, listening on HTTP/2
