# Superseded

This wiki page has been superseded by the setup pages linked at the end of this page.

# Windows dev machine

## Recommended Hardware requirements:

- 16 GB of RAM: Docker Community Edition (aka Docker for Windows) needs Hyper-V to run the Linux Docker host, and the solution also runs a SQL Server container and a Redis container, so an 8 GB machine might be too tight.

## Software requirements for Windows are:

- Docker Community Edition (aka Docker for Windows): requires Windows 10 Pro 64-bit with Hyper-V enabled.
- Latest .NET Core 2.1 SDK from: https://www.microsoft.com/net/download
- (Optional) Visual Studio 2017 **15.8** or later: much better for debugging multi-container apps.

The requirements for VS 2017 are:

**Supported Operating Systems**

Visual Studio 2017 will install and run on the following operating systems:

- Windows 10 version 1507 or higher (Windows 10 version 1803 recommended): Home, Professional, Education, and Enterprise (LTSB is not supported)
- Windows Server 2016: Standard and Datacenter
- Windows 8.1 (with Update 2919355): Basic, Professional, and Enterprise
- Windows Server 2012 R2 (with Update 2919355): Essentials, Standard, Datacenter
- Windows 7 SP1 (with latest Windows Updates): Home Premium, Professional, Enterprise, Ultimate

However, the requirements for "Docker for Windows" are more restrictive:

https://docs.docker.com/docker-for-windows/install/#download-docker-for-windows

**Why is Windows 10 required?**

Docker for Windows uses Windows Hyper-V. While older Windows versions have Hyper-V, their Hyper-V implementations lack features critical for Docker for Windows to work.

Docker for Windows requires 64-bit Windows 10 Pro, Enterprise, or Education (1511 November update, build 10586 or later) and Microsoft **Hyper-V**.
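
The edition, build and 64-bit requirements above can be checked with a short script. This is a minimal sketch, assuming Python 3.8+ (for `platform.win32_edition()`) and assuming the edition strings it reports for the supported editions are `Professional`, `Enterprise` and `Education`; `docker_for_windows_supported` is a hypothetical helper, and it does not check whether Hyper-V is actually enabled.

```python
import platform

# Values taken from the Docker for Windows requirement above. The edition
# strings are an assumption about what platform.win32_edition() reports.
SUPPORTED_EDITIONS = {"Professional", "Enterprise", "Education"}
MIN_BUILD = 10586  # Windows 10 version 1511 (November update)


def docker_for_windows_supported(edition: str, version: str, machine: str) -> bool:
    """Check edition, OS version string (e.g. '10.0.17134') and CPU architecture."""
    parts = version.split(".")
    if len(parts) < 3 or parts[0] != "10" or not parts[2].isdigit():
        return False  # not Windows 10, e.g. '6.3.9600' is Windows 8.1
    is_64bit = machine.upper() in {"AMD64", "X86_64"}
    return edition in SUPPORTED_EDITIONS and int(parts[2]) >= MIN_BUILD and is_64bit


if __name__ == "__main__" and platform.system() == "Windows":
    ok = docker_for_windows_supported(
        platform.win32_edition(),  # Python 3.8+
        platform.win32_ver()[1],   # version string such as '10.0.17134'
        platform.machine(),
    )
    print("Docker for Windows prerequisites met (Hyper-V not checked):", ok)
```

Home editions (reported as `Core`) and builds older than 10586 are rejected, matching the requirement stated above.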

# Mac dev machine

## Recommended Hardware requirements:

- 16 GB of RAM: since you run a VM on the Mac with the Linux Docker host, and the solution also runs a SQL Server container and a Redis container, 8 GB of RAM might not be enough.

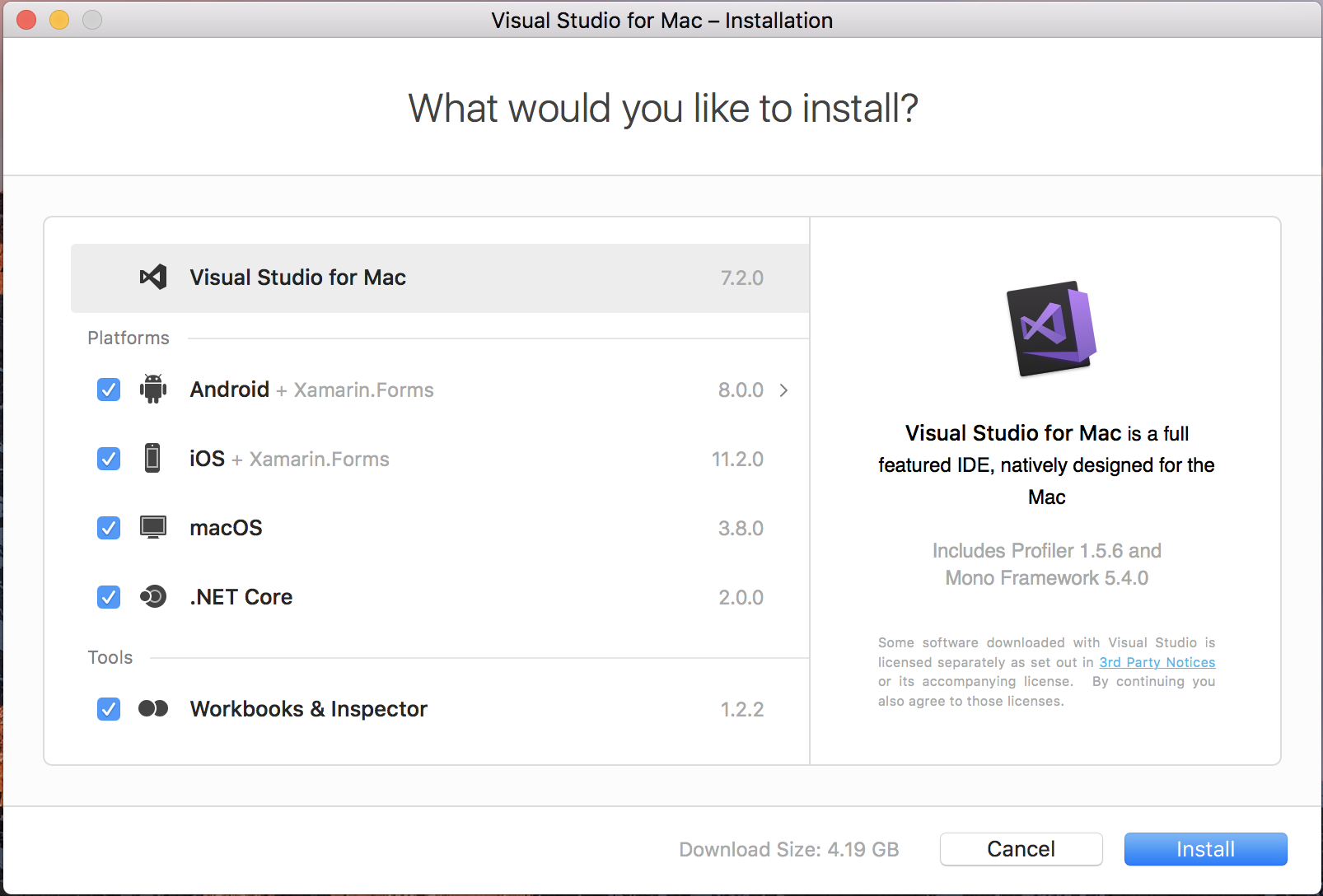

## Software requirements for Mac are:

- Docker Community Edition for Mac (aka Docker for Mac): requires OS X El Capitan 10.11 or a newer macOS.
- .NET Core 2.1 SDK for Mac
- (Optional) Visual Studio for Mac
- (Optional) Visual Studio Code for Mac

Docker for Mac requires OS X El Capitan 10.11 or a newer macOS release running on a 2010 or newer Mac, with Intel's hardware support for MMU virtualization.
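
Both Mac requirements can be checked from a script. This is a minimal sketch, assuming Python 3.7+; `parse_version`, `macos_supported` and `hypervisor_supported` are hypothetical helpers. It relies on `platform.mac_ver()` for the OS version and on the `kern.hv_support` sysctl, which reports `1` when the CPU provides the hardware virtualization support Docker for Mac needs.

```python
import platform
import subprocess

MIN_MACOS = (10, 11)  # OS X El Capitan


def parse_version(text: str) -> tuple:
    """Turn '10.13.6' into (10, 13, 6); missing parts count as 0."""
    parts = [int(p) for p in text.split(".") if p.isdigit()]
    return tuple(parts + [0] * (3 - len(parts)))


def macos_supported(version: str) -> bool:
    # Compare only (major, minor) against the 10.11 minimum.
    return parse_version(version)[:2] >= MIN_MACOS


def hypervisor_supported() -> bool:
    # 'sysctl -n kern.hv_support' prints 1 when hardware virtualization is available.
    out = subprocess.run(["sysctl", "-n", "kern.hv_support"],
                         capture_output=True, text=True)
    return out.stdout.strip() == "1"


if __name__ == "__main__" and platform.system() == "Darwin":
    print("macOS >= 10.11:", macos_supported(platform.mac_ver()[0]))
    print("Hypervisor support:", hypervisor_supported())
```

The "2010 or newer Mac" part of the requirement is not checked directly; the sysctl result is the practical proxy for it.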

## Questions

[QUESTION] Answer +1 if the solution is working for you (through VS 2017 or the CLI environment):

https://github.com/dotnet/eShopOnContainers/issues/107

- [Windows setup](Windows-setup)
- [Mac setup](Mac-setup)


# Superseded

## Related readme files (use them for more information after reading this)

* [https://github.com/dotnet-architecture/eShopOnContainers/blob/dev/branch-guide.md](https://github.com/dotnet-architecture/eShopOnContainers/blob/dev/branch-guide.md): Branches used and their purpose. Any branch not listed in this file is "temporary" and "unsupported" and can be deleted at any time.

## Version 2.1 (Current version based on .NET Core 2.1)

eShopOnContainers v2.1 is evolving in the DEV and MASTER branches.

Features supported in eShopOnContainers v2.1 are the features supported in v2.0 listed below, plus the following added features:

- Use of HttpClientFactory with Polly integration for resilient HTTP communication (retries with exponential backoff and circuit breakers).
- Real-time communication: a SignalR Hub microservice/container for real-time communication. Scenario: notification of changes to an order's status. This is new, now that SignalR is provided in ASP.NET Core 2.1.
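
The retry-with-exponential-backoff idea behind the Polly integration above can be sketched in a few lines. This is a minimal Python illustration, not Polly's API: `backoff_delays` and `call_with_retry` are hypothetical names, and the 2, 4, 8 second schedule is an assumed example. It catches every exception for brevity; a real policy would retry only transient failures (timeouts, HTTP 5xx) and would usually be combined with a circuit breaker.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int, base: float = 2.0) -> List[float]:
    """Wait times before each retry: base**1, base**2, ... (2, 4, 8 s for base=2)."""
    return [base ** attempt for attempt in range(1, retries + 1)]


def call_with_retry(operation: Callable[[], T], retries: int = 3,
                    base: float = 2.0,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call operation(); after each failure wait the next backoff delay and try
    again, for up to `retries` retries. The final failure propagates."""
    for delay in backoff_delays(retries, base):
        try:
            return operation()
        except Exception:  # sketch only: real code should retry transient errors only
            sleep(delay)
    return operation()
```

Passing `sleep` in as a parameter keeps the sketch testable without real waiting; doubling the wait after each failure gives a struggling service progressively more time to recover instead of being hammered by immediate retries.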

## Version 2 (Based on .NET Core 2.0)

- Docker containers with .NET Core, Linux images and Windows containers supported and tested.
- .NET Core 2.0 and EF Core 2.0 support.
- Visual Studio 2017, including VS Docker Tooling based on docker-compose.yml files supporting multi-container debugging, etc. CLI and VS Code environments are also supported.
- CLI build process using a Docker ASP.NET Core build image (microsoft/aspnetcore-build) with all the needed SDKs, so the compilation takes place in the same container recommended for your CI (Continuous Integration) pipeline. No need to have all dependencies on the dev machine when using this method. [Using this CI docker-compose file](https://github.com/dotnet/eShopOnContainers/blob/master/docker-compose.ci.build.yml).
- Microservice-oriented architecture, easy to get started, described in this <a href='https://aka.ms/microservicesebook'>Guide/eBook</a>.
- Implementation of the [API Gateway and BFF (Backend-For-Frontend) patterns](http://microservices.io/patterns/apigateway.html), so you can filter and publish simplified APIs and URIs and apply additional security in that tier while hiding/securing the internal microservices from the client apps or outside consumers. These sample API Gateways in eShopOnContainers are based on [Ocelot](https://github.com/ThreeMammals/Ocelot), an OSS lightweight API Gateway solution explained [here](http://threemammals.com/ocelot).
- Support for Windows Containers running on Windows Server Nano, using different Docker base images instead of the Linux-based images.
- INTEGRATION EVENTS with Event Bus implementations: implement event-driven communication between microservices/containers based on Event Bus interfaces and two implementations:
  1. (Implemented as PoC) Standalone Pub/Sub messaging implementation based on an out-of-proc RabbitMQ container.
  2. (Future version) Azure-attached implementation based on Azure Service Bus using Topics for Pub/Sub.

  Two integration event scenarios to implement in the app:
  1. Simple (higher priority): change Product info (name, image URL, etc.) in the Catalog and update it in the existing Orders and Baskets (everything except the price).
  2. (Future version) Complex: events propagating an Order's state changes related to the Order-Process SAGA (InProcess, Paid, Handling, Shipped, Canceled if timeout because it was not paid, etc.).

- DOMAIN EVENTS: implement Domain Events, which are related to but not the same as integration events for inter-microservice communication. Domain Events are initially intended to be used within a single microservice's Domain, like communicating state changes between Aggregates, although they could lead to Integration Events if what happened in a microservice's domain should impact other microservices.

  SCENARIOS IMPLEMENTED:
  1. Check price change basket vs. catalog when converting to Order: https://github.com/dotnet/eShopOnContainers/issues/38
  2. Multiple AGGREGATE changes within the same Command-Handler, decoupled by domain events.
- Resilient communication: resilient synchronous HTTP communication with retry loops with exponential backoff and circuit-breaker pattern implementations to avoid DDoS initiated by clients. Implemented with the Polly OSS library: https://github.com/App-vNext/Polly/
- Idempotent updates at microservices, so the same update (like OrderCreation) cannot be executed multiple times. The server must implement operations idempotently: an operation is idempotent if it gets the same result when performed multiple times. Implementing idempotency is domain-specific.
- Exception handling: ASP.NET middleware extension with Business-Exceptions + Generic-Exception-Handler (ExceptionHandlerHandler).
- Command validations with a MediatR decorator using FluentValidation: https://github.com/JeremySkinner/FluentValidation
- HEALTHCHECKS / Health Check Library (Preview) from the ASP.NET team. It provides: a model of health check results, a middleware to return ok/bad, and a polling service calling the health check service and publishing results (open/pluggable to orchestrators, App Insights, etc.).
- Legacy ASP.NET WebForms client running in a Windows Container (Catalog Manager/Simple CRUD maintenance), consuming the same Catalog microservice. This is an example of a "lift and shift" scenario.
- Monolithic ASP.NET MVC app (just a web app, no microservices) with the public area of the eShop (basically, the home page with catalog-view functionality):
  - Running as a container: https://github.com/dotnet/eShopOnContainers/tree/master/src/Web/WebMonolithic
  - More advanced layered web app: available as a separate project at https://github.com/dotnet/eShopOnWeb. This app does not use Docker containers, just a plain web app.
- Additional microservices (Marketing and Location microservices) with data stored in MongoDB containers or Azure Cosmos DB.
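
The publish/subscribe shape behind the integration events feature above can be illustrated with an in-memory bus. This is a hypothetical Python sketch, not the eShopOnContainers Event Bus code: `InMemoryEventBus`, `ProductNameChanged` and the `baskets` dictionary are made-up names, and dispatch here is synchronous and in-process, whereas the RabbitMQ and Azure Service Bus implementations deliver events asynchronously between containers. It models the "simple" scenario: a product name changed in the Catalog is propagated to existing Baskets.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, DefaultDict, List


@dataclass
class IntegrationEvent:
    # A unique id per event lets subscribers detect duplicate deliveries.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class ProductNameChanged(IntegrationEvent):  # hypothetical event type
    product_id: int = 0
    new_name: str = ""


Handler = Callable[[IntegrationEvent], None]


class InMemoryEventBus:
    """Synchronous, in-process stand-in for a broker-backed event bus."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[type, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: IntegrationEvent) -> None:
        # Only handlers registered for the exact event type are invoked.
        for handler in list(self._handlers.get(type(event), [])):
            handler(event)


# Basket-side state (hypothetical): owner -> {product_id: product_name}
baskets = {"alice": {1: "Mug"}, "bob": {2: "T-shirt"}}


def on_product_name_changed(event: ProductNameChanged) -> None:
    for items in baskets.values():
        if event.product_id in items:
            items[event.product_id] = event.new_name


bus = InMemoryEventBus()
bus.subscribe(ProductNameChanged, on_product_name_changed)
bus.publish(ProductNameChanged(product_id=1, new_name="Coffee mug"))
print(baskets)  # {'alice': {1: 'Coffee mug'}, 'bob': {2: 'T-shirt'}}
```

Coding the Catalog and Basket sides against `subscribe`/`publish` only is what lets a broker-backed implementation replace the in-memory one without touching either side.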

## Deploying to Azure

|

||||

<img src="img/exploring-to-production-ready.png">

|

||||

|

||||

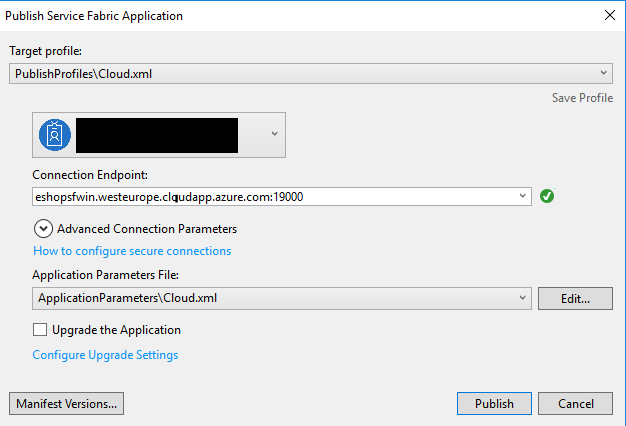

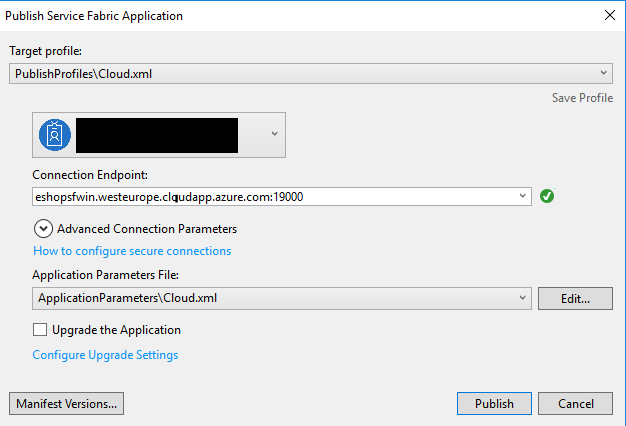

- Deployment/support into Kubernetes in ACS (Linux containers tested)

|

||||

|

||||

- Deployment/support into Azure Service Fabric (Windows Containers and Linux Containers).

|

||||

|

||||

- Azure Storage Blob: Using Azure Blobs to store the Product Images instead of plain files in folders

|

||||

|

||||

- Azure Functions: Azure Function microservice (The Marketing feature has an Azure function returning the Marketing Campaign content)

|

||||

|

||||

- DevOps: eShopOnContainers scripts/procedures of CI/CD pipelines in Visual Studio Team Services

|

||||

|

||||

## Previous version 1.0 (Based on .NET Core 1.1)

This is an older version supporting .NET Core 1.1, tagged as v1.0 and available here:

https://github.com/dotnet-architecture/eShopOnContainers/releases/tag/netcore1.1

# VNext

Other possible features, to be evaluated for the backlog:

- Helm support for Kubernetes deployment and Azure Dev Spaces compatibility.
- Support for Service Fabric Mesh (codename "SeaBreeze").
- Manage secrets with Azure Key Vault.
- Split Unit Test projects and distribute them across microservices, so each microservice "owns" its own tests.
- Implement a more advanced versioning system based on [aspnet-api-versioning](https://github.com/Microsoft/aspnet-api-versioning) or a comparable system. Current API versioning is very basic, simply based on the URLs.
- Implement more advanced logging, such as using Serilog (https://serilog.net/) or another selected approach.

- Azure Event Grid: implement an additional Event Bus implementation based on Azure Event Grid.
- Azure Functions integrated with Azure Event Grid: an additional event-driven Azure Function microservice (e.g. grabbing uploaded images, adding a watermark and putting them into Azure blobs). The notification would come from Azure Event Grid when any image is uploaded into BLOB storage.
- Monitoring/diagnostics of microservices based on Application Insights with custom perfkeys.
- Service Fabric Stateful Service implementation in the SF branch.
- Gracefully stopping or shutting down microservice instances: implemented as an ASP.NET Core middleware in the ASP.NET Core pipeline, draining in-flight requests before stopping the microservice/container process.
- (To be confirmed) Support for .NET Core 2.0 Razor Pages as an additional client app.

- Security:
  - Encrypt secrets in configuration files (like in docker-compose.yml). Multiple possibilities: Azure Key Vault, simple certificates at container level, Consul, etc.
  - Other "secure-code" practices.
  - Encrypt communication with SSL (related to the specific cloud infrastructure being used).
  - Implement security best practices for the app's secrets (connection strings, env vars, etc.). However, this subject depends on the chosen orchestrator; for example, when using Swarm, see: https://blog.docker.com/2017/02/docker-secrets-management/

- Create a building block to handle idempotency in a generic way ([Issue 143](https://github.com/dotnet/eShopOnContainers/issues/143)).
- Implement an example of optimistic concurrency updates and optimistic concurrency exceptions.
- (To be confirmed) Nancy: add a Nancy-based microservice, also with DocDB, etc.
- (To be confirmed) Support other DataProtection providers such as AspNetCore.DataProtection.ServiceFabric.
- (To be confirmed) Guide on Kubernetes:
  - Possible "Getting started guide with local dev Kubernetes integrated into Docker for Windows and Mac".

- (To be confirmed) In the Windows Containers fork, implement and add a simple WCF microservice/container implementing some logic, like a simulated legacy Payment Gateway, as an example of a "lift and shift" scenario.
- (To be confirmed) Semantic logging, related to the Azure app version and Application Insights usage:
  - Monitor which microservices are up/down, etc., related to App Insights, but with custom events.
  - ETW events and the "Semantic Application Log" from P&P.
  - Multiple implementations for the storage of the events: Azure Diagnostics, Elasticsearch.
  - Using the EventSource base class, etc.

- (To be confirmed) Composite UI based on microservices, including the "UI per microservice" approach. References on Composite UI with microservices:
  - Composite UI using ASP.NET (Particular's Workshop): http://bit.ly/particular-microservices
  - The Monolithic Frontend in the Microservices Architecture: http://blog.xebia.com/the-monolithic-frontend-in-the-microservices-architecture/
  - The secret of better UI composition: https://particular.net/blog/secret-of-better-ui-composition
  - Including Front-End Web Components Into Microservices: https://technologyconversations.com/2015/08/09/including-front-end-web-components-into-microservices/
  - Managing Frontend in the Microservices Architecture: http://allegro.tech/2016/03/Managing-Frontend-in-the-microservices-architecture.html

- Enhance the domain logic for the Order root aggregate. Item stock validation is already implemented (it cancels the order when the quantity is not enough), but additional features could be added; check [issue #5](https://github.com/dotnet-architecture/eShopOnContainers/issues/5).
- Support "multiple redirect URLs" for the STS container based on IdentityServer4; check [issue #113](https://github.com/dotnet-architecture/eShopOnContainers/issues/113).
- Add proper handling of the Authentication Token lifetime; check [issue #118](https://github.com/dotnet-architecture/eShopOnContainers/issues/118) for details.
- Refactor/improve Polly's resilient code; check [issue #177](https://github.com/dotnet-architecture/eShopOnContainers/issues/177) for details.
- Add a jitter strategy to the Retry policy; check [issue #188](https://github.com/dotnet-architecture/eShopOnContainers/issues/188) for details.
- Increase the resilience of the RequestProvider class in the mobile app; check [issue #206](https://github.com/dotnet-architecture/eShopOnContainers/issues/206) for details.
- Investigate using the OIDC library to communicate with IdentityServer from the Xamarin client; check [issue #215](https://github.com/dotnet-architecture/eShopOnContainers/issues/215) for details.
- Replace the WebView in the Xamarin client with device web browsers; check [issue #216](https://github.com/dotnet-architecture/eShopOnContainers/issues/216) for details.
- Consider using Bash instead of PowerShell scripts; check [issue #228](https://github.com/dotnet-architecture/eShopOnContainers/issues/228) for details.
- Improve the app startup time of the Xamarin client; check [issue #231](https://github.com/dotnet-architecture/eShopOnContainers/issues/231) for details.
- Add social login to the MVC and SPA apps; check [issue #475](https://github.com/dotnet-architecture/eShopOnContainers/issues/475) for details.
- Create a new "ServerProblemDetails" response that conforms to RFC 7807; check [issue #602](https://github.com/dotnet-architecture/eShopOnContainers/issues/602) for details.
- Include some guidance on testing in CI/CD pipelines; check [issue #549](https://github.com/dotnet-architecture/eShopOnContainers/issues/549) for details.
- Encrypt sensitive information, such as the credit card number, along the ordering process; check [issue #407](https://github.com/dotnet-architecture/eShopOnContainers/issues/407).
- Fix the naming inconsistency in EventBus projects and namespaces: they should be "EventBus.RabbitMQ" and "EventBus.ServiceBus"; check [issue #943](https://github.com/dotnet-architecture/eShopOnContainers/issues/943).
- Create a load testing alternative that's not dependent on the about-to-be-deprecated load testing feature of VS Enterprise; see [issue #950](https://github.com/dotnet-architecture/eShopOnContainers/issues/950) for more details.
- Revamp the UI to a more modern look, considering semantically correct HTML; check [issue #1017](https://github.com/dotnet-architecture/eShopOnContainers/issues/1017).
- Use the JSON:API specification for implementing APIs in eShopOnContainers; check [issue #1064](https://github.com/dotnet-architecture/eShopOnContainers/issues/1064).
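
Both the idempotent-updates feature and the generic idempotency building block listed above come down to remembering which client requests were already processed. The following is a hypothetical Python sketch (`IdempotentExecutor` is not an eShopOnContainers type): the client sends a unique request id with each command, and a repeated id returns the stored result instead of running the update again. The in-memory dictionary is an assumption for brevity; a real service would persist the ids, ideally in the same transaction as the update itself.

```python
import threading
from typing import Callable, Dict, Generic, TypeVar

R = TypeVar("R")


class IdempotentExecutor(Generic[R]):
    """Run each client request at most once, keyed by a client-supplied request id."""

    def __init__(self) -> None:
        self._results: Dict[str, R] = {}
        self._lock = threading.Lock()

    def execute(self, request_id: str, operation: Callable[[], R]) -> R:
        with self._lock:
            # A repeated request id returns the first result without re-running.
            if request_id in self._results:
                return self._results[request_id]
            result = operation()  # if this raises, nothing is recorded
            self._results[request_id] = result
            return result
```

Holding the lock while the operation runs guarantees that two concurrent duplicates cannot both execute, at the cost of serializing all requests. Because a failed operation stores nothing, the client can safely retry with the same id.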

## Sending feedback and pull requests

We'd appreciate your feedback, improvements and ideas.

You can create new issues in the issues section, submit pull requests and/or send emails to eshop_feedback@service.microsoft.com

This wiki page has been superseded by:

- [Roadmap](Roadmap)
- [Backlog](Backlog)


## Want to try it out from Visual Studio 2017?

## IMPORTANT NOTE ON VISUAL STUDIO 2017 VERSION NEEDED!

**The currently supported Visual Studio version for eShopOnContainers is Visual Studio 2017 15.8** or a later version.

Also, make sure you have the latest '.NET Core 2.2 SDK' from: https://www.microsoft.com/net/download

Main steps to run it in Visual Studio:

```
- git clone https://github.com/dotnet-architecture/eShopOnContainers.git
- Open solution eShopOnContainers-ServicesAndWebApps.sln with Visual Studio 2017
- Set the VS startup project to the "docker-compose" project
- Hit F5! (Or Ctrl+F5 for a faster start up)
```

**NOTE:** For the authentication based on the STS (Security Token Service) to work properly and be accessible from remote client apps like the Xamarin mobile app, you also need to open the ports in your firewall as specified in the procedure below.

For further instructions, especially if this is the first time you are going to try .NET Core on Docker, see the detailed instructions below.

---------------------------------------------------------------------------------

## Detailed procedure: Setting eShopOnContainers up in a Visual Studio 2017 development machine

Visual Studio 2017 provides built-in Docker Tools with features like:

* Docker-compose support
* Multi-container debugging, supporting true microservice scenarios
* Linux Docker Containers (usually for .NET Core apps)
* Windows Docker Containers (usually for .NET Framework apps)

So, here's how to set up a VS 2017 environment where you can test eShopOnContainers.

### GitHub branch to use

By default, use the DEV branch, which has the latest changes and testing.

The MASTER branch is also an option, but it'll usually be less up to date while we keep evolving the application.

### Software requirements

Software installation requirements for a Windows dev machine with Visual Studio 2017 and Docker for Windows:

- <a href='https://docs.docker.com/docker-for-windows/install/'>Docker for Windows</a> with the specific configuration described below.
- <a href='https://www.visualstudio.com/vs/'>Visual Studio 2017 version 15.5</a> (minimum version) with the workloads specified below.
- NPM and related dependencies for running the SPA Web app. <a href='https://github.com/dotnet/eShopOnContainers/wiki/06.-Setting-the-Web-SPA-application-up'>Setup process described here</a>

### Installing and configuring Docker in your development machine

#### Install Docker CE for Windows

Install Docker CE for Windows (the Stable channel should suffice) from this page: https://docs.docker.com/docker-for-windows/install/

For further info on Docker for Windows, check this additional page:

https://docs.docker.com/docker-for-windows/

Docker for Windows uses Hyper-V to run a Linux VM, which is the default Docker host. If you don't have Hyper-V installed/enabled, it'll be installed and you will probably need to reboot your machine. Docker's setup should warn you about it, though.

**IMPORTANT**: Check that you don't have any other hypervisor installed that might not be compatible with Hyper-V. For instance, Intel HAXM can be installed by VS 2017 if you choose to install Google's Android emulator, which works on top of Intel HAXM. In that case, you'd need to uninstall Google's Android emulator and Intel HAXM.

VS 2017 recommends installing the Google Android emulator because it is the only Android emulator with support for Google Play Store, Google Maps, etc. However, take into account that it currently is not compatible with Hyper-V, so you might run into incompatibilities in this scenario.

#### Set the memory and CPU assigned to Docker

By default, the development environment of eShopOnContainers runs 1 instance of SQL Server as a container with multiple databases (one DB per microservice), 6 additional ASP.NET Core apps/services each running as a container, plus 1 Redis server running as a container. Therefore, especially because of SQL Server's memory requirements, it is important to set Docker up properly with enough RAM and CPU assigned to it, or you will get errors when starting the containers with VS 2017 or "docker-compose up".

Once Docker for Windows is installed on your machine, open its Settings and the Advanced menu option so you can adjust it to the minimum amount of memory and CPU (Memory: around 4096 MB and CPU: 3), as shown in the image. You might need a 16 GB machine for this configuration if you also want to run the Android emulator for the Xamarin app, or multiple applications demanding significant memory at the same time. If you have a less powerful machine, you can try a lower configuration and/or not start certain containers, like the basket and Redis. But if you don't start all the containers, the application will not fully function, of course.

<img src="img/docker_settings.png">
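
To confirm that the memory and CPU settings took effect, you can ask the Docker daemon what it sees. This is a sketch assuming Python 3.7+ and the Docker CLI on the PATH; `MemTotal` and `NCPU` are fields available to `docker info --format`, while the helper names and the 256 MB tolerance are assumptions (the Linux VM typically reports somewhat less memory than the value set in Settings).

```python
import shutil
import subprocess
from typing import Tuple

MIN_MEMORY_MB = 4096  # "around 4096 MB" from the Docker settings above
MIN_CPUS = 3


def parse_docker_info(output: str) -> Tuple[int, int]:
    """Parse '<MemTotal bytes> <NCPU>' into (memory in MB, CPU count)."""
    mem_bytes, ncpu = output.split()
    return int(mem_bytes) // (1024 * 1024), int(ncpu)


def meets_minimum(mem_mb: int, ncpu: int, tolerance_mb: int = 256) -> bool:
    # Assumed tolerance: the VM reports a bit less RAM than configured.
    return mem_mb >= MIN_MEMORY_MB - tolerance_mb and ncpu >= MIN_CPUS


def main() -> None:
    result = subprocess.run(
        ["docker", "info", "--format", "{{.MemTotal}} {{.NCPU}}"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        print("Could not query Docker:", result.stderr.strip())
        return
    try:
        mem_mb, ncpu = parse_docker_info(result.stdout)
    except ValueError:
        print("Unexpected docker info output:", result.stdout.strip())
        return
    print(f"Docker host: {mem_mb} MB, {ncpu} CPUs, "
          f"meets minimum: {meets_minimum(mem_mb, ncpu)}")


if __name__ == "__main__" and shutil.which("docker"):
    main()
```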

#### Share drives in Docker settings (in order to deploy and debug with Visual Studio 2017)

(Note: this is not required if running from the Docker CLI with docker-compose up and using VS 2015 or any other IDE or editor.)

In order to deploy/debug from Visual Studio 2017, you'll need to share the drives from Settings -> Shared Drives in the "Docker for Windows" configuration.

If you don't do this, you will get an error when trying to deploy/debug from VS 2017, like "Cannot create container for service yourApplication: C: drive is not shared".

The drive you'll need to share depends on where you place your source code.

<img src="img/docker_settings_shared_drives.png">
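
Since the drive to share is the drive letter of the folder where you cloned the repository, it can be derived from the path. A tiny sketch using the standard `pathlib.PureWindowsPath` class; `drive_to_share` is a hypothetical helper, and it deliberately rejects relative and UNC network paths because the Shared Drives list is expressed as drive letters.

```python
from pathlib import PureWindowsPath


def drive_to_share(source_path: str) -> str:
    """Return the drive letter (e.g. 'D:') to share in Docker for Windows
    for a solution cloned at source_path."""
    drive = PureWindowsPath(source_path).drive
    if not drive.endswith(":"):  # '' for relative paths, '\\server\share' for UNC
        raise ValueError(f"not a drive-letter path: {source_path!r}")
    return drive.upper()
```

For example, a clone in `D:\src\eShopOnContainers` means the `D:` drive must be shared, even if the Docker installation itself lives on `C:`.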

### IMPORTANT: Open ports in local Firewall so Authentication to the STS (Security Token Service container) can be done through the 10.0.75.1 IP which should be available and already setup by Docker. Also needed for client remote apps like Xamarin app or SPA app in remote browser.

|

||||

|

||||

- You can manually create a rule in your local firewall in your development machine or you can also create that rule by just executing the <b>add-firewall-rules-for-sts-auth-thru-docker.ps1</b> script available in the solution's **cli-windows** folder.

|

||||

- Basically, you need to open the ports 5100 to 5110 that are used by the solution by creating an IN-BOUND RULE in your firewall, as shown in the screenshot below (for Windows).

|

||||

|

||||

<img src="img/firewall-rule-for-eshop.png">

|

||||

|

||||

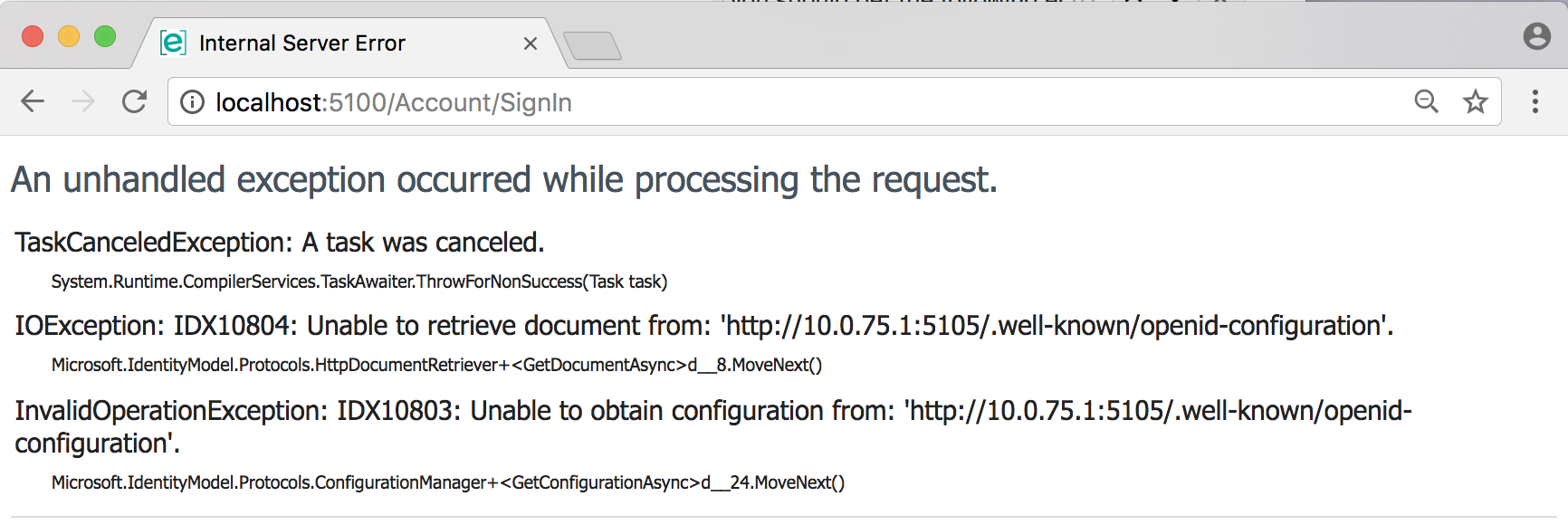

- **NOTE:** If you get the error **Unable to obtain configuration from: `http://10.0.75.1:5105/.well-known/openid-configuration`** you might need to allow to the program `vpnkit` for connections to and from any computer through all ports (see [issue #295](https://github.com/dotnet-architecture/eShopOnContainers/issues/295#issuecomment-327973650).

|

||||

|

||||
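
A quick way to see which of the ports 5100 to 5110 (including the STS port 5105 from the note above) accept connections is a small TCP probe. This is a minimal Python sketch; `open_ports` is a hypothetical helper, and it only shows that something is listening and reachable from where you run it. Traffic from the dev machine to its own addresses may not pass through the inbound rule, so to really exercise the firewall, run it from another device (for example, the one running the Xamarin app) while the containers are up.

```python
import socket

DOCKER_HOST_IP = "10.0.75.1"  # Docker for Windows host IP mentioned above
ESHOP_PORTS = range(5100, 5111)  # 5100 to 5110 inclusive


def open_ports(host: str, ports, timeout: float = 0.5) -> list:
    """Return the ports on `host` that accept a TCP connection."""
    reachable = []
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                reachable.append(port)
        except OSError:
            pass  # refused, filtered by a firewall, or timed out
    return reachable


if __name__ == "__main__":
    print("Reachable eShop ports:", open_ports(DOCKER_HOST_IP, ESHOP_PORTS))
```

A port that is missing from the result while its container is running points at a firewall rule (or the `vpnkit` permission above) rather than at the application.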

### Installing and configuring Visual Studio 2017 in your development machine

#### Install Visual Studio 2017

Run the VS 2017 setup file (latest RTM version, **Visual Studio 2017 15.5 or later**) and select the following workloads, depending on the apps you intend to test or work with:

##### Working only with the server side (Microservices and web applications) - Workloads

- ASP.NET and web development
- .NET Core cross-platform development
- Azure development (Optional): optional but recommended in case you want to deploy to Docker hosts in Azure or use any other infrastructure in Azure.

<img src="img/vs2017/vs2017_server_workload.png">

##### Working with the mobile app (Xamarin Mobile apps for iOS, Android and Windows UWP) - Workloads

If you also want to test/work with the eShopOnContainers model app based on Xamarin, you need to install the following additional workloads:

- Mobile development with .NET (Xamarin)
- Universal Windows Platform development
- .NET desktop development (Optional): this is not required, but useful in case you also want to run tests consuming the microservices from WPF or WinForms desktop apps.

<img src="img/vs2017/vs2017_additional_mobile_workloads.png">

IMPORTANT: As mentioned above, make sure you are NOT installing Google's Android emulator with the Intel HAXM hypervisor, or you will run into an incompatibility and Hyper-V won't work on your machine. Therefore, Docker for Windows won't work when trying to run the Linux host or any host with Hyper-V.

Make sure you are NOT selecting the options highlighted with red arrows below:

<img src="img/vs2017/xamarin-workload-options.png">

### Issue/Workarounds for "Visual Studio 2017 Tools for Docker" when there's a network proxy between your machine and Docker Hub on the Internet

After installing VS 2017 with Docker support, if you cannot debug properly and you are working from a corporate network behind a proxy, take into account the following issue and workarounds until this issue is fixed in Visual Studio:

https://github.com/dotnet-architecture/eShopOnContainers/issues/224#issuecomment-319462344

### Clone the eShopOnContainers code from GitHub

By default, clone the DEV branch, which is currently the default branch for accepting Pull Requests, etc. For example:

`git clone https://github.com/dotnet-architecture/eShopOnContainers.git`

**Note:** Remember that active development is done in the `dev` branch. To test the latest code, use this branch instead of `master`.

### Open eShopOnContainers solution, Build, Run

|

||||

|

||||

#### Open eShopOnContainers solution in Visual Studio 2017

|

||||

|

||||

- If testing/working only with the server-side applications and services, open the solution: **eShopOnContainers-ServicesAndWebApps.sln** (Recommended for most cases testing the containers and web apps)

|

||||

|

||||

- If testing/working either with the server-side applications and services plus the Xamarin mobile apps, open the solution: **eShopOnContainers.sln**

|

||||

|

||||

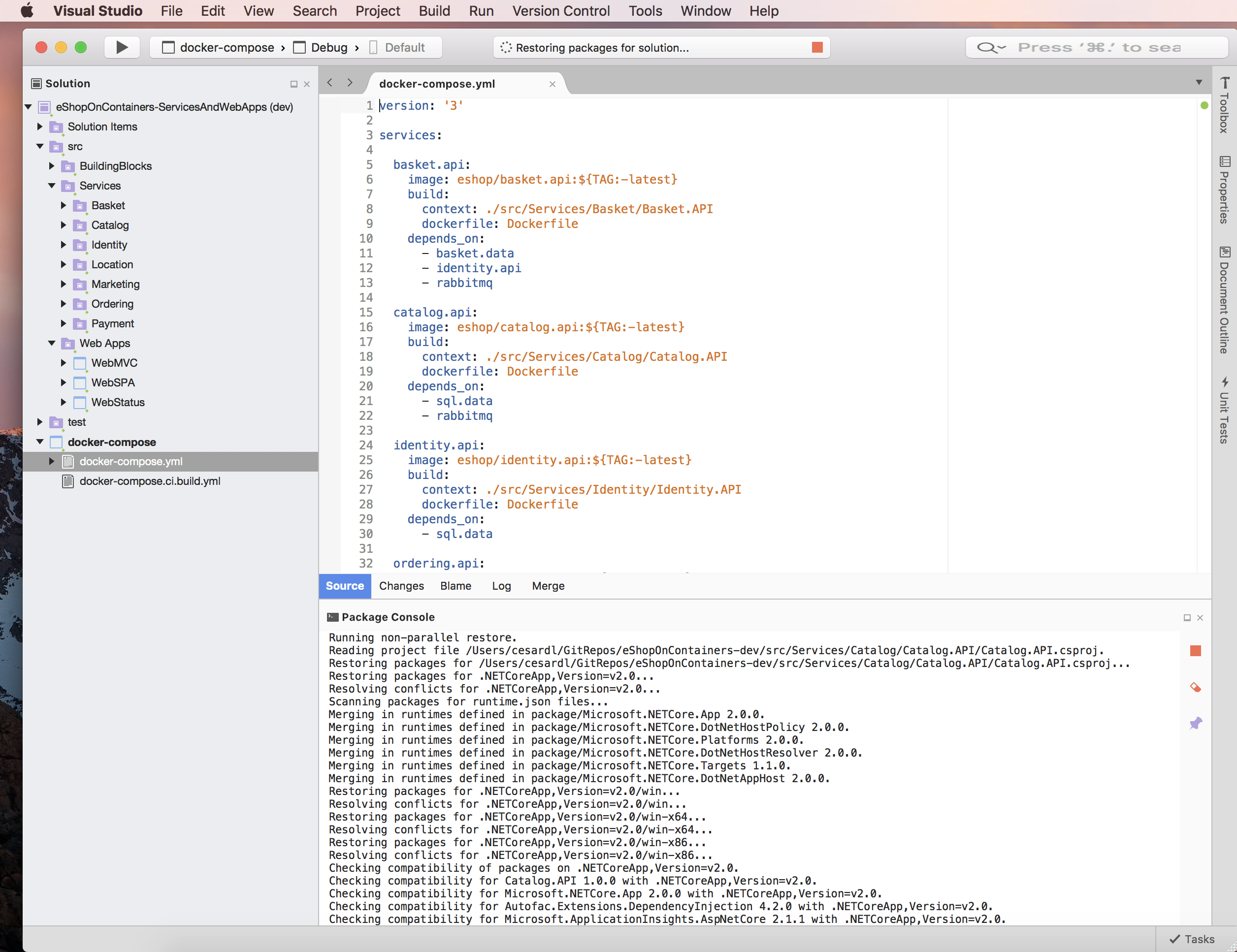

Below you can see the full **eShopOnContainers-ServicesAndWebApps.sln** solution (server side) opened in Visual Studio 2017:

|

||||

|

||||

<img src="img/vs2017/vs-2017-eshoponcontainers-servicesandwebapps-solution.png">

|

||||

|

||||

Note how VS 2017 loads the docker-compose.yml files in a special node-tree so it uses that configuration to deploy/debug all the containers configured, at the same time into your Docker host.

|

||||

|

||||

#### Build and run eShopOnContainers from Visual Studio 2017

##### Set docker-compose as the default StartUp project

**IMPORTANT**: If the **"docker-compose" project** is not your default startup project, right-click the "docker-compose" node and select the "Set as Startup Project" menu option, as shown below:

<img src="img/vs2017/set-docker-node-as-default.png">

At this point, after waiting some time for the NuGet packages to be restored, you should be able to build the whole solution, or even directly deploy/debug it into Docker by simply hitting F5 or pressing the debug "Play" button, which should now be labeled "Docker":

<img src="img/vs2017/debug-F5-button.png">

VS 2017 should compile the .NET projects, then create the Docker images, and finally deploy the containers in the Docker host (by default, your Linux VM in Docker for Windows).

Note that the first time you hit F5 it'll take more time, a few minutes at least, because in addition to compiling your bits, it needs to pull/download the base images (SQL Server for Linux image, Redis image, ASP.NET image, etc.) and register them in the local image repository of your PC. The next time you hit F5 it'll be much faster.

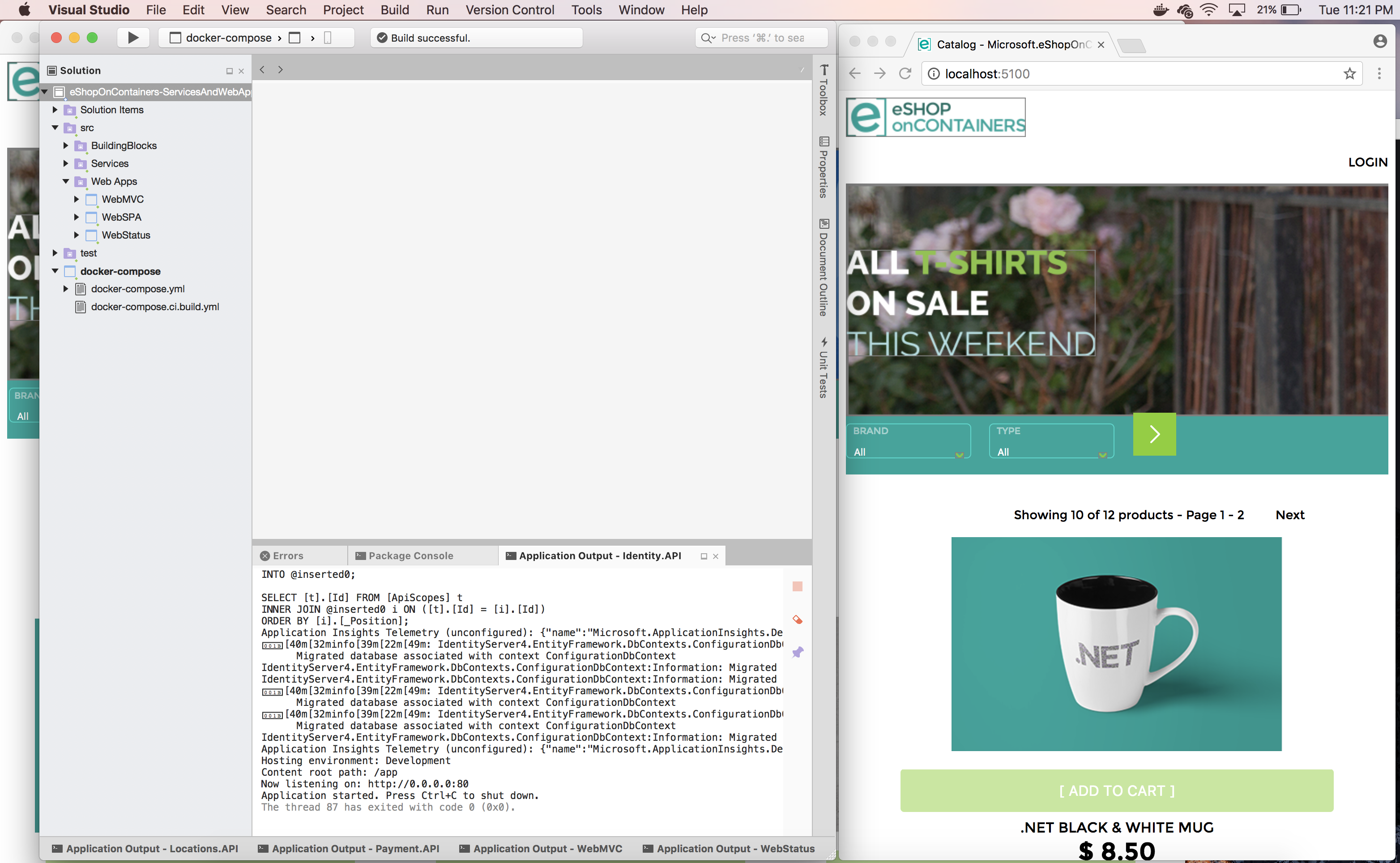

Finally, because the docker-compose configuration project is configured to open the MVC application, it should open your by default browser and show the MVC application with data coming from the microservices/containers:

|

||||

<img src="img/vs2017/vs2017-f5-with-eshoponcontainers-web-mvc-in-browser.png">

|

||||

|

||||

Here's how the docker-compose configuration project is configured to open the MVC application:

|

||||

|

||||

<img src="img/vs2017/docker-compose-properties.png">

|

||||

|

||||

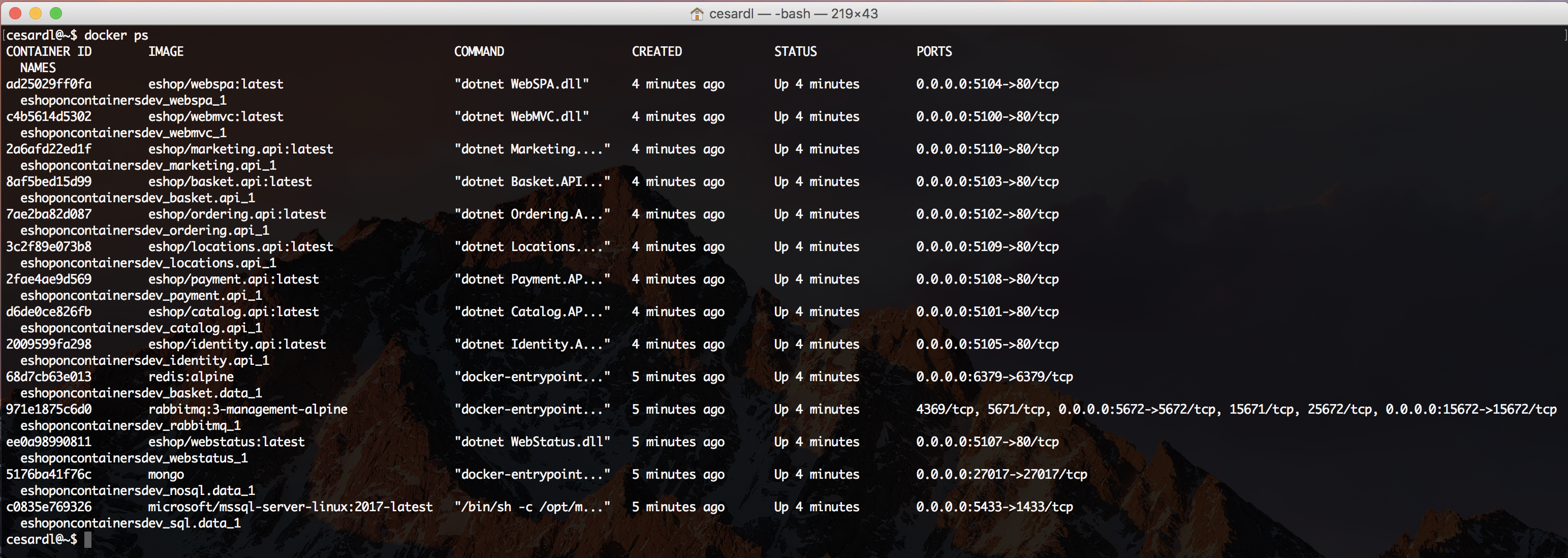

Finally, you can check out how the multiple containers are running in your Docker host by running the command **"docker ps"** like below:

|

||||

|

||||

<img src="img/vs2017/docker-ps.png">

|

||||

|

||||

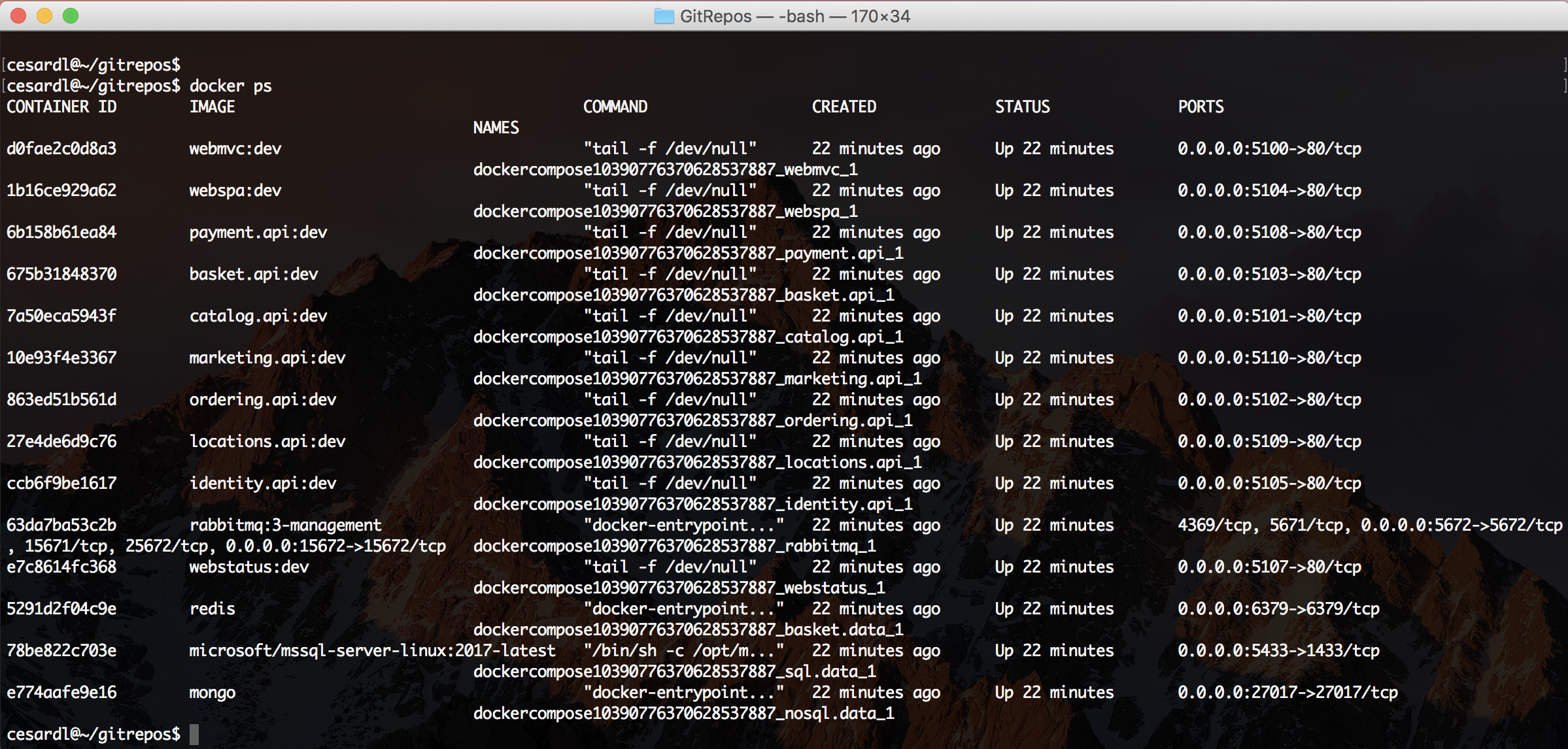

You can see the 8 containers are running and what ports are being exposed, etc.
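
If you want the same information in a script (for example, to check that all the containers came up), the following Python sketch runs `docker ps` with a Go-template `--format` string and splits each line into the container name and its port mappings. The function names are illustrative and not part of eShopOnContainers; it assumes the `docker` CLI is on your PATH.

```python
from __future__ import annotations

import subprocess


def parse_ps_lines(output: str) -> list[tuple[str, str]]:
    """Split 'name<TAB>ports' lines (produced by the --format template below) into tuples."""
    rows = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, _, ports = line.partition("\t")
        rows.append((name, ports))
    return rows


def list_running_containers() -> list[tuple[str, str]]:
    # .Names and .Ports are standard `docker ps` template placeholders.
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}\t{{.Ports}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_lines(result.stdout)


if __name__ == "__main__":
    for name, ports in list_running_containers():
        print(f"{name:40} {ports}")
```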

### Debug with several breakpoints across the multiple containers/projects

A very compelling and productive feature of VS 2017 is the ability to debug with breakpoints set across multiple containers/projects.

For instance, you could set a breakpoint in a controller within the MVC web app and a second breakpoint in a controller within the Catalog Web API microservice. Then refresh the browser (if the app is still running) or hit F5 again, and VS will stop within your microservices running in Docker, as shown below.

Breakpoint at the MVC app running as a Docker container in the Docker host:

<img src="img/vs2017/debugging-mvc-app.png">

Press F5 again...

Breakpoint at the Catalog microservice running as a Docker container in the Docker host:

<img src="img/vs2017/debugging-webapi-microservice.png">

And that's it! Visual Studio handles all the complexity under the covers, so you can simply hit F5 and debug a multi-container application.

### Test the SPA Web app

While the containers are running, open a browser, type `http://localhost:5104/` and hit Enter.

You should see the SPA application, as in the following screenshot:

<img src="img/eshop-webspa-app-screenshot.png">

<br>

### Test a microservice's Swagger interface (e.g. the Catalog microservice)

While the containers are running, open a browser, type `http://localhost:5101` and hit Enter.

You should see the Swagger page for that microservice, which allows you to test the Web API, as in the following screenshot:

<img src="img/swagger-catalog-1.png">

Then, after providing the page size (e.g. 10) and the current page (e.g. 1) for the catalog data, you can run the service by hitting the "Try it out!" button and see the returned JSON data:

<img src="img/swagger-catalog-2.png">
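
You can make the same request without the Swagger UI. This minimal Python sketch assumes the Catalog API exposes the items list at `/api/v1/catalog/items` with `pageSize` and `pageIndex` query parameters. Those names are assumptions, so confirm the exact route and parameters on the Swagger page (including whether the first page is index 0 or 1) before relying on it.

```python
import json
import urllib.parse
import urllib.request

CATALOG_BASE = "http://localhost:5101"  # Catalog microservice port used on this page


def catalog_items_url(page_size: int, page_index: int, base: str = CATALOG_BASE) -> str:
    # ASSUMPTION: route and query-parameter names; check them in the Swagger UI.
    query = urllib.parse.urlencode({"pageSize": page_size, "pageIndex": page_index})
    return f"{base}/api/v1/catalog/items?{query}"


def fetch_catalog_page(page_size: int, page_index: int):
    """GET one page of catalog items and return the decoded JSON body."""
    url = catalog_items_url(page_size, page_index)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Same values as the Swagger example above.
    print(json.dumps(fetch_catalog_page(10, 1), indent=2)[:1000])
```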

<br>

----

### Testing all the applications and microservices

Once the containers are deployed, you should be able to access any of the services at the following URLs or connection strings from your dev machine:

- Web MVC: <a href="http://localhost:5100" target="top">http://localhost:5100</a>
- Web SPA: <a href="http://localhost:5104" target="top">http://localhost:5104</a> (Important: check how to set up the SPA app and its requirements before building the Docker images. Instructions at https://github.com/dotnet/eShopOnContainers/wiki/06.-Setting-the-Web-SPA-application-up)
- Catalog microservice: <a href="http://localhost:5101" target="top">http://localhost:5101</a> (Not secured)
- Ordering microservice: <a href="http://localhost:5102" target="top">http://localhost:5102</a> (Requires a token for authorization)
- Basket microservice: <a href="http://localhost:5103" target="top">http://localhost:5103</a> (Requires a token for authorization)
- Identity microservice: <a href="http://localhost:5105" target="top">http://localhost:5105</a>
- Orders database (SQL Server connection string): `Server=tcp:localhost,5432;Database=Microsoft.eShopOnContainers.Services.OrderingDb;User Id=sa;Password=Pass@word;`
- Catalog database (SQL Server connection string): `Server=tcp:localhost,5434;Database=CatalogDB;User Id=sa;Password=Pass@word`
- ASP.NET Identity database (SQL Server connection string): `Server=localhost,5433;Database=aspnet-Microsoft.eShopOnContainers;User Id=sa;Password=Pass@word`
- Basket data (Redis): listening at localhost:6379
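
To check all the HTTP endpoints above in one go, a small Python sketch like the following can help. It only reports whether each port answers and with which HTTP status. A 401 from the Ordering or Basket API still means the service is up, since those APIs require a token. The `SERVICES` map is copied from the list above; adjust it if your compose files map different ports.

```python
import urllib.error
import urllib.request

# Host ports taken from the list above.
SERVICES = {
    "Web MVC": "http://localhost:5100",
    "Catalog API": "http://localhost:5101",
    "Ordering API": "http://localhost:5102",
    "Basket API": "http://localhost:5103",
    "Web SPA": "http://localhost:5104",
    "Identity (STS)": "http://localhost:5105",
}


def probe(url: str, timeout: float = 5.0) -> str:
    """Return the HTTP status as text, or 'unreachable (...)' if nothing answered."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return str(resp.status)
    except urllib.error.HTTPError as err:
        # The service answered, just not with 2xx (e.g. 401 on the secured APIs).
        return str(err.code)
    except (urllib.error.URLError, OSError) as err:
        return f"unreachable ({err})"


if __name__ == "__main__":
    for name, url in SERVICES.items():
        print(f"{name:15} {url:25} {probe(url)}")
```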

|

||||

|

||||

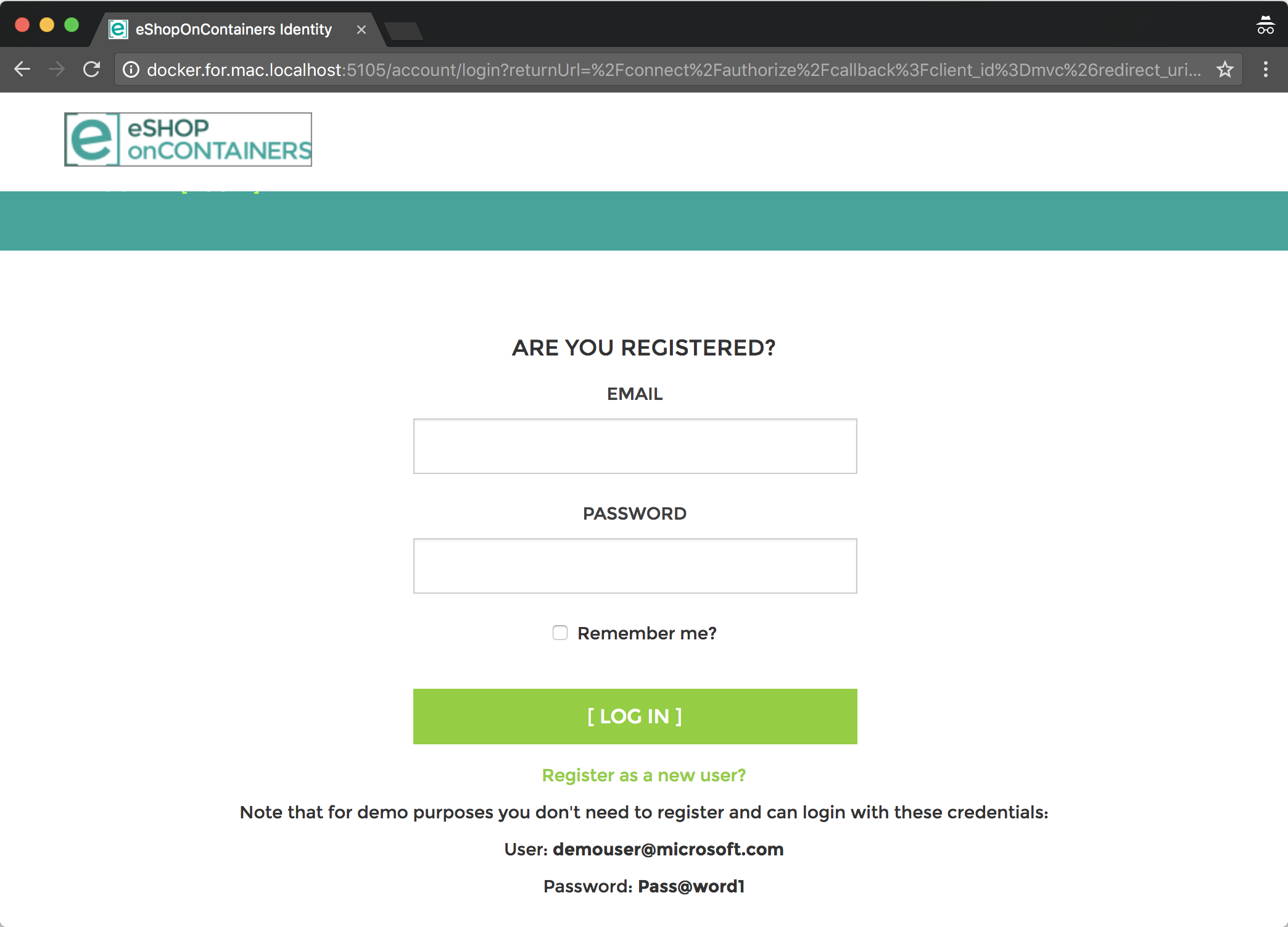

#### Creating and Order and Authenticating on the Web MVC application with the DemoUser@microsoft.com user account

|

||||

When you try the Web MVC application by using the url http://localhost:5100, you'll be able to test the home page which is also the catalog page. But if you want to add articles to the basket you need to login first at the login page which is handled by the STS microservice/container (Security Token Service). At this point, you could register your own user/customer or you can also use a convenient default user/customer named **demoUser@microsoft.com** so you don't need to register your own user and it'll be easier to test.

|

||||

The credentials for this demo user are:

|

||||

- User: **demouser@microsoft.com**

|

||||

- Password: **Pass@word1**

|

||||

|

||||

Below you can see the login page when providing those credentials.

|

||||

<img src="img/login-demo-user.png">

|

||||

|

||||

#### Trying the Xamarin.Forms mobile apps for Android, iOS and Windows

|

||||

You can deploy the Xamarin app to real iOS, Android or Windows devices.

|

||||

You can also test it on an Android Emulator based on Hyper-V like the Visual Studio Android Emulator (Do NOT install the Google's Android emulator or it will break Docker and Hyper-V, as mentioned above).

|

||||

|

||||

By default, the Xamarin app shows fake data from mock-services. In order to really access the microservices/containers in Docker from the mobile app, you need to:

|

||||

- Disable mock-services in the Xamarin app by setting the <b>UseMockServices = false</b> in the App.xaml.cs and specify the host IP in BaseEndpoint = "http://10.106.144.28" at the GlobalSettings.cs. Both files in the Xamarin.Forms project (PCL).

|

||||

- Another alternative is to change that IP through the app UI, by modifying the IP address in the Settings page of the App as shown in the screenshot below.

|

||||

- In addition, you need to make sure that the used TCP ports of the services are open in the local firewall. <img src="img/xamarin-settings.png">
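
The BaseEndpoint must be an address the device or emulator can reach, not `localhost`. The following Python sketch is one way to guess your dev machine's LAN IP: connecting a UDP socket sends no packets but makes the OS pick the outgoing interface. It is a heuristic and not part of the eShopOnContainers tooling; on machines with several adapters (VPN, Hyper-V virtual switches) verify the result with `ipconfig`.

```python
import socket


def guess_lan_ip(probe_host: str = "8.8.8.8") -> str:
    """Best-effort LAN IP of this machine; falls back to loopback if detection fails."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # A UDP "connect" only selects a route; no traffic is sent.
        s.connect((probe_host, 80))
        return s.getsockname()[0]
    except OSError:
        return "127.0.0.1"  # detection failed; a device cannot use this address
    finally:
        s.close()


def base_endpoint(ip: str) -> str:
    # Same shape as the example BaseEndpoint value, e.g. "http://10.106.144.28"
    return f"http://{ip}"


if __name__ == "__main__":
    print("BaseEndpoint =", base_endpoint(guess_lan_ip()))
```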

## Sending feedback and pull requests

We'd appreciate your feedback, improvements and ideas.

You can create new issues in the issues section, submit pull requests and/or send emails to eshop_feedback@service.microsoft.com

## Questions

[QUESTION] Answer +1 if the solution is working for you (through VS2017 or the CLI environment):

https://github.com/dotnet/eShopOnContainers/issues/107

# Superseded

This wiki page has been superseded by:

- [Windows setup](Windows-setup)

@ -1,277 +1,5 @@

## Related readme files (use them for more information after reading this)

* [https://github.com/dotnet-architecture/eShopOnContainers/blob/master/readme/readme-docker-compose.md](https://github.com/dotnet-architecture/eShopOnContainers/blob/master/readme/readme-docker-compose.md): All the docker-compose files we have and how to use them

## .NET Core SDK

Make sure you have the latest '.NET Core 2.1 SDK' installed from: https://www.microsoft.com/net/download

## Want to try it out from the CLI?

Main steps to run in the CLI command window:

```
git clone https://github.com/dotnet/eShopOnContainers.git
cd eShopOnContainers
docker-compose build
docker-compose up
# Alternatively, just run "docker-compose up": it builds the images first if they don't exist yet.
# Then, using a browser, try the MVC app at http://localhost:5100
```

NOTE: For the STS (Security Token Service) based authentication to work properly, and to allow access from remote client apps such as the Xamarin mobile app, you also need to open the ports in your firewall, as described in the procedure below.

For further instructions, especially if this is your first time trying .NET Core on Docker, see the detailed procedure below. It is also important for getting the SPA (Single Page Application) to work, as there are some considerations (npm install, etc.) when using NPM from both Windows and Linux.

--------------------------------------------------------------------

# Detailed procedure - Setting eShopOnContainers up in a CLI and Windows based development machine

This CLI environment means that you build and run by using the CLI (command-line interface) available in .NET Core and the Docker CLI.

You don't need Visual Studio 2017 for this environment; you can use any code editor, such as Visual Studio Code, Sublime, etc. Of course, you can still use VS 2017 at the same time.

## Docker Multi-stage support

Since December 2017, Visual Studio 2017 15.5 and eShopOnContainers support [Docker Multi-stage](https://blogs.msdn.microsoft.com/stevelasker/2017/09/11/net-and-multistage-dockerfiles/), so compiling the .NET apps/projects and creating the Docker images can now be performed in a single step with "docker-compose build" or "docker build".

## Prerequisites (Software requirements)

1. [Docker for Windows](https://docs.docker.com/docker-for-windows/install/). Important: follow the specific configuration described in the steps below.
1. A Git client. The [git-scm site](https://git-scm.com/download/gui/mac) maintains a great list of clients.
1. (OPTIONAL) [Node.js](http://nodejs.org). The stable channel is fine as well.
1. (OPTIONAL) Bower (`npm install -g bower`), needed by the MVC web app.
1. [.NET Core SDK](http://dot.net). Install the latest SDK and runtime.
1. Any code editor, such as [Visual Studio Code](https://code.visualstudio.com/)

*IMPORTANT NOTE:* When building with Docker Multi-stage you don't actually need Node, NPM, Bower or even the .NET Core SDK installed on your local Windows machine, as the build image used by Docker Multi-stage has all the SDKs needed to compile the projects. However, we recommend installing them on Windows so you can do further development and testing.

# Setting up the development environment

## Installing and configuring Docker in your development machine

### Install Docker for Windows

Install Docker for Windows (the Stable channel should suffice) from this page: https://docs.docker.com/docker-for-windows/install/

For further information on Docker for Windows, check this additional page:

https://docs.docker.com/docker-for-windows/

Docker for Windows uses Hyper-V to run a Linux VM, which is the default Docker host. If you don't have Hyper-V installed/enabled, it will be installed and you will probably need to reboot your machine. Docker's setup should warn you about this.

**IMPORTANT**: Check that you don't have any other hypervisor installed that might not be compatible with Hyper-V. For instance, Intel HAXM can be installed by VS 2017 if you choose to install Google's Android emulator, which runs on top of Intel HAXM. In that case, you'd need to uninstall Google's Android emulator and Intel HAXM.

VS 2017 recommends installing the Google Android emulator because it is the only Android emulator with support for the Google Play Store, Google Maps, etc. However, take into account that it is currently not compatible with Hyper-V, so you may run into incompatibilities in this scenario.

### Set the memory and CPU assigned to Docker

By default, the eShopOnContainers development environment runs 1 SQL Server instance as a container with multiple databases (one DB per microservice), 6 additional ASP.NET Core apps/services, each running as a container, plus 1 Redis server running as a container. Therefore, especially because of SQL Server's memory requirements, it is important to assign enough RAM and CPU to Docker, or you will get errors when starting the containers with "docker-compose up".

Once Docker for Windows is installed on your machine, open its Settings and select the Advanced option, so you can assign at least the minimum amount of memory and CPU (Memory: around 4096 MB, CPUs: 3), as shown in the image. You will usually need a machine with 16 GB of memory for an optimal configuration.

<img src="img/docker_settings.png">
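
To confirm the setting took effect, you can read the memory visible to Docker's Linux VM from `docker info`. This Python sketch assumes the `docker` CLI is on your PATH and compares the result against the roughly 4096 MB suggested above. The 10% tolerance is an assumption, because the VM usually reports somewhat less memory than the amount assigned in Settings.

```python
import subprocess

MIN_DOCKER_MEMORY_MB = 4096  # suggested minimum from this page
TOLERANCE = 0.9  # assumption: the VM reports a bit less than the amount assigned in Settings


def bytes_to_mb(raw: str) -> int:
    """Convert the byte count printed by `docker info` into whole MiB."""
    return int(raw.strip()) // (1024 * 1024)


def is_enough(memory_mb: int) -> bool:
    return memory_mb >= MIN_DOCKER_MEMORY_MB * TOLERANCE


def docker_memory_mb() -> int:
    # .MemTotal is the total memory of the Docker host (the Linux VM), in bytes.
    out = subprocess.run(
        ["docker", "info", "--format", "{{.MemTotal}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return bytes_to_mb(out)


if __name__ == "__main__":
    mb = docker_memory_mb()
    verdict = "OK" if is_enough(mb) else "too low: raise it in Settings > Advanced"
    print(f"Docker VM memory: {mb} MB ({verdict})")
```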

### Share drives in Docker settings

This step is optional but recommended, as Docker sometimes needs access to the shared drives when building, depending on the build actions.

With the default eShopOnContainers build process in the CLI, you don't need it.

But if you use Visual Studio, you must share the drive where your code resides.

The drive you'll need to share depends on where you place your source code.

<img src="img/docker_settings_shared_drives.png">

### IMPORTANT: Open ports in local Firewall so Authentication to the STS (Security Token Service container) can be done through the 10.0.75.1 IP which should be available and already setup by Docker. Also needed for client remote apps like Xamarin app or SPA app in remote browser.

- You can manually create a rule in the local firewall of your development machine, or you can create that rule by just executing the <b>add-firewall-rules-for-sts-auth-thru-docker.ps1</b> script available in the solution's **cli-windows** folder.

- Basically, you need to open ports 5100 to 5105, which are used by the solution, by creating an INBOUND RULE in your firewall, as shown in the screenshot below (for Windows).

<img src="img/firewall-rule-for-eshop.png">

- **NOTE:** If you get the error **Unable to obtain configuration from: `http://10.0.75.1:5105/.well-known/openid-configuration`**, you might need to allow the program `vpnkit` connections to and from any computer through all ports (see [issue #295](https://github.com/dotnet-architecture/eShopOnContainers/issues/295#issuecomment-327973650)).
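
If you prefer not to run the repo's PowerShell script, the following Python sketch creates a comparable inbound rule with Windows' built-in `netsh advfirewall` command. The rule name `eShopOnContainers` is hypothetical, and the repo's script may configure its rules differently. Run it from an elevated (Administrator) prompt.

```python
from __future__ import annotations

import subprocess
import sys


def firewall_rule_args(name: str = "eShopOnContainers", ports: str = "5100-5105") -> list[str]:
    """netsh arguments for an inbound TCP allow rule on the given port range."""
    return [
        "netsh", "advfirewall", "firewall", "add", "rule",
        f"name={name}",       # hypothetical rule name
        "dir=in",             # INBOUND rule
        "action=allow",
        "protocol=TCP",
        f"localport={ports}",  # ports used by the solution
    ]


if __name__ == "__main__":
    if sys.platform != "win32":
        raise SystemExit("This sketch only applies to the Windows firewall.")
    subprocess.run(firewall_rule_args(), check=True)
```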

## .NET Core SDK setup

(OPTIONAL) As mentioned, this requirement is optional because, when building through Docker Multi-Stage, the build uses the .NET SDK available within the ASP.NET Core build image, not the local .NET Core SDK. However, it is recommended to have it installed locally for further building/testing of the ASP.NET Core projects without Docker.

The .NET Core SDK installs the .NET Core framework plus the SDK CLI tools, with commands like "dotnet build", "dotnet publish", etc.

Install the .NET Core SDK from here:

https://www.microsoft.com/net/download/windows#/current

## Install NPM (Optional, this local installation is not required when using Docker Multi-Stage)

(OPTIONAL) As mentioned, this requirement is optional because, when building through Docker Multi-Stage, the build uses the dependencies available within the ASP.NET Core build image, not the software installed on the local machine/PC.

To build the JavaScript dependencies from the command line with npm, you need to install npm globally.

NPM is bundled with Node.js. Installing NPM and Node is straightforward with the installer package available at https://nodejs.org/en/

<img src="img/spa/installing_npm_node.png">

You can install the "Recommended For Most Users" (LTS) version of Node.

After installing Node, you can check the installed NPM version with the command <b>npm -v</b>, as shown below.

<img src="img/spa/npm-versions-powershell.png">
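
If you'd rather check from a script whether Node and NPM (and, optionally, the .NET Core SDK) are on your PATH, here is a small Python sketch. It only runs each tool with `--version` and does not install anything; the function name is illustrative.

```python
from __future__ import annotations

import shutil
import subprocess


def tool_version(tool: str) -> str | None:
    """Return the first line of `<tool> --version`, or None if the tool is not on PATH."""
    path = shutil.which(tool)  # on Windows this also resolves npm.cmd through PATHEXT
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    lines = result.stdout.strip().splitlines()
    return lines[0] if lines else None


if __name__ == "__main__":
    for tool in ("node", "npm", "dotnet"):
        print(f"{tool:7} {tool_version(tool) or 'not found'}")
```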

|

||||

|

||||

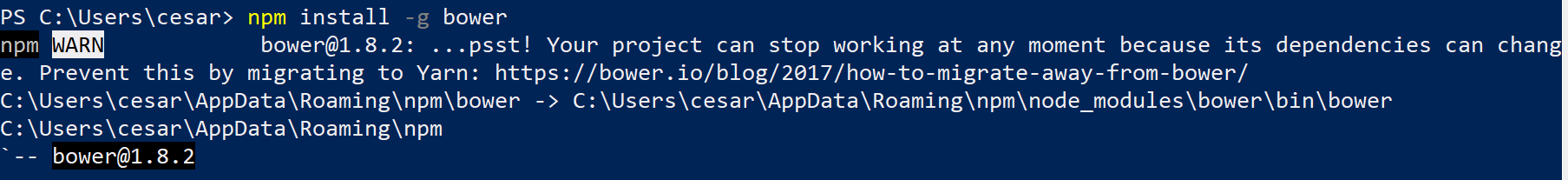

## Install Bower (Optional, this local installation is not required when using Docker Multi-Stage)

|

||||

|

||||

(OPTIONAL) As mentioned, this requirement is optional because when building through Docker Multi-Stage it will be using the dependencies available within the ASP.NET Core build image, not the installed software on the local machine/PC.

|

||||

|

||||

Bower is needed by minor dependencies at the MVC web app. If using Visual Studio, VS will handle this installation. But if using the CLI in Windows with no Docker multi-stage, then you'd need to install Bower globally by running the following NPM command:

|

||||

|

||||

`npm install -g bower `

|

||||

|

||||

|

||||

|

||||

# Clone the eShopOnContainers GitHub code Repository into your dev machine

|

||||

|

||||

## GitHub branch to use/pull

|

||||

Use the default branch at eShopOnContainers Github repo. The same branch's code supports Visual Studio 2017 or CLI scenarios, simultaneously, depending on each developer's preference.

|

||||

|

||||

Clone the code from: https://github.com/dotnet/eShopOnContainers.git

|

||||

as in the following screenshot:

|

||||

|

||||

<img src="img/cli-windows/git-clone-powershell.png">

|

||||

|

||||

# Compile the application's projects and build the Docker images with a single command thanks to Docker Multi-Stage

|

||||

|

||||

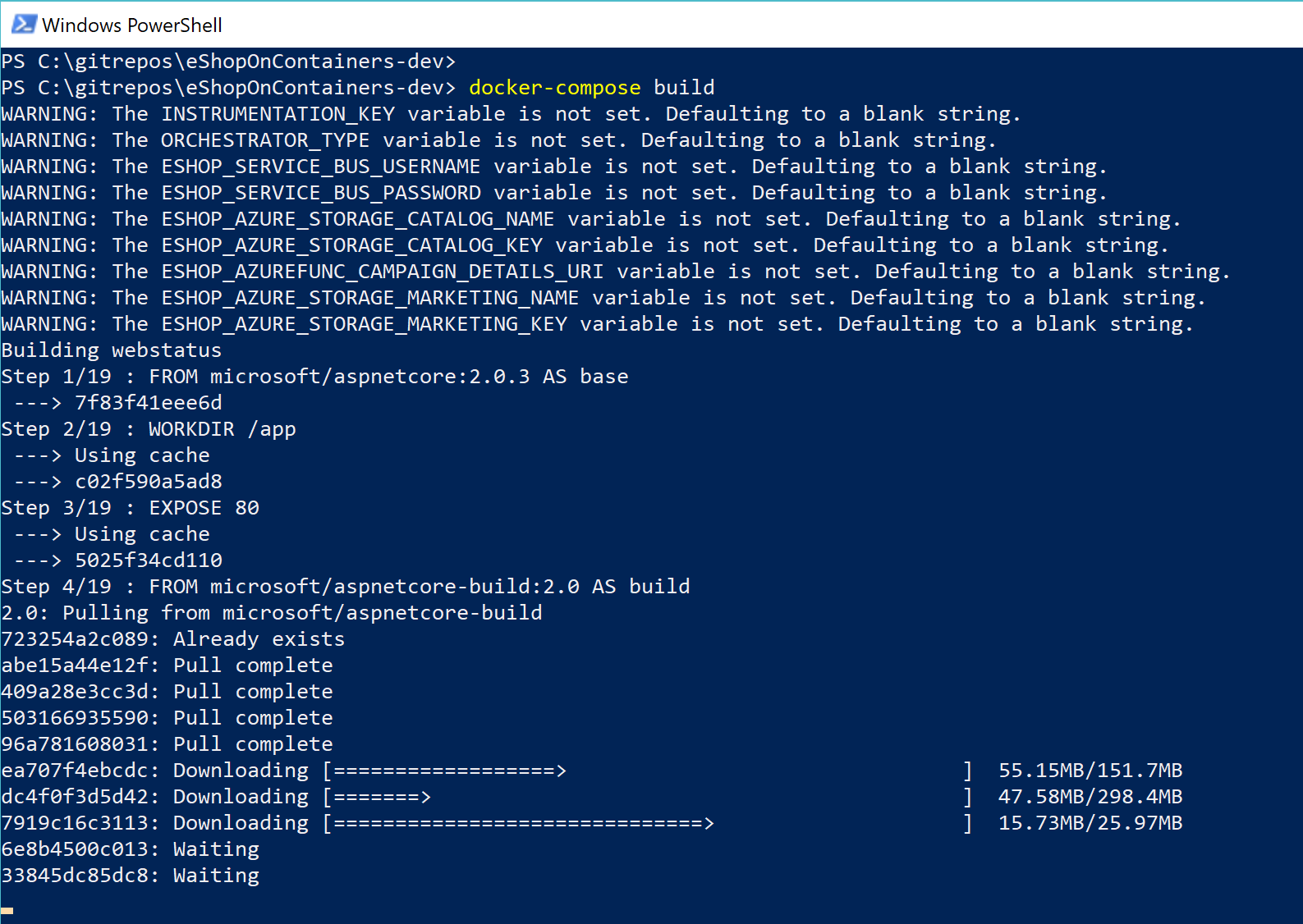

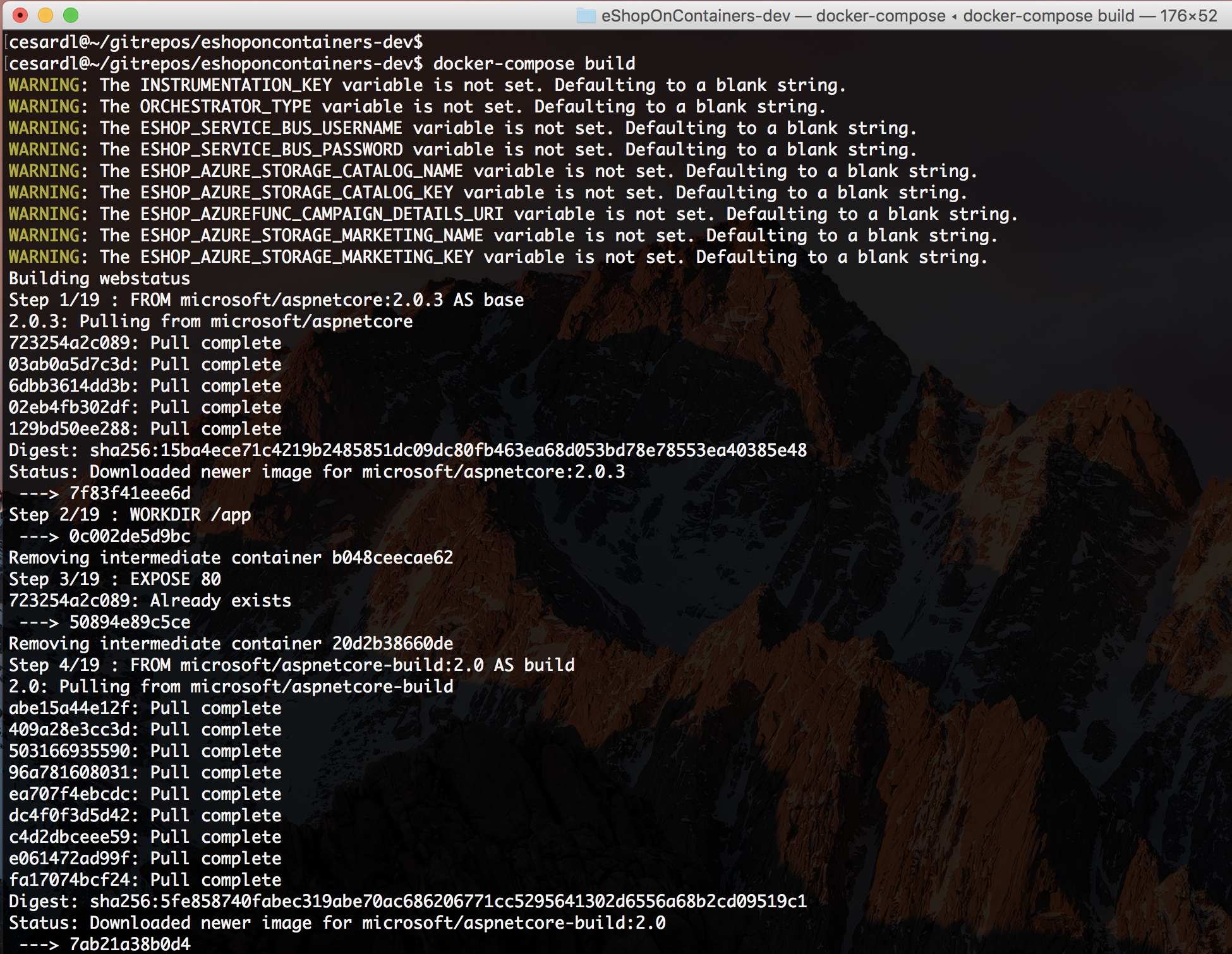

The recommended approach is to build the .NET application/microservices bits and Docker images with a single command based on [Docker Multi-Stage](https://blogs.msdn.microsoft.com/stevelasker/2017/09/11/net-and-multistage-dockerfiles/) by simply running the following commands within the solution's root folder:

|

||||

|

||||

Move to the root folder of the solution:

|

||||

|

||||

`cd YourPath\eShopOnContainers\`

|

||||

|

||||

Then, run the following docker command:

|

||||

|

||||

`docker-compose build`

|

||||

|

||||

|

||||

|

||||

The first time you run this command it'll take some more additional time as it needs to pull/download the aspnet-build image with the SDKs, so it'll take its time.

|

||||

|

||||

It should take a few minutes to compile the .NET Core projects plus the SPA application (TypeScript/JavaScript).

|

||||

|

||||

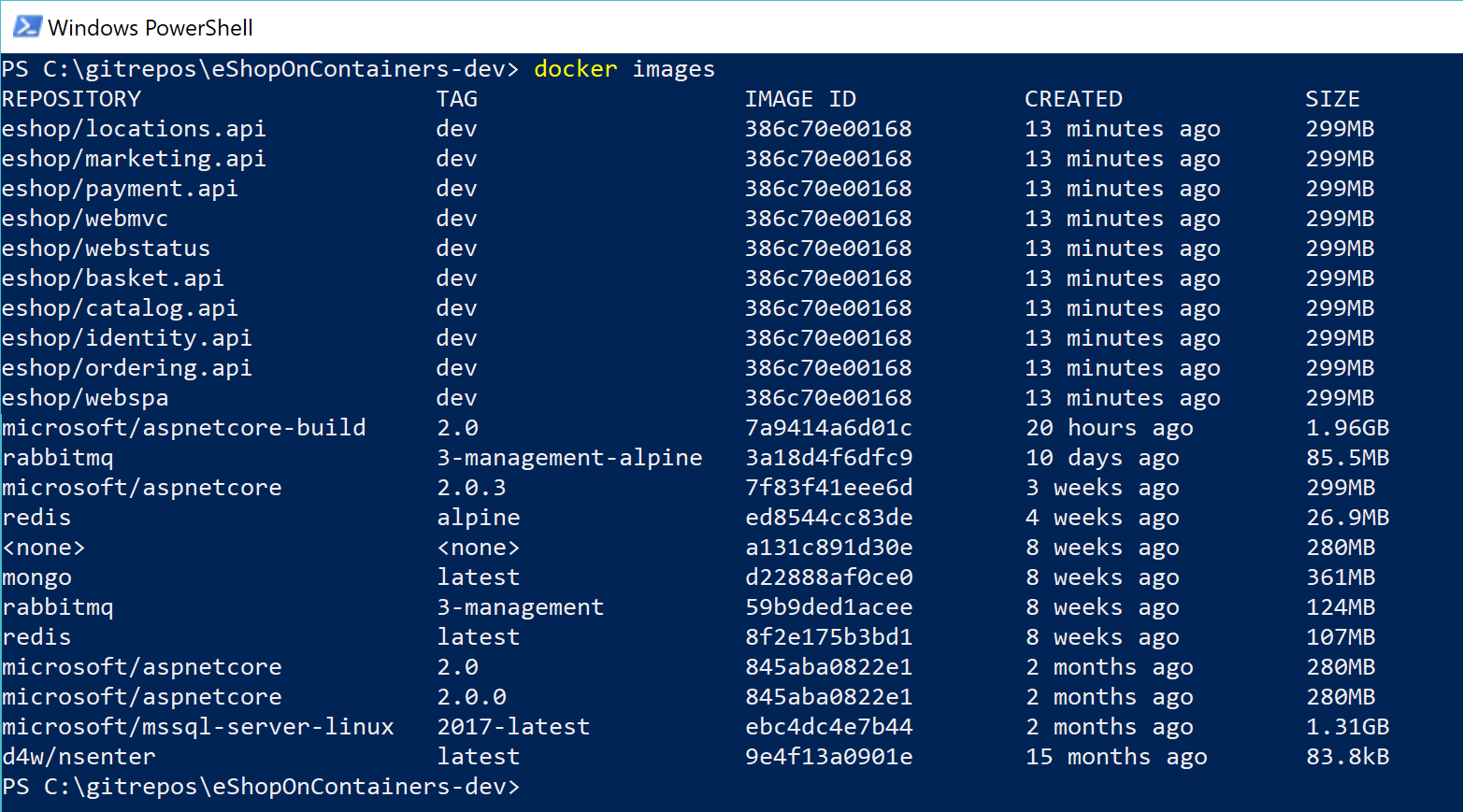

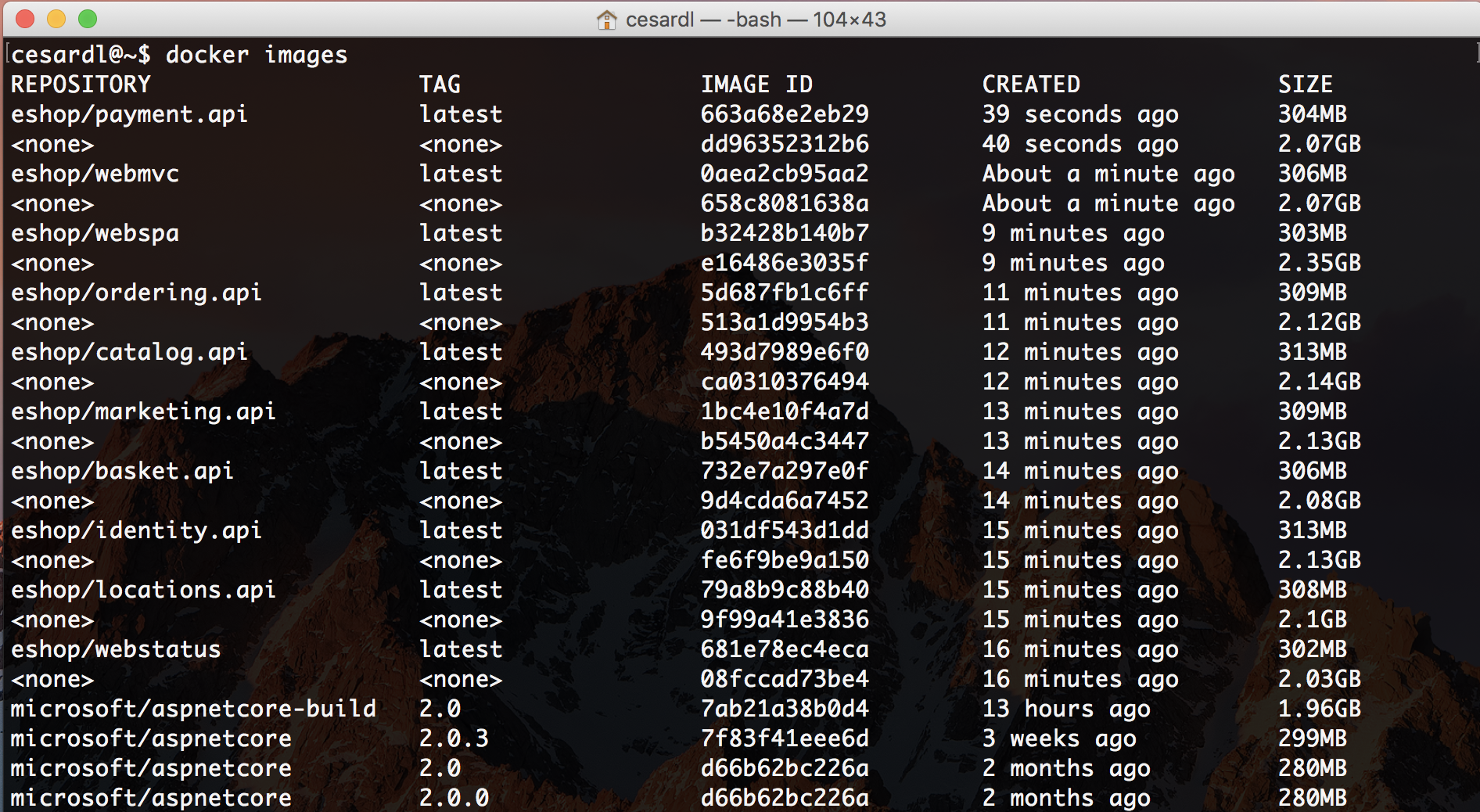

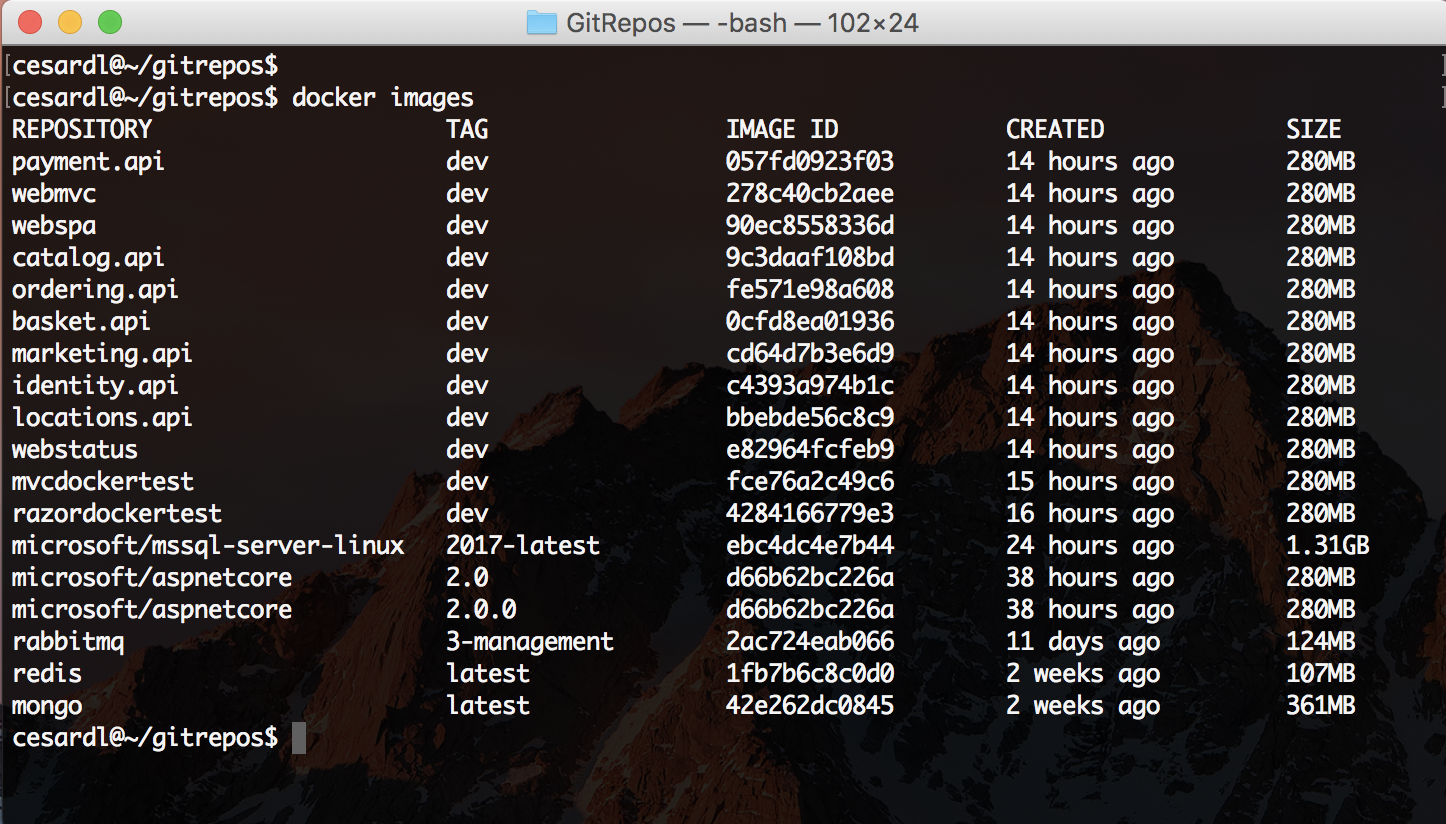

- You can check out with Docker CLI the images created by typing in the PowerShell console the command:

|

||||

|

||||

`docker images`

|

||||

|

||||

|

||||

|

||||

Those Docker images you see are the ones you have available in your local image repository in your machine.

|

||||

You might have additional images, but at least, you should see the the custom images starting with the prefix "eshop" which is the name of the image repo. The rest of the images that are not starting with "eshop" will probably be official base-images like the microsoft/aspnetcore or the SQL Server for Linux images, etc.
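
To list just those custom images, you can filter the `docker images` output. This Python sketch uses `--format` to print one `repository:tag` per line and keeps the entries whose repository starts with `eshop`, the prefix described above. It assumes the `docker` CLI is on your PATH.

```python
from __future__ import annotations

import subprocess


def eshop_images(listing: str, prefix: str = "eshop") -> list[str]:
    """Keep only 'repository:tag' lines whose repository starts with the prefix."""
    return [line.strip() for line in listing.splitlines() if line.strip().startswith(prefix)]


if __name__ == "__main__":
    out = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    for image in eshop_images(out):
        print(image)
```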

|

||||

|

||||

# Deploy the containers into the local Docker host

|

||||

|

||||

With a single command you can deploy the whole solution into your local Docker host by just executing the following:

|

||||

|

||||

`docker-compose up`

|

||||

|

||||

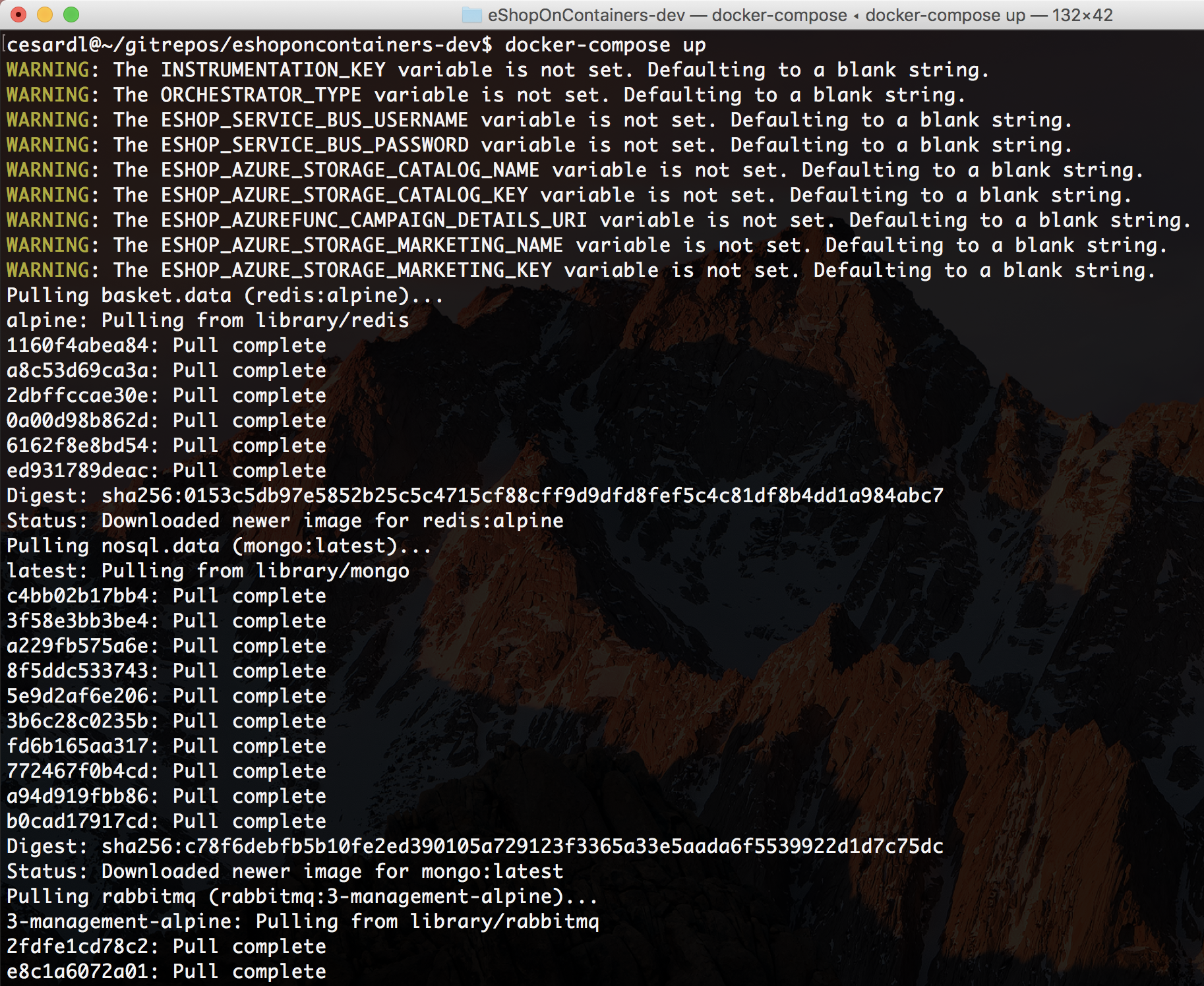

<img src="img/cli-windows/docker-compose-up-1.png">

|

||||

|

||||

Note that the first time you try to run the application (with docker run or docker-compose) it detects that it needs a few related infrastructure images, like the SQL Server image, Redis image, RabbitMQ image, etc. so it will pull or download those base images from the Internet, from the public repo at the Docker registry named DOCKER HUB, by pulling the "microsoft/mssql-server-linux" which is the base image for the SQL Server for Linux on containers, and the "library/redis" which is the base Redis image, and so on. Therefore, the first time you run "docker-compose up" it might take a few minutes pulling those images before it spins up your custom containers.

|

||||

|

||||

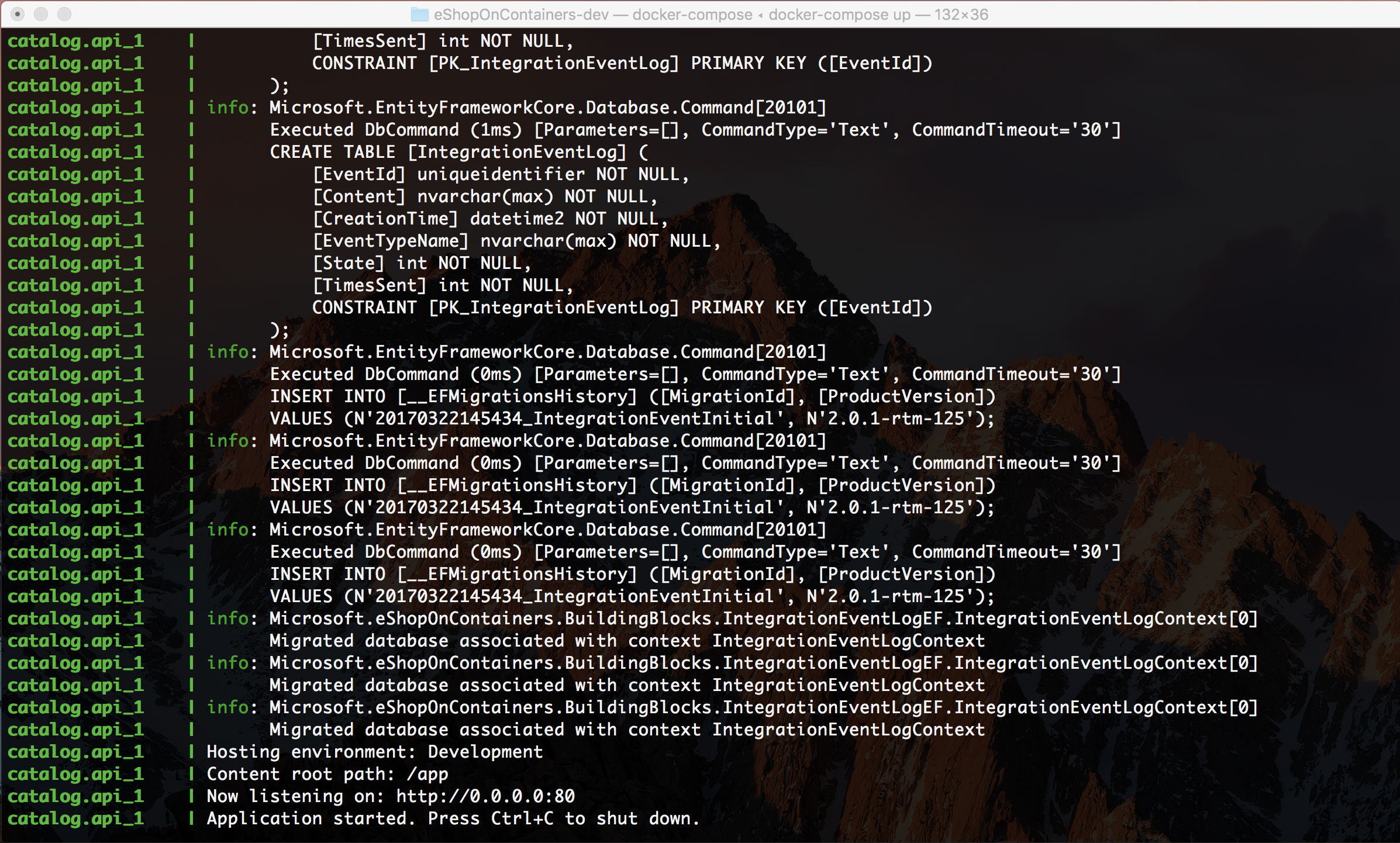

Finally, you can see how the scripts waits after deploying all the containers:

|

||||

|

||||

<img src="img/cli-windows/docker-compose-up-1.2.png">

|

||||

|

||||

- The next time you run "docker-compose up" again, because you already have all the base images downloaded and registered in your local repo and your custom images built and ready to go, it'll be much faster since it just needs to deploy the containers, like the following screenshot:

|

||||

|

||||

<img src="img/cli-windows/docker-compose-up-2.png">

|

||||

|

||||

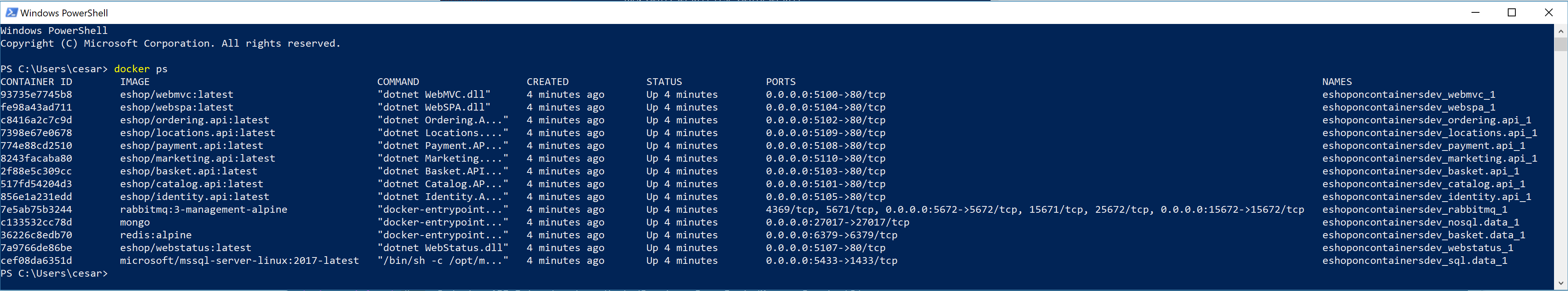

- <b>Check out the containers running in your Docker host</b>: Once docker-compose up finishes, you will have the original PowerShell window busy and showing the execution's output in a "wait state", so in order to ask to Docker about "how it went" and see what containers are running, you need to open a second PowerShell window and type "docker ps" so you'll see all the running containers, as shown in the following screenshot.
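
Because SQL Server and the services take a while to start, the web app may not answer immediately after `docker-compose up` settles. If you script your tests, a polling helper like this Python sketch (not part of the repo) can wait until `http://localhost:5100` responds before you open the browser. It treats any HTTP response, including an error status, as "up".

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll url until it answers with any HTTP status, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval_s):
                return True
        except urllib.error.HTTPError:
            return True  # the server is up, it just returned 4xx/5xx
        except (urllib.error.URLError, OSError):
            pass  # not listening yet (or connection reset while starting)
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


if __name__ == "__main__":
    ok = wait_for_http("http://localhost:5100")
    print("Web MVC is up" if ok else "Web MVC did not respond in time")
```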

|

||||

|

||||

|

||||

|

||||

|

||||

### Test the MVC Web app

|

||||

Open a browser and type `http://localhost:5100/` and hit enter.

|

||||

You should see the MVC application like in the following screenshot:

|

||||

|

||||

<img src="img/eshop-webmvc-app-screenshot.png">

|

||||

<br>

|

||||

|

||||

|

||||

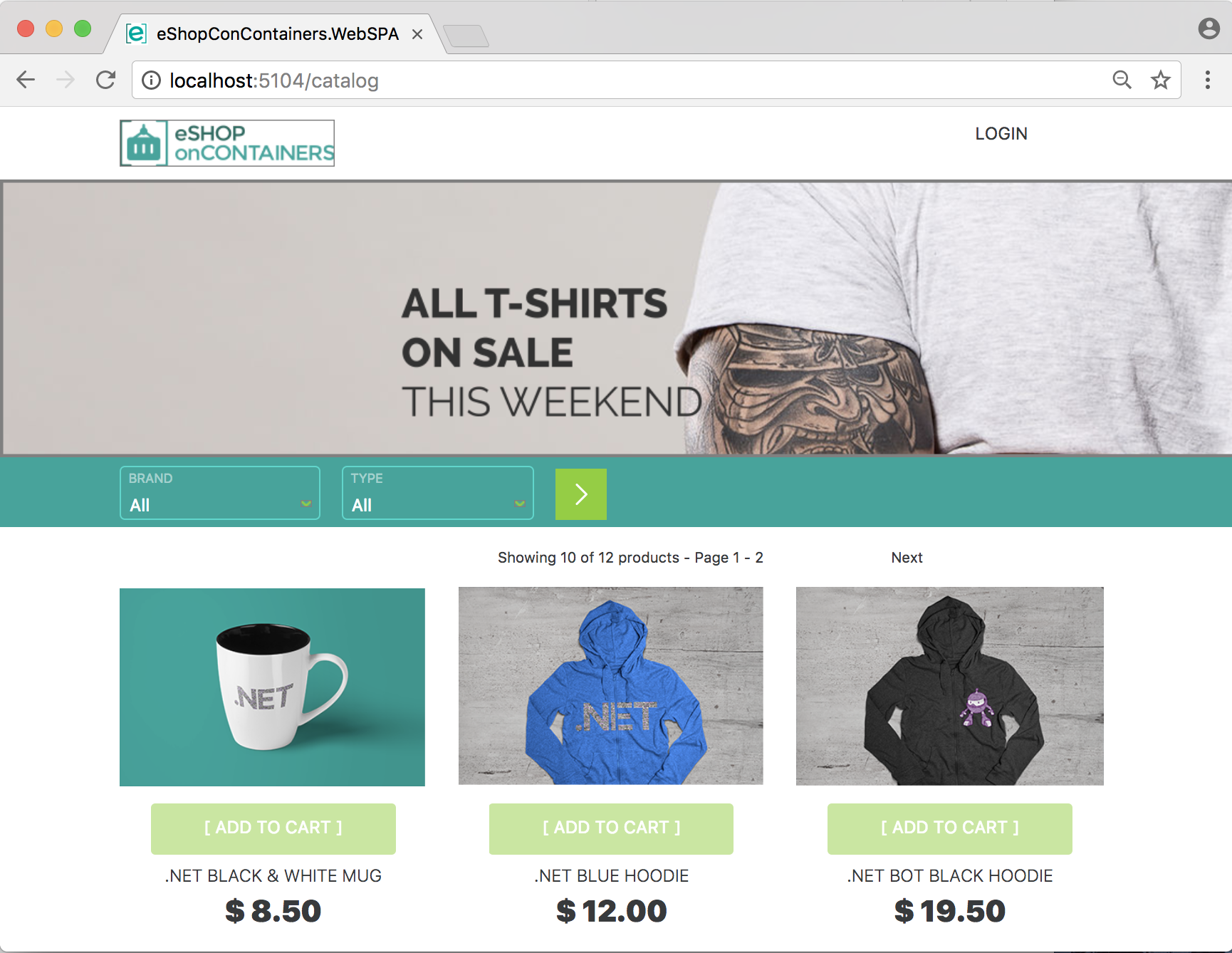

### Test the SPA Web app

|

||||

Open a browser and type `http://localhost:5104/` and hit enter.

|

||||

You should see the SPA application like in the following screenshot:

|

||||

|

||||

<img src="img/eshop-webspa-app-screenshot.png">

|

||||

<br>

|

||||

|

||||

|

||||

### Test a microservice's Swagger interface (i.e. the Catalog microservice)

|

||||

Open a browser and type `http://localhost:5101` and hit enter.

|

||||

You should see the Swagger page for that microservice that allows you to test the Web API, like in the following screenshot:

|

||||

|

||||

<img src="img/swagger-catalog-1.png">

|

||||

|

||||

Then, after providing the size (i.e. 10) and the current page (i.e. 1) for the data of the catalog, you can run the service hitting the "Try it out!" button and see the returned JSON Data:

|

||||

<img src="img/swagger-catalog-2.png">

|

||||

|

||||

<br>

|

||||

|

||||

### Using Visual Studio Code to edit C# code or .yml code

After installing VS Code from <a href='https://code.visualstudio.com/'>Visual Studio Code</a>, you can edit a particular file or "open" the whole solution folder, as in the following screenshots:

`Opening the Solution's folder`

<img src="img/cli-windows/vs-code-1.png">

`Editing a .yml file`

<img src="img/cli-windows/vs-code-2.png">

It is also recommended to install the C# extension and the Docker extension for VS Code:

<img src="img/cli-windows/vs-code-3-extensions.png">

----

### Testing all the applications and microservices

Once the containers are deployed, you should be able to access any of the services at the following URLs or connection strings from your dev machine:

- Web MVC: <a href="http://localhost:5100" target="top">http://localhost:5100</a>
- Web SPA: <a href="http://localhost:5104" target="top">http://localhost:5104</a> (Important: check how to set up the SPA app and its requirements before building the Docker images. Instructions at https://github.com/dotnet/eShopOnContainers/tree/master/src/Web/WebSPA/eShopOnContainers.WebSPA or in the README.MD in eShopOnContainers/src/Web/WebSPA/eShopOnContainers.WebSPA)
- Catalog microservice: <a href="http://localhost:5101" target="top">http://localhost:5101</a> (Not secured)
- Ordering microservice: <a href="http://localhost:5102" target="top">http://localhost:5102</a> (Requires a token for authorization)
- Basket microservice: <a href="http://localhost:5103" target="top">http://localhost:5103</a> (Requires a token for authorization)
- Identity microservice: <a href="http://localhost:5105" target="top">http://localhost:5105</a>
- Orders database (SQL Server connection string): `Server=tcp:localhost,5432;Database=Microsoft.eShopOnContainers.Services.OrderingDb;User Id=sa;Password=Pass@word;`
- Catalog database (SQL Server connection string): `Server=tcp:localhost,5434;Database=CatalogDB;User Id=sa;Password=Pass@word`
- ASP.NET Identity database (SQL Server connection string): `Server=localhost,5433;Database=aspnet-Microsoft.eShopOnContainers;User Id=sa;Password=Pass@word`
- Basket data (Redis): listening at localhost:6379
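
The connection strings above can also be used to check that the database and Redis ports are reachable from your dev machine. This Python sketch parses the `Server=host,port` part of each SQL Server connection string and only opens a TCP connection. It does not log in, so it confirms that the port is listening, not that the credentials work. The ports are copied from the list above; adjust them if your compose files map different ones.

```python
from __future__ import annotations

import socket

CONNECTIONS = {
    "Orders DB": "Server=tcp:localhost,5432;Database=Microsoft.eShopOnContainers.Services.OrderingDb;User Id=sa;Password=Pass@word;",
    "Catalog DB": "Server=tcp:localhost,5434;Database=CatalogDB;User Id=sa;Password=Pass@word",
    "Identity DB": "Server=localhost,5433;Database=aspnet-Microsoft.eShopOnContainers;User Id=sa;Password=Pass@word",
}
REDIS = ("localhost", 6379)


def parse_connection_string(conn: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' into a dict with lower-cased keys."""
    pairs = (part.split("=", 1) for part in conn.split(";") if "=" in part)
    return {key.strip().lower(): value.strip() for key, value in pairs}


def sql_host_port(conn: str) -> tuple[str, int]:
    """Extract host and port from 'Server=[tcp:]host,port' (1433 if no port is given)."""
    server = parse_connection_string(conn)["server"]
    if server.lower().startswith("tcp:"):
        server = server[len("tcp:"):]
    host, _, port = server.partition(",")
    return host, (int(port) if port else 1433)


def tcp_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if something accepts a TCP connection on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for name, conn in CONNECTIONS.items():
        host, port = sql_host_port(conn)
        print(f"{name:12} {host}:{port} {'open' if tcp_open(host, port) else 'closed'}")
    print(f"{'Redis':12} {REDIS[0]}:{REDIS[1]} {'open' if tcp_open(*REDIS) else 'closed'}")
```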

#### Creating an Order and Authenticating on the Web MVC application with the DemoUser@microsoft.com user account

When you try the Web MVC application at http://localhost:5100, you can test the home page, which is also the catalog page. But to add articles to the basket you need to log in first, at the login page handled by the STS (Security Token Service) microservice/container. At this point, you can register your own user/customer, or you can use the convenient default user/customer **demoUser@microsoft.com**, so you don't need to register your own user and testing is easier.

The credentials for this demo user are:

- User: **demouser@microsoft.com**
- Password: **Pass@word1**

Below you can see the login page when providing those credentials.

<img src="img/login-demo-user.png">

#### Trying the Xamarin.Forms mobile apps for Android, iOS and Windows

You can deploy the Xamarin app to real iOS, Android or Windows devices.

You can also test it on a Hyper-V based Android emulator, like the Visual Studio Android Emulator. (Do NOT install Google's Android emulator, or it will break Docker and Hyper-V, as mentioned above.)

By default, the Xamarin app shows fake data from mock services. To actually access the microservices/containers in Docker from the mobile app, you need to:

- Disable the mock services in the Xamarin app by setting <b>UseMockServices = false</b> in App.xaml.cs, and specify your host's IP in BaseEndpoint (for example, BaseEndpoint = "http://10.106.144.28") in GlobalSettings.cs. Both files are in the Xamarin.Forms (PCL) project.
- Alternatively, change that IP through the app UI, by modifying the IP address in the app's Settings page, as shown in the screenshot below.
- In addition, make sure that the TCP ports used by the services are open in the local firewall.

<img src="img/xamarin-settings.png">

## Sending feedback and pull requests

We'd appreciate your feedback, improvements and ideas.

You can create new issues in the issues section, submit pull requests and/or send emails to eshop_feedback@service.microsoft.com

## Questions

[QUESTION] Answer +1 if the solution is working for you (through VS2017 or the CLI environment):

https://github.com/dotnet/eShopOnContainers/issues/107

# Superseded

This wiki page has been superseded by:

- [Windows setup](Windows-setup)

@ -1,313 +1,5 @@

|

||||

# Global prerequisites

|

||||

# Superseded

|

||||

|

||||

## Docker for Mac

|

||||

Install [Docker for Mac](https://docs.docker.com/docker-for-mac/install/). The stable channel is fine.

|

||||

This wiki page has been superseded by:

|

||||

|

||||

## .NET Core SDK

|

||||

Make sure you have the latest '.NET Core 2.1 SDK' installed from: https://www.microsoft.com/net/download

|

||||

|

||||

## Configure Docker for Mac

|

||||

|

||||

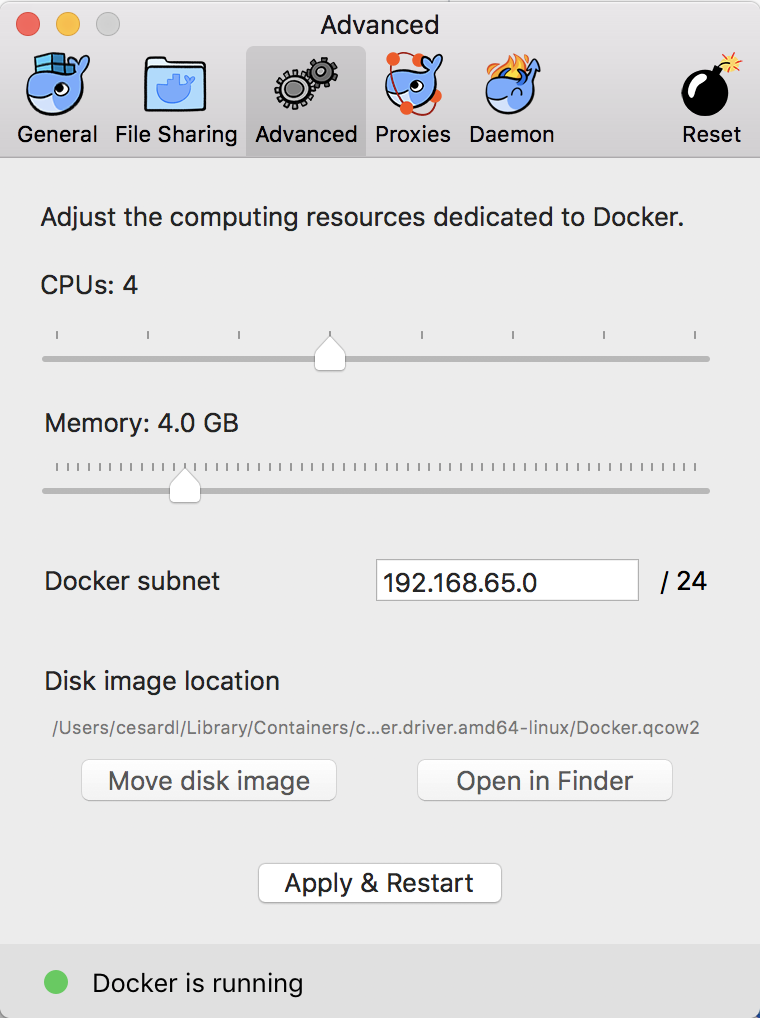

### Docker for Mac (Linux VM) memory assigned

|

||||

The SQL Server image for Docker requires more memory to run. You will need to update your Docker settings to allocate at least 4 GB of memory:

|

||||

|

||||

|

||||

|

||||

Depending on how many apps you are running in your Mac you might need to assign more memory to Docker in the Mac. Usually, 4GB should suffice, but we got feedback from devs who needed to assign up to 8GB of ram to Docker in the Mac.

|

||||

|

||||

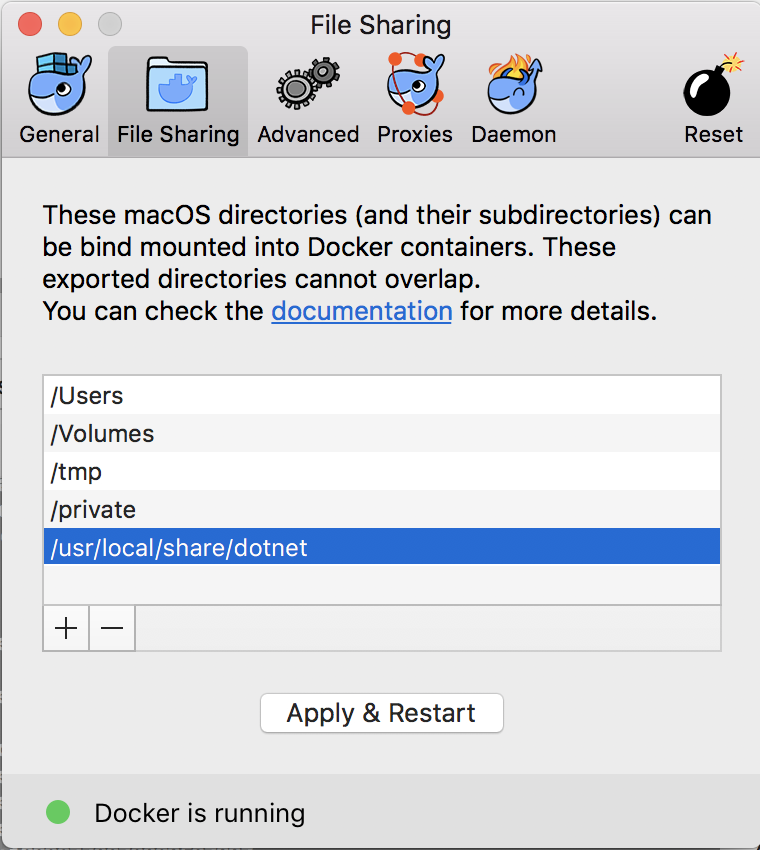

### Folder shares in Docker for Mac

|

||||

If your projects are placed within the /Users folder, you don't need to configure anything additional, as that is a pre-shared folder. However, if you place your projects under a different path, like /MyRootProjects, then you'd need to add that shared folder to Docker's configuration.

|

||||

|

||||

If using Visual Studio for Mac, it is also important that you share the folder `/usr/local/share/dotnet`, like here:

|

||||

|

||||

|

||||

|

||||

## Docker Multi-stage support

|

||||

Since December 2017, eShopOnContainers supports [Docker Multi-stage](https://blogs.msdn.microsoft.com/stevelasker/2017/09/11/net-and-multistage-dockerfiles/), therefore, the steps in order to compile the .NET apps/projects before creating the Docker images can now be performed with a single command based on "docker-compose build" or "docker build".

|

||||

|

||||

|

||||

# Option A: Use a CLI environment (dotnet CLI, Docker CLI with the bash shell) and VS Code as plain editor

|

||||

|

||||

As a summary, with the following simple CLI commands in a bash window you'll be able to build the Docker images and deploy the containers into your local Docker host:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/dotnet-architecture/eShopOnContainers.git

|

||||

$ cd eShopOnContainers

|

||||

$ docker-compose build

|

||||

$ docker-compose up

|

||||

```

|

||||

|

||||

The `docker-compose build` is in reality optional because if you run the `docker-compose up` command and you don't have the Docker images built, Docker will run the `docker-compose build` under the covers.

|

||||

But splitting the commands in two makes it clearer for you to know what it is doing.

|

||||

|

||||

The following explanations show you in detail how you should set it up, build and deploy.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

1. [Docker for Mac](https://docs.docker.com/docker-for-mac/install/). You should already have this.
1. A Git client. The [git-scm site](https://git-scm.com/download/gui/mac) maintains a great list of clients.
1. (OPTIONAL) NPM, installed with [Node.js](http://nodejs.org). The stable channel is fine.
1. (OPTIONAL) Bower (`sudo npm install -g bower`), needed by the MVC web app.
1. [.NET Core](http://dot.net). Install the SDK, which also includes the runtime.
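
To confirm which of these tools are already installed, a quick check like the following can help. It is a sketch: the function name `check_prereqs` is hypothetical, and it assumes the tools above are invoked through their usual executables (`docker`, `git`, `node`, `npm`, `bower`, `dotnet`).

```bash
# Hypothetical helper: prints "missing: <tool>" for every command that is not
# found on PATH, and returns 1 if anything is missing (0 otherwise).
check_prereqs() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run `check_prereqs docker git` for the required tools and `check_prereqs node npm bower dotnet` for the optional ones.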

### (OPTIONAL) Installation of NPM/Node, Bower and .NET Core SDK on the local Mac is not required when using Docker Multi-Stage

SDKs and dependencies such as NPM, Bower, and even the .NET Core SDK are optional, because a Docker Multi-Stage build uses the dependencies available inside the ASP.NET Core build image container instead of the software installed on your local machine/Mac.

However, if you plan to develop .NET apps on the Mac or customize eShopOnContainers, it is recommended that you install all the dependencies locally as well.

# Compile the application's projects and build the Docker images with a single command thanks to Docker Multi-Stage

The recommended approach is to build the .NET application/microservices bits and Docker images with a single command based on [Docker Multi-Stage](https://blogs.msdn.microsoft.com/stevelasker/2017/09/11/net-and-multistage-dockerfiles/) by simply running the following commands within the solution's root folder:

Move to the root folder of the solution:

`cd YourPath/eShopOnContainers/`

Then run the following Docker command:

`docker-compose build`
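
Because the build has to run from the solution root, you may find it convenient to wrap both steps in a function that checks for the compose file first. This is a sketch under assumptions: `eshop_build` and `COMPOSE` are hypothetical names, and it assumes `docker-compose.yml` sits directly in the root folder you `cd` into above.

```bash
# Hypothetical helper: builds all images from the eShopOnContainers root folder.
# Assumes docker-compose.yml lives directly in that folder.
eshop_build() {
  local root="$1"
  if [ -z "$root" ] || [ ! -f "$root/docker-compose.yml" ]; then
    echo "eshop_build: no docker-compose.yml found in '${root}'" >&2
    return 1
  fi
  # Run in a subshell so the caller's current directory does not change.
  # ${COMPOSE:-docker-compose} is left unquoted so "docker compose" also works.
  ( cd "$root" && ${COMPOSE:-docker-compose} build )
}
```

For example, `eshop_build ~/src/eShopOnContainers` (a hypothetical clone location) builds the images without leaving your current folder.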

The first time you run this command, it takes longer because it needs to pull (download) the aspnet-build image with the SDKs.

Compiling all the .NET Core projects plus the SPA application (Angular/TypeScript/JavaScript), which has additional build steps and NPM dependencies, should take a few minutes.

When the `docker-compose build` command finishes, you can list the images it created by running the following Docker CLI command in Bash:

`docker images`

The images listed are the ones available in the local image repository on your machine.

You might have additional images, but you should at least see the custom images whose names start with the prefix "eshop", which is the name of the image repo. Images that do not start with "eshop" are probably official base images, such as microsoft/aspnetcore or the SQL Server for Linux image.
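
If the list is long, you can narrow it to the custom images. Docker's own filter option should do this (for example, `docker images --filter reference='eshop/*'`); alternatively, the following sketch filters the plain `docker images` output in Bash. The function name `eshop_images` is hypothetical, and it assumes the default table output, where the first column is REPOSITORY and the second is TAG.

```bash
# Hypothetical helper: reads `docker images` table output on stdin and prints
# REPOSITORY:TAG for each image whose repository name starts with "eshop".
eshop_images() {
  # NR > 1 skips the header row; $1 is REPOSITORY and $2 is TAG.
  awk 'NR > 1 && $1 ~ /^eshop/ { print $1 ":" $2 }'
}
```

Usage: `docker images | eshop_images`.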

# Deploy the containers into the local Docker host

You can deploy the whole solution into your local Docker host with a single command:

`docker-compose up`

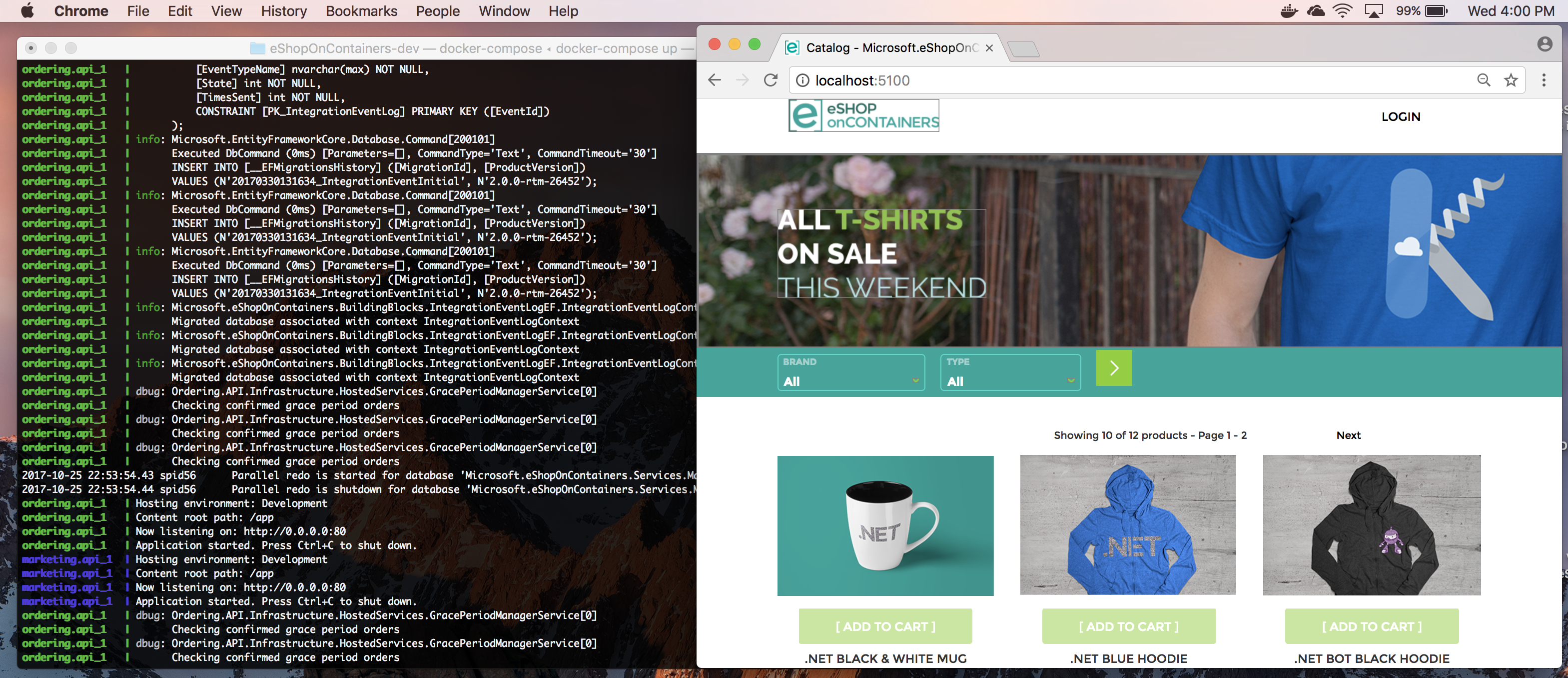

You can ignore the warnings about Azure environment variables. Those variables are only needed if you use infrastructure services in Azure (Azure SQL Database, Redis as a service, Azure Service Bus, etc.), which is the "next step" when using eShopOnContainers.
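
To check which variables the warnings refer to, and confirm that they are only the Azure-related ones, you can collect the variable names from Docker Compose's output. This is a sketch under assumptions: it expects warnings worded like `The NAME variable is not set. Defaulting to a blank string.` (the wording used by the classic `docker-compose` binary), assumes the Azure variables contain `AZURE` in their names, and the function name `unset_compose_vars` is hypothetical.

```bash
# Hypothetical helper: reads docker-compose output on stdin and prints, once
# each, the name of every variable reported as "The NAME variable is not set".
unset_compose_vars() {
  sed -n 's/.*The \([A-Za-z_][A-Za-z0-9_]*\) variable is not set.*/\1/p' | sort -u
}
```

For example, `docker-compose config 2>&1 >/dev/null | unset_compose_vars | grep -v AZURE` sends only the warnings (stderr) through the filter and prints any unset variable that is not Azure-related; no output suggests that the warnings can safely be ignored.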

Note that the first time you run the application (with `docker run` or `docker-compose`), Docker detects that it needs several related infrastructure images, such as the SQL Server, Redis, and RabbitMQ images. It pulls (downloads) those base images from the public Docker registry, Docker Hub: for example, "microsoft/mssql-server-linux", the base image for SQL Server for Linux in containers, and "library/redis", the base Redis image. Therefore, the first time you run `docker-compose up`, it might take a few minutes to pull those images before it starts your custom containers.
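
If you prefer to download these base images ahead of time, so that the first `docker-compose up` mainly has to start containers, you can pull them explicitly. In this sketch, `prepull_images` and the `DOCKER` override variable are hypothetical, and the image names you pass should match the ones (including tags) referenced in the solution's docker-compose files.

```bash
# Hypothetical helper: pulls each image given as an argument, stopping at the
# first failure. DOCKER defaults to the docker CLI and can be overridden.
prepull_images() {
  local image
  for image in "$@"; do
    ${DOCKER:-docker} pull "$image" || return 1
  done
}
```

For example, `prepull_images microsoft/mssql-server-linux redis` pulls the `latest` tags of the two images named above, which may differ from the tags the compose files actually use.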
